Aside from working around the apparent weakness in "LevinRule"* as others have suggested, here is another way to verify that the total probability is 1, namely by changing variables.

{transformation} = Solve[{u1, u2} == {Log[x1/(1 - x1 - x2)], Log[x2/(1 - x1 - x2)]}, {x1, x2}, Reals]

(*
{{x1 -> E^u1/(1 + E^u1 + E^u2),
  x2 -> -E^-u1 (-E^u1 + E^u1/(1 + E^u1 + E^u2) + E^(2 u1)/(1 + E^u1 + E^u2))}}
*)

jacobian = Det@D[{x1, x2} /. transformation, {{u1, u2}}] // Simplify

(* E^(u1 + u2)/(1 + E^u1 + E^u2)^3 *)
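As a cross-check (a sketch, not part of the derivation above): the x2 returned by Solve simplifies to E^u2/(1 + E^u1 + E^u2), and with that form the Jacobian turns out to be exactly the product x1 x2 x3, with x3 = 1 - x1 - x2. Assuming f has the usual logistic-normal denominator 2 π x1 x2 (1 - x1 - x2), that is why the Jacobian cancels it. The names inv and jac are introduced here only for illustration.

```mathematica
(* Simpler form of the inverse map; x1 is as returned by Solve above *)
inv = {x1 -> E^u1/(1 + E^u1 + E^u2), x2 -> E^u2/(1 + E^u1 + E^u2)};

(* Check that this x2 agrees with the x2 returned by Solve *)
Simplify[E^u2/(1 + E^u1 + E^u2) -
   (-E^-u1 (-E^u1 + E^u1/(1 + E^u1 + E^u2) + E^(2 u1)/(1 + E^u1 + E^u2)))]
(* 0 *)

(* Jacobian determinant d(x1, x2)/d(u1, u2) *)
jac = Simplify@Det@D[{x1, x2} /. inv, {{u1, u2}}];

(* Difference from x1 x2 (1 - x1 - x2) evaluated on the same map *)
Simplify[jac - (x1 x2 (1 - x1 - x2) /. inv)]
(* 0 *)
```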

(* new limits of integration *)

Reduce[{u1, u2} == {Log[x1/(1 - x1 - x2)], Log[x2/(1 - x1 - x2)]} &&
  0 < x1 < 1 && 0 < x2 < 1 - x1, {u1, u2}, {x1, x2}]

(* (u1 | u2) ∈ Reals *)

Integrate[
  f[x1, x2, 0, 0, 1, 1, 0] * jacobian /. transformation,
  {u1, -∞, ∞}, {u2, -∞, ∞}] (* implied by (u1 | u2) ∈ Reals *)

(* 1 *)
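Why the result is exactly 1 can also be seen by hand, assuming f[x1, x2, 0, 0, 1, 1, 0] is the logistic-normal density Exp[-(Log[x1/x3]^2 + Log[x2/x3]^2)/2]/(2 π x1 x2 x3) with x3 = 1 - x1 - x2 (my reading of the parameters, not confirmed here). Under the inverse map the log-ratios become u1 and u2, and the Jacobian cancels 1/(x1 x2 x3), leaving the standard bivariate normal density. A minimal sketch:

```mathematica
(* Inverse of the additive logistic map; equivalent to the Solve output above *)
inv = {x1 -> E^u1/(1 + E^u1 + E^u2), x2 -> E^u2/(1 + E^u1 + E^u2)};

(* The ratios x1/x3 and x2/x3 collapse to exponentials ... *)
ratios = Simplify[{x1/(1 - x1 - x2), x2/(1 - x1 - x2)} /. inv]
(* {E^u1, E^u2} *)

(* ... so for real u1, u2 their logarithms are just u1 and u2 *)
Simplify[Log[ratios], Element[u1 | u2, Reals]]
(* {u1, u2} *)

(* What remains (density times Jacobian) is the standard bivariate normal density *)
Integrate[Exp[-(u1^2 + u2^2)/2]/(2 Pi), {u1, -Infinity, Infinity}, {u2, -Infinity, Infinity}]
(* 1 *)
```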

*That "LevinRule" and "MultidimensionalRule" are the automatically chosen methods for the OP's integral can be seen with the approach presented in Determining which rule NIntegrate selects automatically, or by inspecting the calls to the integration rules collected via

{calls} = Last@Reap@NIntegrate[f[x1, x2, 0, 0, 1, 1, 0], {x1, 0, 1}, {x2, 0, 1 - x1},
   IntegrationMonitor :> (Sow[#1] &)]

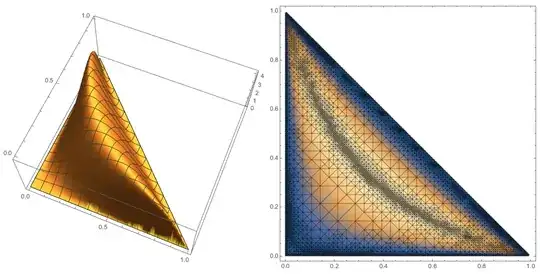

The output can be seen in the image above. I hesitate to call this a bug if it turns out to be a hard-to-detect edge case; numerical routines often have limitations. There are two issues: why "LevinRule" is chosen at all, and why "LevinRule" gives the wrong approximations 0.36, or 0.5 if 1/(2 Pi) is factored out of the integral sign (as noted here).

Further remarks on the choice of "LevinRule" and a strange workaround.

NIntegrate seems to conclude that the integral is oscillatory, for the following reason. The domain of integration is mapped to the unit square, which transforms the integrand to

Exp[1/2 (-Log[x1/(1 - x1 - (1 - x1) x2)]^2 - Log[((1 - x1) x2)/(1 - x1 - (1 - x1) x2)]^2)] /
  (2 π x1 x2 (1 - x1 - (1 - x1) x2))
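To see where this expression comes from, here is a sketch that applies the unit-square map x2 -> (1 - x1) x2, together with its Jacobian factor 1 - x1, to a stand-in density and compares the result numerically with the expression above, read as Exp[...] divided by 2 π x1 x2 (1 - x1 - (1 - x1) x2). The function pdf is hypothetical: it assumes f[x1, x2, 0, 0, 1, 1, 0] is the logistic-normal density with zero means, unit variances and zero correlation, since f itself is not shown here.

```mathematica
(* Hypothetical stand-in for the OP's f[x1, x2, 0, 0, 1, 1, 0] (an assumption) *)
pdf[a_, b_] := Exp[-(Log[a/(1 - a - b)]^2 + Log[b/(1 - a - b)]^2)/2]/
   (2 Pi a b (1 - a - b));

(* Unit-square map x2 -> (1 - x1) x2, times its Jacobian factor (1 - x1) *)
mapped = pdf[x1, (1 - x1) x2] (1 - x1);

(* The transformed integrand quoted above *)
shown = Exp[1/2 (-Log[x1/(1 - x1 - (1 - x1) x2)]^2 -
       Log[((1 - x1) x2)/(1 - x1 - (1 - x1) x2)]^2)]/
   (2 Pi x1 x2 (1 - x1 - (1 - x1) x2));

(* Spot check at an interior point; Chop removes round-off noise *)
Chop[(mapped - shown) /. {x1 -> 0.3, x2 -> 0.4}]
(* 0 *)
```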

Whether the integrand is oscillatory is determined by whether the argument to Exp is real. That depends on whether the arguments to the logarithms

x1/(1 - x1 - (1 - x1) x2), ((1 - x1) x2)/(1 - x1 - (1 - x1) x2)

can be negative over the domain 0 <= x1 <= 1, 0 <= x2 <= 1. This is checked by substituting Interval[{0, 1.}] for each of x1 and x2. Interval arithmetic, however, only guarantees an interval that contains the exact range, and here the computed intervals contain negative numbers:

{x1/(1 - x1 - (1 - x1) x2), ((1 - x1) x2)/(1 - x1 - (1 - x1) x2)} /.
  Thread[{x1, x2} -> Interval[{0, 1.}]]

(* {Interval[{-∞, ∞}], Interval[{-∞, ∞}]} *)

However, the arguments are nonnegative:

Minimize[{#, 0 <= x1 <= 1 && 0 <= x2 <= 1}, {x1, x2}] & /@
  {x1/(1 - x1 - (1 - x1) x2), ((1 - x1) x2)/(1 - x1 - (1 - x1) x2)}

(* {{0, {x1 -> 0, x2 -> 1/2}}, {0, {x2 -> 0, x1 -> 0}}} *)
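Minimize reports minima of 0 on the closed square, on whose boundary the denominators can vanish. A complementary sketch asks Reduce directly whether either argument can be negative on the open square 0 < x1 < 1, 0 < x2 < 1. Both answers should be False, since there the denominator factors as (1 - x1)(1 - x2) > 0 and the numerators are positive.

```mathematica
(* The two log arguments after the unit-square map *)
args = {x1/(1 - x1 - (1 - x1) x2), ((1 - x1) x2)/(1 - x1 - (1 - x1) x2)};

(* Can either argument be negative strictly inside the unit square? *)
Reduce[# < 0 && 0 < x1 < 1 && 0 < x2 < 1, {x1, x2}, Reals] & /@ args
(* {False, False} *)
```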

Before we conclude it is a bug, consider what's going on in the denominator of the arguments:

{1 - x1 - (1 - x1) x2, 1 - x1 - (1 - x1) x2 // Factor} /.
  Thread[{x1, x2} -> Interval[{0, 1}]]

(* {Interval[{-1, 1}], Interval[{0, 1}]} *)

The first is evaluated as 1 + Interval[{-1, 0}] + Interval[{-1, 0}], and each of those intervals is exactly right for its own term. But the two intervals are treated as independent quantities, which they are not (both depend on x1), so their sum comes out as Interval[{-1, 1}]. The factored expression, in which each variable appears only once, yields a tighter result.
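This overestimate is the dependency problem of interval arithmetic: every occurrence of a variable is replaced by the whole interval independently. A minimal illustration, using a hypothetical symbol t so as not to disturb x1 and x2: t - t and t (1 - t) are overestimated, while rewriting t (1 - t) as 1/4 - (t - 1/2)^2, in which t appears only once, recovers the exact range [0, 1/4].

```mathematica
iv = Interval[{0, 1}];

(* Subtracting an interval from itself does not give 0 *)
iv - iv
(* Interval[{-1, 1}] *)

(* t (1 - t) ranges over [0, 1/4] on [0, 1], but t appears twice *)
t (1 - t) /. t -> iv
(* Interval[{0, 1}] *)

(* The same function with a single occurrence of t gives the exact range *)
1/4 - (t - 1/2)^2 /. t -> iv
(* Interval[{0, 1/4}] *)
```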

It gets more complicated with approximate reals, such as the 1. in Interval[{0, 1.}]. Interval adds or subtracts an epsilon from the endpoints when computing with them, so that 1 - Interval[{0, 1.}] equals Interval[{-4.44089*10^-16, 1}]. This has been discussed a little here and remarked on here.
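A small sketch contrasting exact and machine-real endpoints. The exact computation stays within [0, 1]; the machine-real one should reproduce the Interval[{-4.44089*10^-16, 1}] reported above, whose lower endpoint is slightly negative even though the true range starts at 0. The name machineResult is only for illustration.

```mathematica
(* Exact endpoints: no widening *)
1 - Interval[{0, 1}]
(* Interval[{0, 1}] *)

(* Machine-real endpoints: the computed bounds are widened slightly *)
machineResult = 1 - Interval[{0, 1.}]

(* Lower endpoint of the machine-real result *)
Min[machineResult]
```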

I'll leave off the analysis here, except to note that it suggested the following as a potential fix:

f2 = 1/Expand[1/f[x1, x2, 0, 0, 1, 1, 0]];

f2 == f[x1, x2, 0, 0, 1, 1, 0] // Simplify (* check algebraic equivalence *)

(* True *)

NIntegrate[f2, {x1, 0, 1}, {x2, 0, 1 - x1}]

(* 1. *)

ProbabilityDistribution[] and its Method -> "Normalize" setting. – J. M.'s missing motivation Jul 06 '16 at 13:40

Method -> "UnitCubeRescaling". Also, it is advisable to use 1/2 instead of 0.5 in your PDF. – J. M.'s missing motivation Jul 06 '16 at 13:58

_?NumericQ causes it to return 1. – Szabolcs Jul 06 '16 at 14:03

MultidimensionalRule provide a more flexible solution? (both work) – Feyre Jul 06 '16 at 14:15

_?NumericQ or Method -> "UnitCubeRescaling". – Miguel Jul 06 '16 at 14:23

Method -> {Automatic, "SymbolicProcessing" -> False} causes it to fail in other ways. – Szabolcs Jul 06 '16 at 14:34

"SymbolicProcessing" -> 0; Method -> {"DoubleExponential", "SymbolicProcessing" -> 0} works. – J. M.'s missing motivation Jul 06 '16 at 14:42

NIntegrate seems to be using "LevinRule" but I don't know why. And I don't know why it fails so badly. Pretty much all the suggestions that work are just one way or another to prevent "LevinRule" from being applied. – Michael E2 Jul 07 '16 at 04:05

N@ Integrate[f[x1, x2, 0, 0, 1, 1, 0], {x1, 0, 1}, {x2, 0, 1 - x1}] yields 0.5000000002523569`. I also get 0.5 if I Rationalize[] the integrand and use N[integral, prec]. Integrate[] factors out the -1/(2 Pi), and for whatever reason, that changes the value returned by NIntegrate[] when N is applied. Seems the integral is a pathological case for the Levin Rule (without the setting MinRecursion higher as Anton showed). – Michael E2 Jul 07 '16 at 15:02

MinRecursion -> 1. After all, it can work on nonoscillatory integrals, although, as you say, it's a poor choice and shouldn't be expected to work well. (To a certain extent I can see what it did wrong. Something tricks it into computing small integrals & errors on subregions.) – Michael E2 Jul 08 '16 at 18:33