I'm working on implementing a Q-Learning algorithm for a 2 player board game.

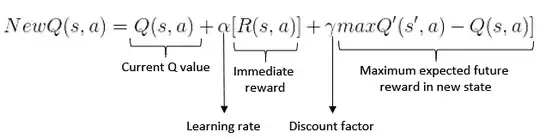

I encountered what I think may be a problem. When it comes time to update the Q value with the Bellman equation (above), the last part states that for the maximum expected reward, one must find the highest q value in the new state reached, s', after making action a.

However, it seems like the I never have q values for state s'. I suspect s' can only be reached from P2 making a move. It may be impossible for this state to be reached as a result of an action from P1. Therefore, the board state s' is never evaluated by P2, thus its Q values are never being computed.

I will try to paint a picture of what I mean. Assume P1 is a random player, and P2 is the learning agent.

- P1 makes a random move, resulting in state

s. - P2 evaluates board

s, finds the best action and takes it, resulting in states'. In the process of updating the Q value for the pair(s,a), it findsmaxQ'(s', a) = 0, since the state hasn't been encountered yet. - From

s', P1 again makes a random move.

As you can see, state s' is never encountered by P2, since it is a board state that appears only as a result of P2 making a move. Thus the last part of the equation will always result in 0 - current Q value.

Am I seeing this correctly? Does this affect the learning process? Any input would be appreciated.

Thanks.

s1and makes movea1resulting ins2. P2 evaluatess2and responds with an action that leads to states3(but doesn't update the q-table yet). From here P1 makes another move, resulting in states4. It is at this point that P2 updates the q table using the Bellman equation. In this equation, the immediate reward is the reward from states3(the one that resulted from P2 making a move) and the future reward is the reward that resulted from P1 responding tos3, i.e., the reward fors4. Is this correct? Many thanks! – Pete Apr 18 '19 at 09:56