To render the data in a file, you first need to translate it into a format that the render engine can access efficiently. One way of achieving this is to convert it into a suitably formatted image that can then be read via an Image Texture node. However, an image is only 'flat' (2-dimensional) and we need to be able to represent the 3-dimensional volume.

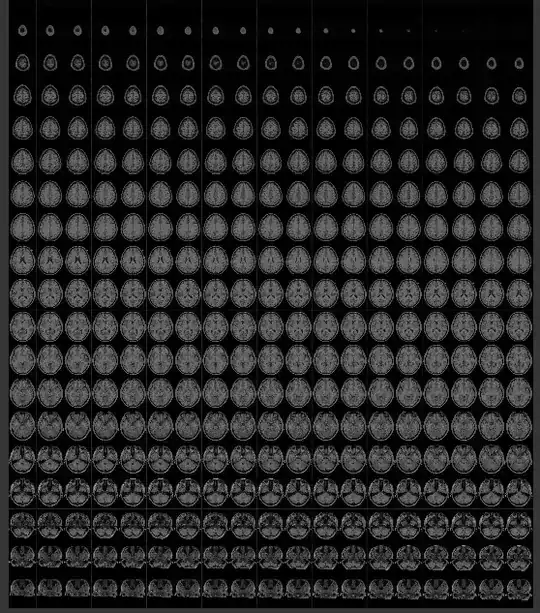

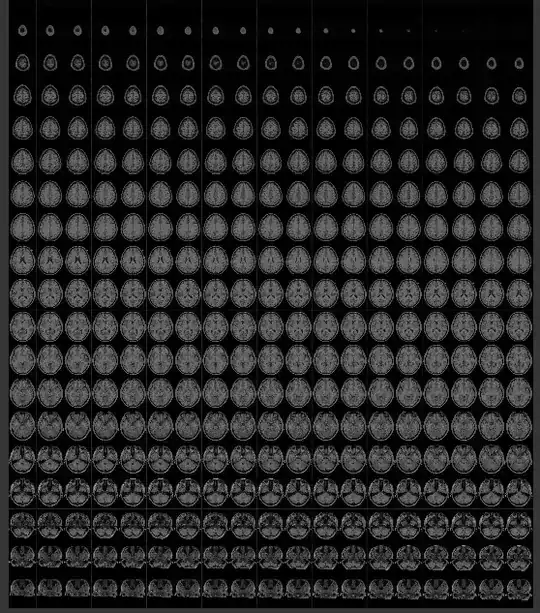

In order to store the 3-dimensional voxels in a 2-dimensional image we can split the volume into multiple slices and store each slice as a separate 'tile' in the image as follows :

Then, to render the image, we can use some maths to translate from the 3D XYZ coordinates into the 2D image coordinates by using the 'Z' coordinate to determine within which 'slice' that point resides and the X and Y to pick that pixel from that 'slice'.
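As a rough illustration of that mapping on the pixel side, here is a minimal sketch of how a voxel index lands in the tiled image. It assumes the layout used by the conversion script in this answer (tiles laid out left to right, then row by row, with one spare pixel of spacing per tile); `voxel_to_pixel` is a hypothetical helper name, not part of the script.

```python
def voxel_to_pixel(x, y, z, res_x, res_y, num_columns):
    """Map voxel (x, y, z) to a (column, row) position in the tiled image."""
    # The slice number picks the tile: column first, then row
    tile_x = z % num_columns
    tile_y = z // num_columns
    # Each tile occupies (res + 1) pixels, i.e. one pixel of spacing
    px = tile_x * (res_x + 1) + x
    py = tile_y * (res_y + 1) + y
    return px, py


# Slice 20 of a 19-column layout sits in tile column 1, tile row 1
print(voxel_to_pixel(5, 7, 20, 361, 433, 19))
```

The shader does the same thing in reverse, but with normalised 0..1 coordinates instead of integer pixel indices.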

Converting the raw bytes in your sample file can be achieved with the following Python script:

    # Convert a 'raw' byte data set into a tiled EXR image by 'slice'
    import bpy
    import struct  # only needed for the commented-out float-buffer variant below
    import math


    def convert_rawbytes_to_exr(fname, oPattern, oframeno, res_x, res_y, res_z, multiRow=False):
        # One byte per voxel; the same buffer is used for R, G and B (no alpha)
        size = res_x * res_y * res_z
        with open(fname, "rb") as f:
            density = f.read(size)
        build_exr_from_buffers(gen_filename("mri", oPattern, oframeno), (res_x, res_y, res_z),
                               density, density, density, None, multiRow=multiRow)


    # Generate filename by combining name, pattern, frameno
    def gen_filename(name, pattern, frameno):
        return pattern % (name, frameno)


    def build_exr_from_buffers(filename, dimensions, bufferR, bufferG, bufferB, bufferA, multiRow=False):
        if multiRow:
            numColumns = math.ceil(math.sqrt(dimensions[2]))
            numRows = math.ceil(dimensions[2] / numColumns)
        else:
            numColumns = dimensions[2]
            numRows = 1

        filename = str(dimensions[2])+"_"+str(numColumns)+"x"+str(numRows)+"_"+filename
        print("Building image %s" % filename)

        # Size the image to allow space for Z images of size X by Y
        width = (dimensions[0]+1)*numColumns
        if numRows > 1:
            height = (dimensions[1]+1)*numRows
        else:
            height = dimensions[1]

        # Create the image
        image = bpy.data.images.new(filename, width=width, height=height, float_buffer=False, alpha=False, is_data=True)

        # Create an empty array of pixel data (each will hold R, G, B, A values as floats)
        pixels = [None] * width * height
        for x in range(0, width):
            for y in range(0, height):
                pixels[y*width+x] = [0.0, 0.0, 0.0, 0.0]

        print("File '"+filename+"', Dimensions = ("+str(dimensions[0])+","+str(dimensions[1])+","+str(dimensions[2])+")")

        for z in range(0, dimensions[2]):
            print("Processing layer "+str(z))

            # Calculate the location of this 'tile'
            tileNoX = z % numColumns
            tileNoY = int((z - tileNoX) / numColumns)
            tileOffset = tileNoX*(dimensions[0]+1)+tileNoY*width*(dimensions[1]+1)
            #print("Tile = ("+str(tileNoX)+","+str(tileNoY)+") : "+str(tileOffset))

            for x in range(0, dimensions[0]):
                for y in range(0, dimensions[1]):
                    p = x+y*dimensions[0]+z*dimensions[0]*dimensions[1]

                    # If R, G, or B are None then 0.0 is assumed
                    valR = 0
                    valG = 0
                    valB = 0

                    if bufferR is not None:
                        #valR = struct.unpack('f',bufferR[p*4:p*4+4])[0]
                        valR = float(bufferR[p])/255
                    if bufferG is not None:
                        #valG = struct.unpack('f',bufferG[p*4:p*4+4])[0]
                        valG = float(bufferG[p])/255
                    if bufferB is not None:
                        #valB = struct.unpack('f',bufferB[p*4:p*4+4])[0]
                        valB = float(bufferB[p])/255

                    # bufferA can be None to indicate not used (in which case 1.0 is assumed)
                    if bufferA is not None:
                        valA = float(bufferA[p])/255
                    else:
                        valA = 1.0

                    #pixels[(y*width)+x+z*(dimensions[0]+1)] = [valR,valG,valB,valA]
                    pixels[tileOffset + x + y*width] = [valR, valG, valB, valA]

        print("Image build complete, storing pixels...")

        # 'flatten' the array - so [R1,G1,B1,A1], [R2,G2,B2,A2], [R3,G3,B3,A3],.... becomes R1,G1,B1,A1,R2,G2,B2,A2,R3,G3,B3,A3,....
        # and store it in the image
        image.pixels = [chan for px in pixels for chan in px]

        print("Updating image...")
        image.update()

        print("Saving image...")

        # Save image to file
        scn = bpy.data.scenes.new('img_settings')
        scn.render.image_settings.file_format = 'OPEN_EXR'
        scn.render.image_settings.exr_codec = 'ZIP'
        scn.render.image_settings.color_mode = 'RGBA'
        #scn.render.image_settings.color_depth = '32'
        img_path = bpy.path.abspath('//')
        img_file = image.name+'.exr'
        image.save_render(img_path+img_file, scene=scn)
        image.use_fake_user = True

        print("Complete.")


    convert_rawbytes_to_exr(bpy.path.abspath("//"+"AW_t1_final_norm_361-433-361.raw"), "%s_%06i", 0, 361, 433, 361, multiRow=True)

The above code was adapted from an add-on to convert a Smoke Domain into an image in a similar way - to capture the smoke voxels to allow them to be manipulated - see https://baldingwizard.wixsite.com/blog/tutorial-mesh-to-volume.

Note the last line of the script: it calls the functions above with the relevant parameters. In this case these are the location of the 'raw' file, the filename format and frame number (left over from the smoke2exr add-on, to allow for multiple frames), the dimensions of the 'raw' data, and a flag to indicate that the conversion should split the slices over multiple rows. The original add-on converted to a single row of slices, but I discovered this caused inaccuracies as the number of slices grows large; splitting over multiple rows drastically reduces the issue.
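To see what the multi-row flag does to the image size, here is a small sketch that repeats the layout arithmetic from the script on its own (no `bpy` needed). `tile_layout` is a hypothetical helper name; the formulas are the ones in `build_exr_from_buffers`.

```python
import math


def tile_layout(res_x, res_y, res_z, multi_row=True):
    """Return (columns, rows, image_width, image_height) for the tiled image."""
    if multi_row:
        # Aim for a roughly square grid of tiles
        cols = math.ceil(math.sqrt(res_z))
        rows = math.ceil(res_z / cols)
    else:
        # One long strip: every slice side by side
        cols, rows = res_z, 1
    width = (res_x + 1) * cols
    height = (res_y + 1) * rows if rows > 1 else res_y
    return cols, rows, width, height


print(tile_layout(361, 433, 361, multi_row=True))   # 19 x 19 grid
print(tile_layout(361, 433, 361, multi_row=False))  # single strip
```

For this data set the single-row layout would be well over 100,000 pixels wide, while the 19x19 grid keeps both dimensions under 10,000. My guess is that the very wide strip is what strains the precision of the texture coordinates, but treat that as an assumption rather than something verified here.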

Once your file is in place, run the script. It will take a while and you must have sufficient memory. My system has 8 GB of memory and the size of the image resulted in pagefile swapping (so any larger and it would really struggle). However, it converted successfully in 5 to 10 minutes or so (open the Blender System Console before you run the script so you can see progress).
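For a feel of why memory gets tight, here is a back-of-envelope sketch of the size of the final flat pixel buffer alone, assuming the 19x19 layout of this data set and 4 bytes per channel value. `flat_buffer_bytes` is a hypothetical helper; the script itself needs considerably more than this, because it first builds a Python list of per-pixel lists, where every float is a separate object.

```python
def flat_buffer_bytes(width, height, channels=4, bytes_per_value=4):
    """Lower bound: size of a tightly packed RGBA float buffer in bytes."""
    return width * height * channels * bytes_per_value


# Image size for 361 slices of 361 x 433 in a 19 x 19 grid (one spare pixel per tile)
width, height = 362 * 19, 434 * 19
size = flat_buffer_bytes(width, height)
print("%d bytes (about %.2f GiB)" % (size, size / 2**30))
```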

Once complete you should have an image containing multiple tiles :

To convert from 3D coordinates into 2D image coordinates requires some maths as follows :

    # Expression to convert Generated coordinates into 'sliced' coordinates for image generated from Smoke2EXR
    # Use the Node Expressions add-on to generate the node group from this text
    _x = Input[x]
    _y = Input[y]
    _z = Input[z]
    _slice = min(1,max(_z,0)) * ZSlices{128}
    _sliceNo1 = floor(_slice)
    _sliceNo2 = _sliceNo1 + 1
    #...calculate tileX and tileY. Note '0.001' added in to avoid rounding crossover issues
    _tilePosX1 = mod(_sliceNo1, TileColumns)
    _tilePosY1 = floor(_sliceNo1 / TileColumns+0.001)
    _newx1 = (clip(_x) + _tilePosX1)/ TileColumns
    _newy1 = (clip(_y) + _tilePosY1)/ NumRows
    _tilePosX2 = mod(_sliceNo2, TileColumns)
    _tilePosY2 = floor(_sliceNo2 / TileColumns+0.001)
    _newx2 = (clip(_x) + _tilePosX2)/ TileColumns
    _newy2 = (clip(_y) + _tilePosY2)/ NumRows
    Output1[] = combine(_newx1, _newy1, 0)
    Output2[] = combine(_newx2, _newy2,0)
    # Choose interpolation mode... linear actually seems to produce less banding.
    InterpolationMix = _slice - _sliceNo1

I used the Node Expressions add-on to convert the above text directly into a Node Group to perform the above functions. However, you can instead manually build the nodes to perform the same function.
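If you want to check the maths outside Blender, or build the nodes by hand, the same expression can be written as plain Python. This is only a sketch: it assumes that `clip` in the expression clamps to the 0..1 range, and `sliced_coords` is a hypothetical name of my own.

```python
import math


def clip(v):
    # Assumption: the expression's clip() clamps to the 0..1 range
    return min(1.0, max(v, 0.0))


def sliced_coords(x, y, z, z_slices, tile_columns, num_rows):
    """Return (uv1, uv2, mix) for Generated coordinates x, y, z."""
    slice_pos = clip(z) * z_slices
    slice_no1 = math.floor(slice_pos)
    slice_no2 = slice_no1 + 1

    def tile_uv(slice_no):
        tile_x = slice_no % tile_columns
        # 0.001 added to avoid rounding crossover, as in the expression
        tile_y = math.floor(slice_no / tile_columns + 0.001)
        return ((clip(x) + tile_x) / tile_columns,
                (clip(y) + tile_y) / num_rows)

    # The fractional part of the slice position drives the interpolation
    return tile_uv(slice_no1), tile_uv(slice_no2), slice_pos - slice_no1


uv1, uv2, mix = sliced_coords(0.5, 0.5, 0.5, 361, 19, 19)
print(uv1, uv2, mix)
```

The two UV pairs feed the two Image Texture nodes and `mix` is the blend factor between their results.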

Set up the node tree as follows :

The Mapping node allows you to move and rotate the volume; moving it partially outside the 'domain' of the volume allows you to easily 'slice through' to see the inside detail. The node group contains the function defined in the text above, converting from XYZ coordinates into XY image coordinates. Set its input parameters to match your image (in this case 361 slices arranged as a 19x19 grid; these values are encoded in the generated filename). The group drives the two Image Texture nodes (using two nodes allows two points to be sampled simultaneously so that the result can be interpolated between them, for better detail). The maths nodes that follow allow you to control the density and contrast so you can tune the result to pick out the detail, and the MixRGB node set to Multiply can be used to 'tint' the volumetric (using off-white tends to produce more visible detail in the volumetric).
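In arithmetic terms, the two Image Texture samples are blended linearly by the interpolation factor and the result is then multiplied per channel by the tint colour. A minimal sketch, assuming a Multiply factor of 1 and leaving out the density and contrast maths nodes (their settings depend on your data); `blend_and_tint` is a hypothetical name.

```python
def blend_and_tint(sample1, sample2, mix, tint):
    """Linearly interpolate two slice samples, then multiply by an RGB tint."""
    density = sample1 + (sample2 - sample1) * mix
    # Multiply blend at full strength: each tint channel scales the density
    return tuple(density * channel for channel in tint)


# Halfway between two slices, with a slightly warm off-white tint
print(blend_and_tint(0.2, 0.6, 0.5, (1.0, 0.9, 0.8)))
```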

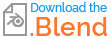

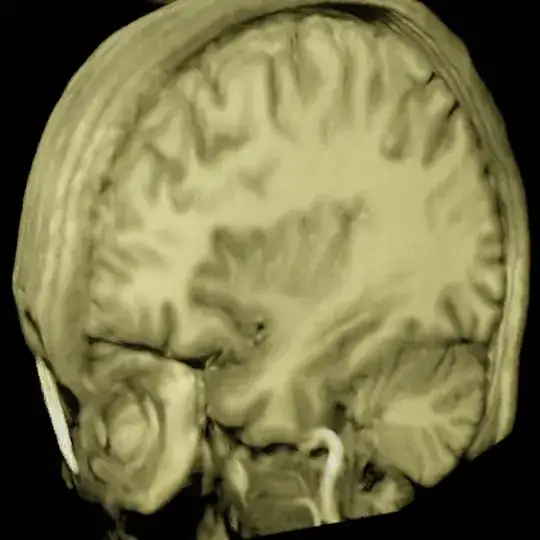

I used Eevee to render the volume (don't forget to set the volumetric Start, End, Tile Size and possibly Volumetric Shadows, depending on the effect you're looking for). This allows the volume to be manipulated in real time and produces pleasing results :

Blend file included. It doesn't include the image or the raw data linked in the comments on the question (they aren't mine to give), but it does contain the above code and the generated node group.


For completeness, here's a render using Cycles (this takes considerably longer than Eevee but does produce more physically accurate results) :

`A.ravel().astype('uint8').tofile('./bytes.raw')` – ajwood, Aug 24 '19 at 22:39