I want to convert an object's pose (location and rotation) from world coordinates to camera coordinates. Is there a function in Blender that converts not only the location but also the rotation of an object to camera coordinates?

3 Answers

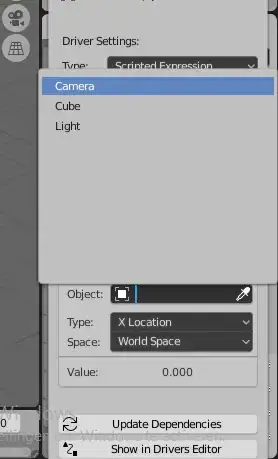

Convert Space

Every object in Blender has the bpy.types.Object.convert_space method.

For example, to convert the world-space matrix of the cube into the local space of the camera (in Blender's Python console, C is shorthand for bpy.context):

    >>> cube = C.object
    >>> cube
    bpy.data.objects['Cube']
    >>> cam = C.scene.camera
    >>> cam
    bpy.data.objects['Camera']
    >>> cam.convert_space(matrix=cube.matrix_world, to_space='LOCAL')
    Matrix(((0.6859205961227417, 0.7276763319969177, 1.4901161193847656e-08, 0.0),
            (-0.32401347160339355, 0.305420845746994, 0.8953956365585327, 0.0),
            (0.6515582203865051, -0.6141703724861145, 0.44527140259742737, 0.0),
            (0.0, 0.0, 0.0, 1.0)))


There are two ways to do this:

- Parenting

Make your object a child of the camera, and it will follow the camera's location and rotation.

Select the object first, then the camera (so that the camera is the active object), and press Ctrl+P.


- As I need to do this in a pipeline, any idea how the same can be accomplished using the Blender Python package? – Guru Hegde Oct 04 '20 at 15:03

- Hi @GuruHegde, it's not completely clear to me what you want. Do you want to automate the process of connecting the camera and an object with Python, or something else? Which Python package do you mean, just the Blender API? – Alex bries Oct 04 '20 at 15:52

- Currently, I am using Blender (bpy, the Python API) to create a dataset (around 10K images) for my deep learning 6D pose estimation task. I am saving the rendered image and also the object pose (obj.location and obj.rotation_euler). But the pose values I am saving do not seem to be in camera coordinates. My guess is that they are in world coordinates. I tried converting them by multiplying with the camera.matrix_world matrix, which did not work for me. This is why I want to find a simple way in the Blender Python API to convert an object's pose from world to camera coordinates. – Guru Hegde Oct 04 '20 at 16:47

- If I understand you right, you want the location of one or more objects relative to the camera. In that case, make the camera the parent of the object(s) and apply location and rotation to each object; all movement will then be relative to the camera. Parenting in Python: https://blender.stackexchange.com/questions/9200/how-to-make-object-a-a-parent-of-object-b-via-blenders-python-api. Applying location and rotation in Python: bpy.ops.object.transform_apply(location=True, rotation=True, scale=False) – Alex bries Oct 04 '20 at 17:06

All you need to do is find the matrix of the object relative to the camera (for details see THIS_LINK, where I have explained how this kind of thing works).

You can do it like this:

    import bpy

    cam_name = "Camera"  # or whatever it is
    obj_name = "Cube"  # or whatever it is

    cam = bpy.data.objects[cam_name]
    obj = bpy.data.objects[obj_name]

    # object transform expressed in the camera's frame
    mat_rel = cam.matrix_world.inverted() @ obj.matrix_world

    # location
    relative_location = mat_rel.translation

    # rotation
    relative_rotation_euler = mat_rel.to_euler()  # angles are in radians; convert to degrees if needed
    relative_rotation_quat = mat_rel.to_quaternion()

and you'll get the following result:

    >>> print(relative_location)
    <Vector (-0.0079, 0.0600, -11.2562)>
    >>> print(relative_rotation_euler)
    <Euler (x=-0.9435, y=-0.7096, z=-0.4413), order='XYZ'>
    >>> print(relative_rotation_quat)
    <Quaternion (w=0.7805, x=-0.4835, y=-0.2087, z=-0.3369)>
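To check the arithmetic outside Blender, the same product can be reproduced in plain Python. This is a minimal sketch, not part of the answer above: the helper names rigid_inverse and matmul are hypothetical, the two matrices are the camera.matrix_world and obj.matrix_world values quoted in the comments below, and the closed-form inverse assumes the camera matrix is a pure rotation plus translation (no scale or shear).

```python
# World matrices as posted in the comments (rows of a 4x4 matrix).
cam_world = [
    [0.6859206557273865, -0.32401347160339355, 0.6515582203865051, 7.358891487121582],
    [0.7276763319969177, 0.305420845746994, -0.6141703724861145, -6.925790786743164],
    [0.0, 0.8953956365585327, 0.44527140259742737, 4.958309173583984],
    [0.0, 0.0, 0.0, 1.0],
]
obj_world = [
    [0.5403022766113281, -0.8414709568023682, 0.0, 0.0],
    [0.8414709568023682, 0.5403022766113281, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.6978417634963989],
    [0.0, 0.0, 0.0, 1.0],
]


def rigid_inverse(m):
    """Invert a 4x4 rigid transform [R | t] as [R^T | -R^T t] (assumes no scale)."""
    rot_t = [[m[col][row] for col in range(3)] for row in range(3)]
    t = [m[row][3] for row in range(3)]
    inv_t = [-sum(rot_t[row][k] * t[k] for k in range(3)) for row in range(3)]
    return [rot_t[row] + [inv_t[row]] for row in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def matmul(a, b):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


# Same operation as cam.matrix_world.inverted() @ obj.matrix_world.
mat_rel = matmul(rigid_inverse(cam_world), obj_world)
relative_location = [mat_rel[row][3] for row in range(3)]
print(relative_location)
```

With these inputs the translation comes out close to (-0.0079, 0.6849, -10.9454), which agrees with the last column reported in the comments for this approach. Multiplying by camera.matrix_world itself, rather than its inverse, maps in the opposite direction (camera to world), which is why that attempt does not give camera coordinates.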


- Your approach and the one above from @batFINGER don't give exactly the same results. In my case, I'm getting Quaternion((0.8464428186416626, -0.5244007110595703, 0.048664890229701996, 0.07855068147182465)) using @batFINGER's, and Quaternion((0.8464428782463074, -0.5244006514549255, 0.048664890229701996, 0.07855068147182465)) using yours. Could the matrix inversion be the cause of minor numerical differences? – Bruno Oct 11 '21 at 12:25

- Instead of explicitly calling inverted() and performing the matrix multiplication, it may be advisable to use a linear system solver, e.g., Matrix(np.linalg.solve(camera.matrix_world, blade.matrix_world)).to_quaternion(), which in my case gives Quaternion((0.8464428186416626, -0.5244007110595703, 0.0486648827791214, 0.07855071127414703)). Still not a perfect match, but it seems closer. – Bruno Oct 11 '21 at 12:29

- @Bruno the order of magnitude of this difference is 10**-7; it will never be seen. The cause might be rooted either in the quaternion conversion or in the matrix manipulations (inversion or multiplication rounding), but I think the quaternion conversion is more probable. Anyway, it cannot be seen or measured and doesn't matter. – MohammadHossein Jamshidi Oct 11 '21 at 14:47

- I agree the difference in the quaternion representation is negligible. The first time I noticed the difference was when comparing the matrices. @batFINGER's approach gives Matrix(((0.9829229712486267, -0.18401706218719482, 1.4901161193847656e-08, 1.0398652605658754e-08), (0.08193755149841309, 0.43766748905181885, 0.8953956365585327, 0.624844491481781), (-0.16476815938949585, -0.8801049590110779, 0.44527140259742737, 0.3107289671897888), (0.0, 0.0, 0.0, 1.0))) – Bruno Oct 11 '21 at 16:04

- and yours gives Matrix(((0.9829230308532715, -0.1840170919895172, 1.4901162970204496e-08, -0.007881620898842812), (0.08193756639957428, 0.4376675486564636, 0.8953956961631775, 0.6848568320274353), (-0.16476818919181824, -0.8801050186157227, 0.44527143239974976, -10.945426940917969), (0.0, 0.0, 0.0, 1.0))). The last column has "bigger" differences. – Bruno Oct 11 '21 at 16:05

- Please provide a file on https://blend-exchange.com/ for me to see. – MohammadHossein Jamshidi Oct 11 '21 at 16:44

- Unfortunately, I can't. But here are the matrices: >>> camera.matrix_world Matrix(((0.6859206557273865, -0.32401347160339355, 0.6515582203865051, 7.358891487121582), (0.7276763319969177, 0.305420845746994, -0.6141703724861145, -6.925790786743164), (0.0, 0.8953956365585327, 0.44527140259742737, 4.958309173583984), (0.0, 0.0, 0.0, 1.0))) – Bruno Oct 11 '21 at 16:57

- >>> obj.matrix_world Matrix(((0.5403022766113281, -0.8414709568023682, 0.0, 0.0), (0.8414709568023682, 0.5403022766113281, 0.0, 0.0), (0.0, 0.0, 1.0, 0.6978417634963989), (0.0, 0.0, 0.0, 1.0))) – Bruno Oct 11 '21 at 16:58

- I'm sorry if that's not possible; I can't follow it this way. – MohammadHossein Jamshidi Oct 11 '21 at 18:29
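The size of the quaternion mismatch discussed in these comments can be put in perspective with a few lines of plain Python. This sketch only compares the two quaternions quoted above; the variable names are hypothetical, and reading the gap as single-precision rounding is an assumption based on its magnitude (2**-24 is the spacing of 32-bit floats between 0.5 and 1), not something the thread confirms.

```python
# Quaternion components (w, x, y, z) quoted in the comments.
q_convert_space = (0.8464428186416626, -0.5244007110595703,
                   0.048664890229701996, 0.07855068147182465)
q_inverted = (0.8464428782463074, -0.5244006514549255,
              0.048664890229701996, 0.07855068147182465)

# Largest component-wise difference between the two results.
max_diff = max(abs(a - b) for a, b in zip(q_convert_space, q_inverted))

# Spacing between adjacent 32-bit floats in the range [0.5, 1).
float32_step = 2.0 ** -24

print(f"max difference: {max_diff:.3e}")
print(f"float32 step:   {float32_step:.3e}")
print(f"ratio:          {max_diff / float32_step:.2f}")
```

A ratio near 1 means the two quaternions differ by about one rounding step in the last stored digit, which is consistent with the view that this difference is negligible. It says nothing about the much larger gap in the translation column of the two matrices.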