I want to render an object (a plane) and find out exactly where it appears in the rendered image. I found this answer, which was already quite helpful. Here is the code I use, based on that answer:

    import bpy
    import numpy as np
    import bpy_extras
    import cv2
    from bpy_extras.object_utils import world_to_camera_view

    def draw_object_to_rendered_image():
        scene = bpy.context.scene

        # needed to rescale 2d coordinates
        render = scene.render
        res_x = render.resolution_x
        res_y = render.resolution_y

        obj = bpy.data.objects['Plane.001']
        cam = bpy.data.objects['Camera']

        # use generator expressions () or list comprehensions []
        verts = (vert.co for vert in obj.data.vertices)
        coords_2d = [world_to_camera_view(scene, cam, coord) for coord in verts]

        # 2d data printout:
        rnd = lambda i: round(i)
        print('x,y')
        corners = []
        for x, y, distance_to_lens in coords_2d:
            # add coordinates to array
            corners.append(rnd(res_x*x))
            corners.append(rnd(res_y*y))
            print("{},{}".format(rnd(res_x*x), rnd(res_y*y)))

        img = cv2.imread('C:/Users/banck/blender/0001.png')
        points = np.zeros((4, 2), np.int32)

        # offset
        fac = 320

        # change order of coordinates
        points[0] = [corners[0*2], corners[0*2+1]+fac]
        points[3] = [corners[1*2], corners[1*2+1]+fac]
        points[1] = [corners[2*2], corners[2*2+1]+fac]
        points[2] = [corners[3*2], corners[3*2+1]+fac]
        points = points.reshape((-1, 1, 2))

        img = cv2.fillPoly(img, [points], (255,255,0))
        print(corners)
        cv2.imshow("test", img)
        cv2.waitKey(0)

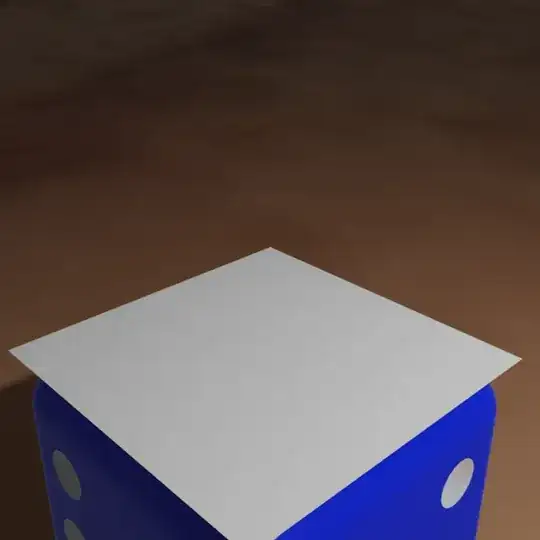

OK, this is my rendered scene:

You can see the gray plane whose coordinates I am trying to get. The plane marks the top surface of the cube. Later I just want to know the position of the cube's top surface without rendering the plane itself. But back to the problem.

You can already see that I use fac = 320 as an offset for the y-coordinate. This is half the rendered image size of 640 px × 640 px. I use it because I get negative y values when the plane is in the lower half of the camera view.

If I use OpenCV to draw the calculated coordinates on the rendered image, it looks like this:

The blue rectangle is the object drawn with OpenCV. You can see that the x-coordinates are close to the real gray plane, but not perfect, while the y-coordinates are completely off and not even the shape is correct.

Can somebody please explain why it is off and how to make it work correctly? Since the math behind the rendering is known, I would assume there is a way to make the calculated coordinates match the render. Grateful for any help.
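
A minimal sketch of the coordinate conversion, assuming (as the `bpy_extras` documentation describes) that `world_to_camera_view` returns normalized coordinates with (0, 0) at the bottom-left and (1, 1) at the top-right of the camera frame, while OpenCV images have their origin at the top-left. Under that assumption the y value needs to be flipped rather than offset. The helper name `camera_view_to_pixel` and the `percentage` parameter (standing in for `render.resolution_percentage`) are illustrative, not part of any API:

```python
def camera_view_to_pixel(x, y, res_x, res_y, percentage=100):
    """Map normalized camera-view coordinates to image pixel coordinates.

    x, y: normalized coordinates, origin at the bottom-left of the frame.
    res_x, res_y: render resolution in pixels.
    percentage: render resolution scale (assumed to be 100 by default).
    Returns (px, py) with the origin at the top-left, as image libraries expect.
    """
    scale = percentage / 100.0
    width = res_x * scale
    height = res_y * scale
    px = round(x * width)
    py = round((1.0 - y) * height)  # flip the vertical axis
    return px, py


# Hypothetical values for a 640 x 640 render
center = camera_view_to_pixel(0.5, 0.5, 640, 640)
bottom_left = camera_view_to_pixel(0.0, 0.0, 640, 640)
```

With this mapping, a point at the bottom-left of the camera frame ends up on the last row of the image, and a point with a negative or greater-than-one value simply lies outside the frame.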

------- UPDATE --------

I managed to get the calculations right, but only under some conditions. The plane must be at location 0,0,0 with no rotation and scale 1,1,1. The camera can be positioned anywhere, but as soon as I move the plane, the overlay no longer lines up. I am quite sure I need to apply some transformation to the plane in this case, but I don't have much experience with the API and the different views. This is the code I use to calculate and draw the plane:

    def calc_top_face_in_image():
        scene = bpy.context.scene

        # needed to rescale 2d coordinates
        render = scene.render
        res_x = render.resolution_x
        res_y = render.resolution_y

        obj = bpy.data.objects['top_plane']
        cam = bpy.data.objects['Camera']

        # use generator expressions () or list comprehensions []
        verts = (vert.co for vert in obj.data.vertices)
        coords_2d = [world_to_camera_view(scene, cam, coord) for coord in verts]

        # 2d data printout:
        rnd = lambda i: round(i)

        # 640 is the height of the rendered image
        y_offset = 640

        # these are (or will be) the coordinates x,y for the corners of the "top_plane"
        corners = np.zeros((4, 2), np.int32)
        i = 0
        print('x,y')
        for x, y, distance_to_lens in coords_2d:
            corners[i] = [rnd(res_x*x), y_offset-rnd(res_y*y)]
            i += 1
            print("{},{}".format(rnd(res_x*x), rnd(res_y*y)))

        # swap to correct order
        corners[[1,2,3]] = corners[[2,3,1]]

        img = cv2.imread('wherever/you/render/your/image/0001.png')

        # draw the face as a filled mask, then lighten the masked pixels
        black = np.zeros_like(img)
        black = cv2.fillPoly(black, [corners], (255,255,255))
        img[black == 255] = (img[black == 255]/2 + 127)

        # outline the face
        cv2.polylines(img,[corners],True,(255,255,0))

        cv2.imshow("test", img)
        cv2.waitKey(0)
        cv2.imwrite("wherever/you/render/your/image/0001_overlay.png", img)

Here is the image:

Like I said, this works very well even if you move the camera, but as soon as you move the object you want to track, it does not work anymore. That is a real problem if you want to track multiple objects. I would be happy if somebody could give me a hand with the correct calculation for the case where the object is not perfectly centered, rotated, or scaled.
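
The behaviour described above (correct only while the plane sits at the origin with no rotation and unit scale) is consistent with `vert.co` being expressed in the object's local space, whereas `world_to_camera_view` expects world-space coordinates. A likely fix, assuming Blender 2.8 or later, is to pass `obj.matrix_world @ vert.co` instead of `vert.co` (older versions use `*` in place of `@`). The pure-Python sketch below only illustrates what that multiplication does; `local_to_world` and the example matrix are hypothetical and stand in for Blender's `mathutils` types:

```python
def local_to_world(matrix_world, local_co):
    """Apply a 4x4 object-to-world matrix (list of rows) to a local 3D point."""
    x, y, z = local_co
    v = (x, y, z, 1.0)  # homogeneous coordinate so translation is applied
    # Only the first three rows are needed for an affine transform.
    return tuple(sum(row[i] * v[i] for i in range(4)) for row in matrix_world[:3])


# Hypothetical example: a plane moved to (2, 1, 0.5), no rotation, unit scale.
# The translation sits in the last column of the matrix.
matrix_world = [
    [1.0, 0.0, 0.0, 2.0],
    [0.0, 1.0, 0.0, 1.0],
    [0.0, 0.0, 1.0, 0.5],
    [0.0, 0.0, 0.0, 1.0],
]

local_corner = (1.0, 1.0, 0.0)  # corner of a default 2 x 2 plane in local space
world_corner = local_to_world(matrix_world, local_corner)
```

When the matrix is the identity (object at the origin, unrotated, unscaled), local and world coordinates coincide, which would explain why the overlay lines up only in that case.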