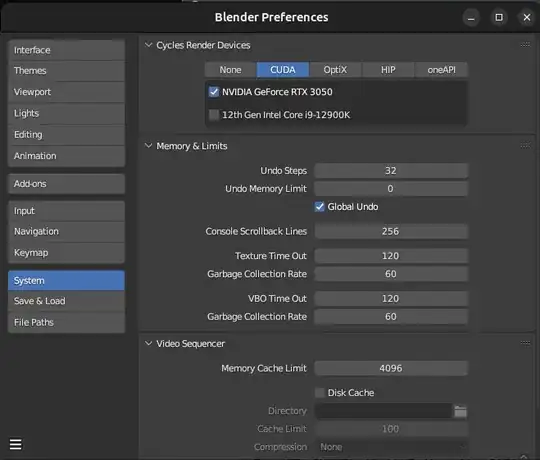

You are most likely already using the GPU for rendering. Install the latest drivers, open the System tab in Preferences and choose OptiX (preferably) or CUDA for Nvidia RTX GPUs, then select GPU Compute as the Device in the Render Properties tab of the Properties Editor. That is all you need to do.
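If you prefer to apply the same settings from Blender's Python console, a minimal sketch could look like the following. It assumes a recent Blender with the Cycles add-on enabled; `enable_gpu_rendering` is a hypothetical helper name, and the name of the device-refresh method varies between Blender versions, so the sketch tries both.

```python
def enable_gpu_rendering(cycles_prefs, scene, backend="OPTIX"):
    """Pick `backend` in the Cycles preferences and set the scene to GPU Compute.

    Hypothetical helper: `cycles_prefs` is the Cycles add-on preferences object,
    `scene` is a Blender scene. Returns the names of the enabled devices.
    """
    cycles_prefs.compute_device_type = backend

    # Refresh the device list. The method name is an assumption that depends on
    # the Blender version, so try both and skip the step if neither exists.
    refresh = (getattr(cycles_prefs, "refresh_devices", None)
               or getattr(cycles_prefs, "get_devices", None))
    if refresh is not None:
        refresh()

    enabled = []
    for device in cycles_prefs.devices:
        # Enable only the devices that match the chosen backend.
        device.use = (device.type == backend)
        if device.use:
            enabled.append(device.name)

    scene.cycles.device = "GPU"  # same as Device: GPU Compute in Render Properties
    return enabled


try:
    import bpy  # only importable inside Blender
except ImportError:
    bpy = None

if bpy is not None:
    prefs = bpy.context.preferences.addons["cycles"].preferences
    print(enable_gpu_rendering(prefs, bpy.context.scene))
```

Pass `backend="CUDA"` if OptiX is not available for your card. If the returned list is empty, no device of that type was found, which usually points back to the driver installation.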

You should not concern yourself with the statistics that Windows Task Manager or Resource Monitor report: they are incorrect. Other hardware monitoring software may also report incorrect GPU usage. That does not matter. What should matter is the time your renders take.
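Since render time is the number that matters, one way to compare settings (for example CPU against GPU Compute) is to time the render itself. The sketch below is only an illustration; `time_call` is a hypothetical helper that times any callable.

```python
import time


def time_call(func, *args, **kwargs):
    """Run `func` and return (elapsed_seconds, result)."""
    start = time.perf_counter()
    result = func(*args, **kwargs)
    return time.perf_counter() - start, result
```

Inside Blender you could, assuming the render operator is called from a script so that it blocks until the frame is finished, use it as `seconds, _ = time_call(bpy.ops.render.render, write_still=True)`. Render the same frame once with each Device setting and compare the two times.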

Sometimes I get an error when trying to render that GPU is out of memory, even though I can see, that the GPU usage rate doesn't go above 20%.

Again, you cannot see actual usage; it is shown incorrectly. Even if it were correct (which it isn't), this would make sense: if the scene does not fit into memory, the data cannot be loaded into VRAM, so rendering computations never start and there is nothing for the GPU to do except try to load the scene. Also, usage has nothing to do with memory, so a GPU can run out of memory even while usage is low. But this does not matter because, once again, you cannot see GPU usage.
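Memory, unlike usage, is a figure you can check directly. As a rough sketch, assuming an Nvidia card with the `nvidia-smi` tool on your PATH, the following reads used and total VRAM in MiB; `parse_memory_csv` and `query_vram` are hypothetical helper names.

```python
import subprocess


def parse_memory_csv(text):
    """Parse lines such as '3521, 8192' into (used_mib, total_mib) tuples, one per GPU."""
    rows = []
    for line in text.strip().splitlines():
        used, total = (int(field) for field in line.split(","))
        rows.append((used, total))
    return rows


def query_vram():
    """Ask nvidia-smi for used and total memory of every GPU (values in MiB)."""
    output = subprocess.run(
        ["nvidia-smi",
         "--query-gpu=memory.used,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_memory_csv(output)
```

If the used figure is already close to the total before you start rendering, other programs are taking VRAM that the scene needs.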

Here is my GPU definitely rendering a complex(in terms of lighting) archviz scene:

And here it is with Blender closed:

...CPU usage rate jumps to 80%...

That's right. CPU is used no matter what during rendering. It needs to be used for preparing and loading the scene and for managing the render process. That is to be expected.

...my CPU is overheating up to 90 degrees when in Render viewport and when rendering.

It's not a hardware issue.

This temperature is a hardware issue whether you like it or not, and it might eventually lead to hardware problems. In my experience, though, hardware is not that easy to damage. It is more likely to cause unexplained crashes in the middle of the night on a weekend, on an office computer left to render visualisations for a client meeting at 10 am on Monday... because of course it will. Computers are sometimes not built with rendering in mind, and it is not uncommon for a CPU cooler to be inadequate for rendering, especially if the PC is not meant to be a "workstation". You might want to address that. If you are lucky, simply adjusting the cooling settings in the UEFI might be enough to fix it.