I have two cameras in Blender, A and B. How can I find out which meshes A can see but B cannot?

-

This is vague. Try to be specific. – Lukasz-40sth Jan 09 '24 at 09:54

-

@Lukasz-40sth: What about the question is vague? I don't understand. To me the question, although very short, is crystal clear. – Chris Jan 09 '24 at 10:10

-

this should help you: https://blender.stackexchange.com/questions/45146/how-to-find-all-objects-in-the-cameras-view-with-python – Chris Jan 09 '24 at 10:11

-

@Chris "can be seen" indeed is vague: in Cycles you can be visible in a reflection, for example. Also, in Eevee you can be seen as a single pixel barely visible through a 99.9% alpha object. What if an object is occluded: can it be seen (if there was no object) as opposed to actually being visible? If the OP explained his project it would be easier to find the right solution. – Markus von Broady Jan 09 '24 at 10:37

-

@MarkusvonBroady: For me, "can be seen" means that at least one pixel can be seen, and a reflection is, for me, NOT the object. Materials won't matter. Of course, that is just my opinion. Maybe I am thinking too simply ;) – Chris Jan 09 '24 at 10:45

-

Many thanks for your replies! My project is not that complex. I assume all faces are opaque and I don't consider reflections. But I need to account for occlusion, which means that if a face is obscured by another face, I don't consider that face to be visible. I noticed that a few people mentioned calculating whether the face is within the camera's view frustum, which doesn't meet my needs as it doesn't seem to take occlusion into account. – Jerry Li Jan 09 '24 at 12:01

-

I also noticed someone else mentioned scene.ray_cast, I haven't tested it yet, but I think this will work similar to what I need, but it can only handle one ray at a time. I could indeed write a function to split a camera into a bunch of rays and calculate them separately, but that doesn't seem that convenient. – Jerry Li Jan 09 '24 at 12:01

-

I would render cryptomatte with 1 sample from a given camera and then check which colors are present in the render. – Markus von Broady Jan 09 '24 at 12:05

-

@MarkusvonBroady This is interesting, but doesn't the lighting in the scene change the color of the faces? And how can I color each face individually? – Jerry Li Jan 09 '24 at 12:28

-

Update: scene.ray_cast can only calculate the object, not the face, which is not what I need. – Jerry Li Jan 09 '24 at 12:36

-

Lighting in the scene changes the color, but only if the shader reacts to lighting. You can use a different mat (an emissive mat) in your renders to check occlusion, or you could just render an AOV output. If occluding objects output 0 and the test object outputs 1, your object is not occluded if your single-pixel render has a (floating point) value > 0. – Nathan Jan 10 '24 at 00:48

-

I think bmesh raycast returns the index of the polygon https://docs.blender.org/api/current/mathutils.bvhtree.html#mathutils.bvhtree.BVHTree.ray_cast – Gorgious Jan 10 '24 at 07:54

-

@Gorgious Yes, that works. – Jerry Li Jan 14 '24 at 12:18

1 Answer

Thanks to everyone's help, I finally solved the problem. I am posting my approach here; it may not be the best way, but it works. To try it, copy the following code into Blender and run it. The code does the following:

- Remove all existing objects from the scene.

- Add a camera and configure the render settings.

- Add a "monkey".

- Calculate the rays that the camera should emit.

- Find the faces that are hit by the rays.

- Delete the faces that are not hit by any ray.

- Add some cylinders to represent rays (for debugging).

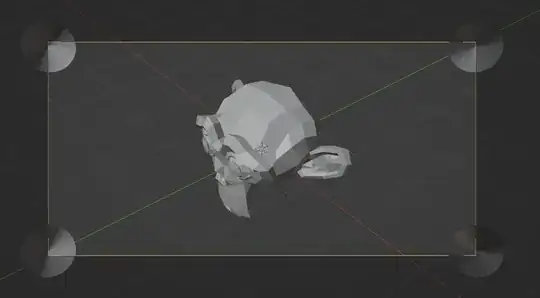

If everything works perfectly, you will find all the meshes that can not seen by the camera are removed.Like the following picture.
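One detail of the ray setup is worth spelling out. Assuming the camera angle describes the wider side of the image (as the code comment in `get_rays` says), the angle of the shorter side follows from the tangent relation rather than from scaling the angle linearly. A minimal sketch of that relation; `fov_of_shorter_side` is a hypothetical helper name, not a Blender API:

```python
import math


def fov_of_shorter_side(fov_long, long_side, short_side):
    """Field of view (radians) across the shorter image side.

    fov_long is the field of view across the longer side; long_side and
    short_side are the image extents (pixels times pixel aspect).
    """
    return 2.0 * math.atan(math.tan(fov_long / 2.0) * short_side / long_side)


# Example values matching the script: a 45 degree camera and an 800 x 400 render.
fov_x = math.radians(45.0)
fov_y = fov_of_shorter_side(fov_x, 800, 400)
fov_y_degrees = math.degrees(fov_y)
print(fov_y_degrees)
```

For these values the result is a little over 23°, whereas halving the angle would give 22.5°, so the difference is small but shows up at the top and bottom edges of the image.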

    import numpy as np
    import bpy
    import bmesh
    import mathutils


    def delete_objects(del_obj):
        # Delete the given objects by overriding the operator's selection.
        with bpy.context.temp_override(window=bpy.context.window, selected_objects=list(del_obj)):
            bpy.ops.object.delete()


    def init():
        if bpy.context.mode != 'OBJECT':
            bpy.ops.object.mode_set(mode='OBJECT')
        delete_objects([ob for ob in bpy.context.scene.objects])

        # set the camera: it is parented to an empty at the origin and tracks it
        bpy.ops.object.add(location=(0, 0, 0), type='EMPTY')
        camera_parent = bpy.context.active_object
        bpy.ops.object.camera_add()
        camera = bpy.context.active_object
        camera.parent = camera_parent
        camera.data.shift_x = 0
        camera.data.shift_y = 0
        camera.data.clip_start = 0.1
        camera.data.clip_end = 1e6
        camera.data.angle = 0.785398
        camera.data.type = 'PERSP'
        camera_constraint = camera.constraints.new(type='TRACK_TO')
        camera_constraint.track_axis = 'TRACK_NEGATIVE_Z'
        camera_constraint.up_axis = 'UP_Y'
        camera_constraint.target = camera.parent
        bpy.context.scene.camera = camera
        camera.location = (0, 0, 8)

        bpy.context.scene.render.film_transparent = True
        bpy.context.scene.world.use_nodes = False
        bpy.context.scene.world.color = (0, 0, 0)
        bpy.context.scene.render.resolution_x = 800
        bpy.context.scene.render.resolution_y = 400

        # add a monkey
        bpy.ops.mesh.primitive_monkey_add(enter_editmode=False, align='WORLD', location=(0, 0, 0), scale=(1, 1, 1))


    def get_rays():
        # Get the real fov, because the fov in blender is actually the fov of the widest side.
        render = bpy.context.scene.render
        reso_x = render.resolution_x * render.resolution_percentage / 100
        reso_y = render.resolution_y * render.resolution_percentage / 100
        aspe_x, aspe_y = render.pixel_aspect_x, render.pixel_aspect_y
        fov = bpy.context.scene.camera.data.angle
        # The fov of the other side follows from the tangent relation,
        # not from scaling the angle linearly.
        if reso_x * aspe_x >= reso_y * aspe_y:
            fov_x = fov
            fov_y = 2 * np.arctan(np.tan(fov * 0.5) * (reso_y * aspe_y) / (reso_x * aspe_x))
        else:
            fov_y = fov
            fov_x = 2 * np.arctan(np.tan(fov * 0.5) * (reso_x * aspe_x) / (reso_y * aspe_y))

        # Calculate the direction of the ray
        K = np.array([[1. / np.tan(fov_x * 0.5) * 0.5, 0., 0.5], [0., 1. / np.tan(fov_y * 0.5) * 0.5, 0.5], [0, 0., 1]])
        c2w = np.array(bpy.context.scene.camera.matrix_world)
        # note: the 2 here is an upsampling factor (two rays per pixel along each axis).
        # I found that if the rays are too sparse, there may be some problems,
        # because ray_cast will only return one of the faces when it hits a point or line.
        i, j = np.meshgrid(np.linspace(0, 1, int(reso_x * 2)), np.linspace(0, 1, int(reso_y * 2)), indexing="xy")
        dirs = np.stack([(i - K[0][2]) / K[0][0], -(j - K[1][2]) / K[1][1], -np.ones_like(i)], -1)
        # This line is a matrix multiplication, but with some transpositions on both the input and output:
        # it rotates every camera-space direction into world space.
        dirs = np.einsum('HWj,ij->HWi', dirs, c2w[:3, :3])
        return dirs


    def main():
        init()
        bpy.context.scene.camera.parent.rotation_euler = (np.pi / 6, np.pi / 4, 0)
        bpy.context.view_layer.update()
        cam_location = bpy.context.scene.camera.matrix_world.translation
        rays = get_rays()
        # the four corner rays, used only for the debug cylinders below
        short = [rays[0][0], rays[0][-1], rays[-1][0], rays[-1][-1]]

        # ray_cast!
        # Collect the index of every face hit by a ray in one set per object;
        # the faces that were never hit are deleted together afterwards.
        mapps = {}
        for ray_direction_world in rays.reshape((-1, rays.shape[-1])):
            result, location, normal, index, obj, matrix = bpy.context.scene.ray_cast(bpy.context.view_layer.depsgraph, cam_location, ray_direction_world)
            if result:
                if mapps.get(obj) is None:
                    mapps[obj] = set()
                mapps[obj].add(index)

        for obj, v in mapps.items():
            bpy.context.view_layer.objects.active = obj
            bpy.ops.object.mode_set(mode='EDIT')
            bpy.ops.mesh.select_mode(type='FACE')
            bm = bmesh.from_edit_mesh(obj.data)
            bm.faces.ensure_lookup_table()
            # When entering the face editing mode, all faces of the current object will be selected.
            # Deselect the faces that were hit, so that only the unseen faces are deleted.
            for i in v:
                bm.faces[i].select = False
            bpy.ops.mesh.delete(type='ONLY_FACE')
            bpy.ops.object.mode_set(mode='OBJECT')

        # add some cylinders to represent rays
        for ray_direction_world in short:
            bpy.ops.mesh.primitive_cylinder_add(radius=0.005, depth=100, enter_editmode=False, align='WORLD', location=cam_location, scale=(1, 1, 1))
            ray_object = bpy.context.object
            ray_object.rotation_euler = mathutils.Vector(ray_direction_world).to_track_quat('Z', 'Y').to_euler()  # set the ray direction


    main()
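The script above handles a single camera, while the question asks for what camera A sees and camera B does not. If the ray casting step is run once per camera and the hit face indices are kept instead of being used for deletion, the answer reduces to a set difference. A minimal sketch of that last step, assuming each camera's hits are stored as a dictionary from object name to a set of face indices (the same shape as `mapps`, but keyed by name); `faces_seen_only_by_a` is a hypothetical helper and the hit sets below are made up for illustration:

```python
def faces_seen_only_by_a(hits_a, hits_b):
    """Return {object_name: face_indices} hit from camera A but not from camera B."""
    only_a = {}
    for name, faces_a in hits_a.items():
        # Faces of this object that B also hit are removed; objects B never hit stay whole.
        remaining = faces_a - hits_b.get(name, set())
        if remaining:
            only_a[name] = remaining
    return only_a


# Made-up hit sets, standing in for the results of two ray casting passes.
hits_a = {"Suzanne": {0, 1, 2, 5}, "Cube": {3}}
hits_b = {"Suzanne": {1, 2, 7}}
result = faces_seen_only_by_a(hits_a, hits_b)
print(result)
```

Keying by object name rather than by the object itself keeps the two passes comparable even if the object references are re-fetched between them.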
