I'm trying to bake a material's normals to an image texture, which should be a simple thing! I'm not even baking a more complex model onto a low-poly one. But for some reason, every time I bake, the normals seem to darken the model's surroundings!

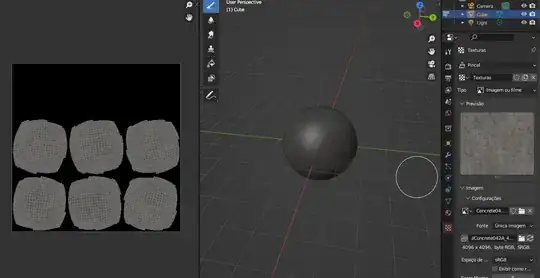

This is the model I made just trying to make simple concrete:

But when I bake the normal map with these settings:

This is what comes out in the texture per object:

It looks right when the camera is close, but from further away it looks like the normals are darkening the model! I'm using object space. I've tried tangent space and the same problem always comes back, and I've tried both CPU and GPU computation with no difference. What's happening?

Update

There's no secret to what I'm doing. This was just a test to see if I could quickly generate a normal map in Blender for a new texture, so I set up a new texture myself using a diffuse image of a concrete material that I found on the internet:

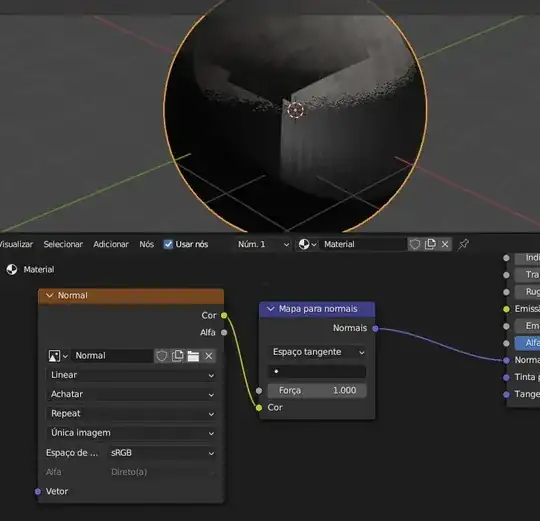

Then I simply used the Bump node together with a color gradient to simulate a normal map for the model, like this:

Then I baked that normal to an image texture and connected the baked image to the Normal Map node, and then... why did it get dark?
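For reference, this is my understanding of what the baked image is supposed to store. It is only a minimal sketch in plain Python, not Blender code, and it assumes the usual convention where each normal component in [-1, 1] is remapped to a colour channel in [0, 1]. The function names `encode_normal` and `decode_normal` are my own, made up for illustration.

```python
import math

def encode_normal(n):
    """Map a unit normal with components in [-1, 1] to RGB in [0, 1].

    Assumes the common 0.5 * n + 0.5 remapping used for normal maps.
    """
    return tuple(0.5 * c + 0.5 for c in n)

def decode_normal(rgb):
    """Inverse mapping: RGB in [0, 1] back to a unit-length vector."""
    v = [2.0 * c - 1.0 for c in rgb]
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

# A flat tangent-space normal pointing straight out of the surface.
flat = (0.0, 0.0, 1.0)
rgb = encode_normal(flat)      # the typical light blue of a flat normal map
restored = decode_normal(rgb)  # should give back the same direction
```

If that is right, then a baked map that decodes to the same normals the Bump node produced should shade identically, so I don't see where the darkening comes from.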

Update 2

When I bake trying to use tangent space, things get completely bizarre!