Yes, you can do this with a Wiener Filter which uses the Wiener-Hopf equation to determine the least squared solution to the filter that would compensate for your channel, using the known transmit and receive sequences. The channel is the unknown being solved, and the tx and rx sequences are known. The Wiener Filter is a Minimum Mean Square Error (MMSE) equalizer as it will determine the filter coefficients to minimize the mean square error for a given filter length. (Similarly "LMS Equalizers", which this is not, use an adaptive and iterative algorithm and converge to the same criteria of minimum least squares error).

BOTTOM LINE:

Here is the Matlab function with error checking removed:

function coeff = equalize(tx,rx,depth,ntaps)

%Determines equalizer coefficients using the Wiener-Hopf equations

%TX = Transmitted (Desired) waveform, row vector, length must be > depth+2*ntaps

%RX = Received (Distorted) waveform, row vector, length must be >=depth

%DEPTH = Depth of solution matrix (recommend 10x ntaps but based on duration of stationarity)

%NTAPS = Number of taps for equalizer filter

%force row vectors

tx= tx(:)';

rx= rx(:)';

delay=floor(ntaps/2);

A=convmtx(rx(1:depth).',ntaps);

R=A'A;

X=[zeros(1,delay) tx(1:depth) zeros(1,ceil(ntaps/2)-1)].';

ro=A'X;

coeff=(inv(R)*ro);

USE:

Once the coeff for the FIR filter are determined using the function above, then the Matlab filter function can process the receive sequence:

tx_recovered = filter(coeff, 1, rx)

If you want to see the channel response of the filter use:

freqz(coeff)

If you want the solution to be the estimate of the channel instead of the compensation filter that undoes the channel response, simply swap tx and rx:

coeff = equalize(rx,tx,depth,ntaps)

DETAILS FOR THE VERY INTERESTED:

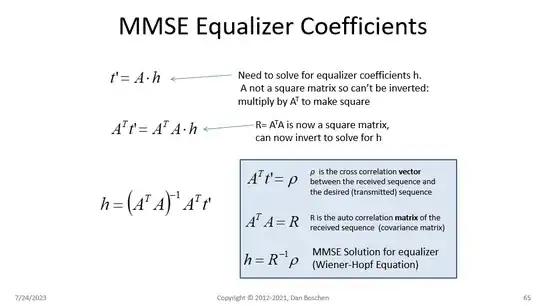

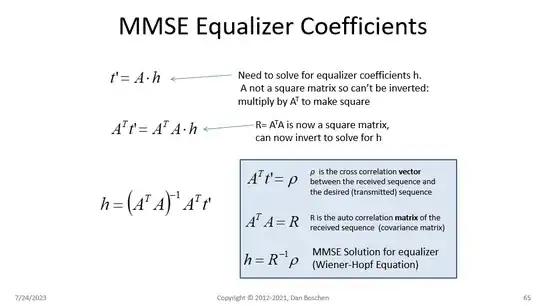

See my slides below giving a high level overview / derivation of the process, This in general form is the Normal Equation (http://mathworld.wolfram.com/NormalEquation.html) used for least squared curve fitting and other applications. I believe I was first introduced to this viewpoint in demonstrating how the Normal Equation is performing deconvolution from the book "Theory and Practice of Modem Design" by John A.C. Bingham.

In practice, I typically do a cross correlation first to determine the channel response time (delay spread) and initial time alignment, and then use an initial equalizer FIR length (# of taps) that exceeds the delay spread (not knowing if leading or trailing echos dominate I will typically start with 2x the delay spread for the FIR length). Once I see the result, the filter size can be reduced if desired based on insignificant magnitudes of the coefficients at the edges of the filter. If the sequences are not exactly aligned, but still within the span of the filter, then the dominant tap will be offset accordingly- so not critical to align beforehand and this gives you insight into what happens if they are grossly misaligned.

Here is an interesting example of the equalizer function I used recently on a sound file from Dalen to equalize the waveforms received by the left and right channels as received by two microphones (treating left as transmit and right as receive and ignoring the actual third party transmitter for the two). The two channels are not recognizable prior to equalization, and completely aligned in amplitude, phase and characteristic after.

Here is a plot of the left and right channels prior to equalization:

Here is the same plot after equalization, right was filtered with the equalizer, and left was filtered with a simple filter just as long as the equalizer with a single unity gain tap in the center and zero elsewhere (to match the delay as the equalizer assumes nominal delay is in the center of the equalizer filter):

This is a zoom in plot of the waveforms after equalization showing how identical the two sequences have become:

How do I know this? I teach courses on DSP and Python related to wireless comm through dsprelated.com and the ieee with new courses running soon! These slides shown are part of my "DSP for Software Radio" course.