PROLOGUE

My answer to this question is in two parts since it is so long and there is a natural cleavage. This answer can be seen as the main body and the other answer as appendices. Consider it a rough draft for a future blog article.

Answer 1

* Prologue (you are here)

* Latest Results

* Source code listing

* Mathematical justification for preliminary checks

Answer 2

* Primary determination probability analysis

* Explanation of the lossless adaptive CORDIC algorithm

* Small angle solution

This turned out to be a way more in depth and interesting problem than it first appeared. The answer given here is original and novel. I, too, am very interested if it, or parts of it, exist in any canon.

The process can be summarized like this:

An initial primary determination is made. This catches about 80% of case very efficiently.

The points are moved to difference/sum space and a one pass series of conditions tested. This catches all but about 1% of cases. Still quite efficient.

The resultant difference/sum pair are moved back to IQ space, and a custom CORDIC approach is attempted

So far, the results are 100% accurate. There is one possible condition which may be a weakness in which I am now testing candidates to turn into a strength. When it is ready, it will be incorporated in the code in this answer, and an explanation added to the other answer.

The code has been updated. It now reports exit location counts. The location points are commented in the function definition. The latest results:

Count: 1048576

Sure: 100.0

Correct: 100.0

Presumed: 0.0

Actually: -1

Faulty: 0.0

High: 1.0

Low: 1.0

1 904736 86.28

2 1192 86.40

3 7236 87.09

4 13032 88.33

5 108024 98.63

6 8460 99.44

Here are the results if my algorithm is not allowed to go into the CORDIC routine, but assumes the answer is zero (8.4% correct assumption). The overall correct rate is 99.49% (100 - 0.51 faulty).

Count: 1048576

Sure: 99.437713623

Correct: 100.0

Presumed: 0.562286376953

Actually: 8.41248303935

Faulty: 0.514984130859

High: 1.05125

Low: 0.951248513674

1 904736 86.28

2 1192 86.40

3 7236 87.09

4 13032 88.33

5 108024 98.63

6 8460 99.44

Okay, I've added an integer interpretation of Olli's algorithm. I would really appreciate somebody double checking my translation into Python. It is located at the end of the source code.

Here are the results:

Count: 1048576

Correct: 94.8579788208

1 841216 80.22 (Partial) Primary Determination

2 78184 87.68 1st CORDIC

3 105432 97.74 2nd

4 10812 98.77 3rd

5 0 98.77 4th

6 12932 100.00 Terminating Guess

Next, I added the "=" to the primary slope line comparisons. This is the version I left in my code.

The results improved. To test it yourself, simply change the function being called in the main() routine.

Correct: 95.8566665649

1 861056 82.12

2 103920 92.03

3 83600 100.00

Here is a Python listing for what I have so far. You can play around with it to your heart's content. If anybody notices any bugs, please let me know.

import array as arr

#================================================

def Main():

#---- Initialize the Counters

theCount = 0

theWrongCount = 0

thePresumedZeroCount = 0

theCloseButNotZeroCount = 0

theTestExits = arr.array( "i", [ 0, 0, 0, 0, 0, 0, 0 ] )

#---- Test on a Swept Area

theLimit = 32

theLimitSquared = theLimit * theLimit

theWorstHigh = 1.0

theWorstLow = 1.0

for i1 in range( theLimit ):

ii1 = i1 * i1

for q1 in range( theLimit ):

m1 = ii1 + q1 * q1

for i2 in range( theLimit ):

ii2 = i2 * i2

for q2 in range( theLimit ):

m2 = ii2 + q2 * q2

D = m1 - m2

theCount += 1

c, t = CompareMags( i1, q1, i2, q2 )

if t <= 6:

theTestExits[t] += 1

if c == 2:

thePresumedZeroCount += 1

if D != 0:

theCloseButNotZeroCount += 1

Q = float( m1 ) / float( m2 )

if Q > 1.0:

if theWorstHigh < Q:

theWorstHigh = Q

else:

if theWorstLow > Q:

theWorstLow = Q

print "%2d %2d %2d %2d %10.6f" % ( i1, q1, i2, q2, Q )

elif c == 1:

if D <= 0:

theWrongCount += 1

print "Wrong Less ", i1, q1, i2, q2, D, c

elif c == 0:

if D != 0:

theWrongCount += 1

print "Wrong Equal", i1, q1, i2, q2, D, c

elif c == -1:

if D >= 0:

theWrongCount += 1

print "Wrong Great", i1, q1, i2, q2, D, c

else:

theWrongCount += 1

print "Invalid c value:", i1, q1, i2, q2, D, c

#---- Calculate the Results

theSureCount = ( theCount - thePresumedZeroCount )

theSurePercent = 100.0 * theSureCount / theCount

theCorrectPercent = 100.0 * ( theSureCount - theWrongCount ) \

/ theSureCount

if thePresumedZeroCount > 0:

theCorrectPresumptionPercent = 100.0 * ( thePresumedZeroCount - theCloseButNotZeroCount ) \

/ thePresumedZeroCount

else:

theCorrectPresumptionPercent = -1

theFaultyPercent = 100.0 * theCloseButNotZeroCount / theCount

#---- Report the Results

print

print " Count:", theCount

print

print " Sure:", theSurePercent

print " Correct:", theCorrectPercent

print

print "Presumed:", 100 - theSurePercent

print "Actually:", theCorrectPresumptionPercent

print

print " Faulty:", theFaultyPercent

print

print " High:", theWorstHigh

print " Low:", theWorstLow

print

#---- Report The Cutoff Values

pct = 0.0

f = 100.0 / theCount

for t in range( 1, 7 ):

pct += f * theTestExits[t]

print "%d %8d %6.2f" % ( t, theTestExits[t], pct )

print

#================================================

def CompareMags( I1, Q1, I2, Q2 ):

# This function compares the magnitudes of two

# integer points and returns a comparison result value

#

# Returns ( c, t )

#

# c Comparison

#

# -1 | (I1,Q1) | < | (I2,Q2) |

# 0 | (I1,Q1) | = | (I2,Q2) |

# 1 | (I1,Q1) | > | (I2,Q2) |

# 2 | (I1,Q1) | ~=~ | (I2,Q2) |

#

# t Exit Test

#

# 1 Primary Determination

# 2 D/S Centers are aligned

# 3 Obvious Answers

# 4 Trivial Matching Gaps

# 5 Opposite Gap Sign Cases

# 6 Same Gap Sign Cases

# 10 Small Angle + Count

# 20 CORDIC + Count

#

# It does not matter if the arguments represent vectors

# or complex numbers. Nor does it matter if the calling

# routine considers the integers as fixed point values.

#---- Ensure the Points are in the First Quadrant WLOG

a1 = abs( I1 )

b1 = abs( Q1 )

a2 = abs( I2 )

b2 = abs( Q2 )

#---- Ensure they are in the Lower Half (First Octant) WLOG

if b1 > a1:

a1, b1 = b1, a1

if b2 > a2:

a2, b2 = b2, a2

#---- Primary Determination

if a1 > a2:

if a1 + b1 >= a2 + b2:

return 1, 1

else:

thePresumedResult = 1

da = a1 - a2

sa = a1 + a2

db = b2 - b1

sb = b2 + b1

elif a1 < a2:

if a1 + b1 <= a2 + b2:

return -1, 1

else:

thePresumedResult = -1

da = a2 - a1

sa = a2 + a1

db = b1 - b2

sb = b1 + b2

else:

if b1 > b2:

return 1, 1

elif b1 < b2:

return -1, 1

else:

return 0, 1

#---- Bring Factors into 1/2 to 1 Ratio Range

db, sb = sb, db

while da < sa:

da += da

sb += sb

if sb > db:

db, sb = sb, db

#---- Ensure the [b] Factors are Both Even or Odd

if ( ( sb + db ) & 1 ) > 0:

da += da

sa += sa

db += db

sb += sb

#---- Calculate Arithmetic Mean and Radius of [b] Factors

p = ( db + sb ) >> 1

r = ( db - sb ) >> 1

#---- Calculate the Gaps from the [b] mean and [a] values

g = da - p

h = p - sa

#---- If the mean of [b] is centered in (the mean of) [a]

if g == h:

if g == r:

return 0, 2;

elif g > r:

return -thePresumedResult, 2

else:

return thePresumedResult, 2

#---- Weed Out the Obvious Answers

if g > h:

if r > g and r > h:

return thePresumedResult, 3

else:

if r < g and r < h:

return -thePresumedResult, 3

#---- Calculate Relative Gaps

vg = g - r

vh = h - r

#---- Handle the Trivial Matching Gaps

if vg == 0:

if vh > 0:

return -thePresumedResult, 4

else:

return thePresumedResult, 4

if vh == 0:

if vg > 0:

return thePresumedResult, 4

else:

return -thePresumedResult, 4

#---- Handle the Gaps with Opposite Sign Cases

if vg < 0:

if vh > 0:

return -thePresumedResult, 5

else:

if vh < 0:

return thePresumedResult, 5

#---- Handle the Gaps with the Same Sign (using numerators)

theSum = da + sa

if g < h:

theBound = ( p << 4 ) - p

theMid = theSum << 3

if theBound > theMid:

return -thePresumedResult, 6

else:

theBound = ( theSum << 4 ) - theSum

theMid = p << 5

if theBound > theMid:

return thePresumedResult, 6

#---- Return to IQ Space under XY Names

x1 = theSum

x2 = da - sa

y2 = db + sb

y1 = db - sb

#---- Ensure Points are in Lower First Quadrant (First Octant)

if x1 < y1:

x1, y1 = y1, x1

if x2 < y2:

x2, y2 = y2, x2

#---- Variation of Olli's CORDIC to Finish

for theTryLimit in range( 10 ):

c, x1, y1, x2, y2 = Iteration( x1, y1, x2, y2, thePresumedResult )

if c != 2:

break

if theTryLimit > 3:

print "Many tries needed!", theTryLimit, x1, y1, x2, y2

return c, 20

#================================================

def Iteration( x1, y1, x2, y2, argPresumedResult ):

#---- Try to reduce the Magnitudes

while ( x1 & 1 ) == 0 and \

( y1 & 1 ) == 0 and \

( x2 & 1 ) == 0 and \

( y2 & 1 ) == 0:

x1 >>= 1

y1 >>= 1

x2 >>= 1

y2 >>= 1

#---- Set the Perpendicular Values (clockwise to downward)

dx1 = y1

dy1 = -x1

dx2 = y2

dy2 = -x2

sdy = dy1 + dy2

#---- Allocate the Arrays for Length Storage

wx1 = arr.array( "i" )

wy1 = arr.array( "i" )

wx2 = arr.array( "i" )

wy2 = arr.array( "i" )

#---- Locate the Search Range

thePreviousValue = x1 + x2 # Guaranteed Big Enough

for theTries in range( 10 ):

wx1.append( x1 )

wy1.append( y1 )

wx2.append( x2 )

wy2.append( y2 )

if x1 > 0x10000000 or x2 > 0x10000000:

print "Danger, Will Robinson!"

break

theValue = abs( y1 + y2 + sdy )

if theValue > thePreviousValue:

break

thePreviousValue = theValue

x1 += x1

y1 += y1

x2 += x2

y2 += y2

#---- Prepare for the Search

theTop = len( wx1 ) - 1

thePivot = theTop - 1

x1 = wx1[thePivot]

y1 = wy1[thePivot]

x2 = wx2[thePivot]

y2 = wy2[thePivot]

theValue = abs( y1 + y2 + sdy )

#---- Binary Search

while thePivot > 0:

thePivot -= 1

uy1 = y1 + wy1[thePivot]

uy2 = y2 + wy2[thePivot]

theUpperValue = abs( uy1 + uy2 + sdy )

ly1 = y1 - wy1[thePivot]

ly2 = y2 - wy2[thePivot]

theLowerValue = abs( ly1 + ly2 + sdy )

if theUpperValue < theLowerValue:

if theUpperValue < theValue:

x1 += wx1[thePivot]

x2 += wx2[thePivot]

y1 = uy1

y2 = uy2

theValue = theUpperValue

else:

if theLowerValue < theValue:

x1 -= wx1[thePivot]

x2 -= wx2[thePivot]

y1 = ly1

y2 = ly2

theValue = theLowerValue

#---- Apply the Rotation

x1 += dx1

y1 += dy1

x2 += dx2

y2 += dy2

#---- Bounce Points Below the Axis to Above

if y1 < 0:

y1 = -y1

if y2 < 0:

y2 = -y2

#---- Comparison Determination

c = 2

if x1 > x2:

if x1 + y1 >= x2 + y2:

c = argPresumedResult

elif x1 < x2:

if x1 + y1 <= x2 + y2:

c = -argPresumedResult

else:

if y1 > y2:

c = argPresumedResult

elif y1 < y2:

c = -argPresumedResult

else:

c = 0

#---- Exit

return c, x1, y1, x2, y2

#================================================

def MyVersionOfOllis( I1, Q1, I2, Q2 ):

# Returns ( c, t )

#

# c Comparison

#

# -1 | (I1,Q1) | < | (I2,Q2) |

# 1 | (I1,Q1) | > | (I2,Q2) |

#

# t Exit Test

#

# 1 (Partial) Primary Determination

# 2 CORDIC Loop + 1

# 6 Terminating Guess

#---- Set Extent Parameter

maxIterations = 4

#---- Ensure the Points are in the First Quadrant WLOG

I1 = abs( I1 )

Q1 = abs( Q1 )

I2 = abs( I2 )

Q2 = abs( Q2 )

#---- Ensure they are in the Lower Half (First Octant) WLOG

if Q1 > I1:

I1, Q1 = Q1, I1

if Q2 > I2:

I2, Q2 = Q2, I2

#---- (Partial) Primary Determination

if I1 < I2 and I1 + Q1 <= I2 + Q2:

return -1, 1

if I1 > I2 and I1 + Q1 >= I2 + Q2:

return 1, 1

#---- CORDIC Loop

Q1pow1 = Q1 >> 1

I1pow1 = I1 >> 1

Q2pow1 = Q2 >> 1

I2pow1 = I2 >> 1

Q1pow2 = Q1 >> 3

I1pow2 = I1 >> 3

Q2pow2 = Q2 >> 3

I2pow2 = I2 >> 3

for n in range ( 1, maxIterations+1 ):

newI1 = I1 + Q1pow1

newQ1 = Q1 - I1pow1

newI2 = I2 + Q2pow1

newQ2 = Q2 - I2pow1

I1 = newI1

Q1 = abs( newQ1 )

I2 = newI2

Q2 = abs( newQ2 )

if I1 <= I2 - I2pow2:

return -1, 1 + n

if I2 <= I1 - I1pow2:

return 1, 1 + n

Q1pow1 >>= 1

I1pow1 >>= 1

Q2pow1 >>= 1

I2pow1 >>= 1

Q1pow2 >>= 2

I1pow2 >>= 2

Q2pow2 >>= 2

I2pow2 >>= 2

#---- Terminating Guess

Q1pow1 <<= 1

Q2pow1 <<= 1

if I1 + Q1pow1 < I2 + Q2pow1:

return -1, 6

else:

return 1, 6

#================================================

Main()

You want to avoid multiplications.

For comparison purposes, not only do you not have to take the square roots, but you can also work with the absolute values.

Let

$$

\begin{aligned}

a_1 &= | I_1 | \\

b_1 &= | Q_1 | \\

a_2 &= | I_2 | \\

b_2 &= | Q_2 | \\

\end{aligned}

$$

Note that for $a,b \ge 0$:

$$ (a+b)^2 \ge a^2 + b^2 $$

Therefore

$$ a_1 > a_2 + b_2 $$

means that

$$ a_1^2 + b_1^2 \ge a_1^2 > ( a_2 + b_2 )^2 \ge a_2^2 + b_2^2 $$

$$ a_1^2 + b_1^2 > a_2^2 + b_2^2 $$

This is true for $b_1$ as well. Also in the other direction, which leads to this logic:

(The previous pseudo-code has been functionally replaced by the Python listing below.)

Depending on your distribution of values, this may save a lot. However, if all the values are expected to be close, you are better off buckling down and evaluating the Else clause from the get go. You can optimize slightly by not calculating s1 unless it is needed.

This is off the top of my head so I can't tell you if it is the best.

Depending on the range of values, a lookup table might also work, but the memory fetches might be more expensive than the calculations.

This should run more efficiently:

(The previous pseudo-code has been functionally replaced by the Python listing below.)

A little more logic:

$$

\begin{aligned}

( a_1^2 + b_1^2 ) - ( a_2^2 + b_2^2 ) &= ( a_1^2 - a_2^2 ) + ( b_1^2 - b_2^2 ) \\

&= (a_1-a_2)(a_1+a_2) + (b_1-b_2)(b_1+b_2) \\

\end{aligned}

$$

When $a_1 > a_2 $ ( and $a_1 \ge b_1 $ and $a_2 \ge b_2 $ as in the code):

$$ (a_1-a_2)(a_1+a_2) + (b_1-b_2)(b_1+b_2) >= (a1-a2)(b1+b2) + (b1-b2)(b1+b2) = [(a1+b1)-(a2+b2)](b1+b2) $$

So if $a_1+b_1 > a_2+b_2$ then

$$ ( a_1^2 + b_1^2 ) - ( a_2^2 + b_2^2 ) > 0 $$

Meaning 1 is bigger.

The reverse is true for $a_1 < a_2 $

The code has been modified. This leaves the Needs Determining cases really small. Still tinkering....

Giving up for tonight. Notice that the comparison of $b$ values after the comparison of $a$ values are actually incorporated in the sum comparisons that follow. I left them in the code as they save two sums. So, you are gambling an if to maybe save an if and two sums. Assembly language thinking.

I'm not seeing how to do it without a "multiply". I put that in quotes because I am now trying to come up with some sort of partial multiplication scheme that only has to go far enough to make a determination. It will be iterative for sure. Perhaps CORDIC equivalent.

Okay, I think I got it mostly.

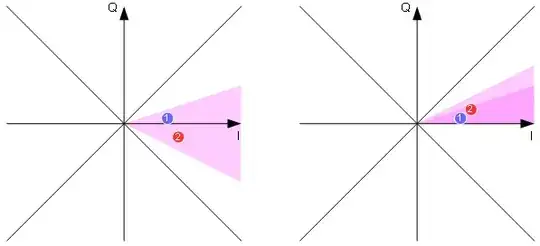

I'm going to show the $ a_1 > a_2 $ case. The less than case works the same, only your conclusion is opposite.

Let

$$

\begin{aligned}

d_a &= a_1 - a_2 \\

s_a &= a_1 + a_2 \\

d_b &= b_2 - b_1 \\

s_b &= b_2 + b_1 \\

\end{aligned}

$$

All these values will be greater than zero in the "Needs Determining" case.

Observe:

$$

\begin{aligned}

D &= (a_1^2 + b_1^2) - (a_2^2 + b_2^2) \\

&= (a_1^2 - a_2^2) + ( b_1^2 - b_2^2) \\

&= (a_1 - a_2)(a_1 + a_2) + (b_1 - b_2)(b_1 + b_2) \\

&= (a_1 - a_2)(a_1 + a_2) - (b_2 - b_1)(b_1 + b_2) \\

&= d_a s_a - d_b s_b

\end{aligned}

$$

Now, if $D=0$ then 1 and 2 are equal. If $D>0$ then 1 is bigger. Otherwise, 2 is bigger.

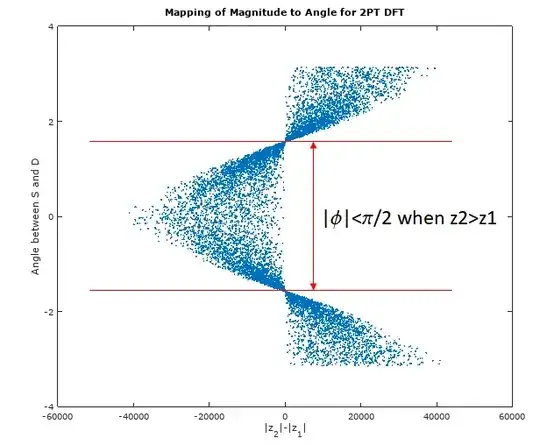

Here is the "CORDIC" portion of the trick:

Swap db, sb # d is now the larger quantity

While da < sa

da =<< 1

sb =<< 1

If sb > db Then Swap db, sb # s is the smaller quantity

EndWhile

When this loop is complete, the following has is true:

$D$ has been multiplied by some power of 2, leaving the sign indication preserved.

$$ 2 s_a > d_a \ge s_a > d_a / 2 $$

$$ 2 s_b > d_b \ge s_b > d_b / 2 $$

In words, the $d$ will be larger than the $s$, and they will be within a factor of two of each other.

Since we are working with integers, the next step requires that both $d_b$ and $s_b$ be even or odd.

If ( (db+sb) & 1 ) > 0 Then

da =<< 1

sa =<< 1

db =<< 1

sb =<< 1

EndIf

This will multiply the $D$ value by 4, but again, the sign indication is preserved.

Let

$$

\begin{aligned}

p &= (d_b + s_b) >> 1 \\

r &= (d_b - s_b) >> 1 \\

\end{aligned}

$$

A little thinking shows:

$$ 0 \le r < p/3 $$

The $p/3$ would be if $ d_b = 2 s_b $.

Let

$$

\begin{aligned}

g &= d_a - p \\

h &= p - s_a \\

\end{aligned}

$$

Plug these in to the $D$ equation that may have been doubled a few times.

$$

\begin{aligned}

D 2^k &= (p+g)(p-h) - (p+r)(p-r) \\

&= [p^2 + (g-h)p - gh] - [p^2-r^2] \\

&= (g-h)p + [r^2- gh] \\

\end{aligned}

$$

If $g=h$ then it is a simple determination: If $r=g$ they are equal. If $r>g$ then 1 is bigger, otherwise 2 is bigger.

Let

$$

\begin{aligned}

v_g &= g - r \\

v_h &= h - r \\

\end{aligned}

$$

Evaluate the two terms on the RHS of the $D2^k$ equation.

$$

\begin{aligned}

r^2 - gh &= r^2 - (r+v_g)(r+v_h) \\

&= -v_g v_h - ( v_g + v_h ) r \\

\end{aligned}

$$

and

$$ g - h = v_g - v_h $$

Plug them in.

$$

\begin{aligned}

D 2^k &= (g-h)p + [r^2- gh] \\

&= (v_g - v_h)p - v_g v_h - ( v_g + v_h ) r \\

&= v_g(p-r) - v_h(p+r) - v_g v_h \\

&= v_g s_b - v_h d_b - \left( \frac{v_h v_g}{2} + \frac{v_h v_g}{2} \right) \\

&= v_g(s_b-\frac{v_h}{2}) - v_h(d_b+\frac{v_g}{2}) \\

\end{aligned}

$$

Multiply both sides by 2 to get rid of the fraction.

$$

\begin{aligned}

D 2^{k+1} &= v_g(2s_b-v_h) - v_h(2d_b+v_g) \\

\end{aligned}

$$

If either $v_g$ or $v_h$ is zero, the sign determination of D becomes trivial.

Likewise, if $v_g$ and $v_h$ have opposite signs the sign determination of D is also trivial.

Still working on the last sliver......

So very close.

theHandledPercent 98.6582716049

theCorrectPercent 100.0

Will continue later.......Anybody is welcome to find the correct handling logic for the same sign case.

Another day, another big step.

The original sign determining equation can be factored like this:

$$

\begin{aligned}

D &= d_a s_a - d_b s_b \\

&= \left( \sqrt{d_a s_a} - \sqrt{d_b s_b} \right)\left( \sqrt{d_a s_a} + \sqrt{d_b s_b} \right) \\

\end{aligned}

$$

The sum factor will always be positive, so it doesn't influence the sign of D. The difference factor is the difference of the two geometric means.

A geometric mean can be approximated by the arithmetic mean. This is the working principle behind the "alpha max plus beta min algorithm". The arithmetic mean is also the upper bound of the geometric mean.

Because the $s$ values are bounded below by $d/2$, a rough lower bound can be established for the geometric mean without much calculation.

$$

\begin{aligned}

s &\ge \frac{d}{2} \\

ds &\ge \frac{d^2}{2} \\

\sqrt{ds} &\ge \frac{d}{\sqrt{2}} \\

&= \frac{\frac{d}{\sqrt{2}}}{(d+s)/2} \cdot \frac{d+s}{2} \\

&= \frac{\sqrt{2}}{1+s/d} \cdot \frac{d+s}{2} \\

&\ge \frac{\sqrt{2}}{1+1/2} \cdot \frac{d+s}{2} \\

&= \frac{2}{3} \sqrt{2} \cdot \frac{d+s}{2} \\

&\approx 0.9428 \cdot \frac{d+s}{2} \\

&> \frac{15}{16} \cdot \frac{d+s}{2} \\

\end{aligned}

$$

If the arithmetic mean of a is greater than b's, then if the upper bound of the geometric mean of b is less than the lower bound of the geometric mean of a it means b must be smaller than a. And vice versa for a.

This takes care of a lot of the previously unhandled cases. The results are now:

theHandledPercent 99.52

theCorrectPercent 100.0

The source code above has been updated.

Those that remain unhandled are "too close to call". They will likely require a lookup table to resolve. Stay tuned.....

Hey Dan,

Well, I would shorten it, but none of it is superfluous. Except maybe the first part, but that is what got the ball rolling. So, a top posted summary would be nearly as long. I do intend to write a blog article instead. This has been a fascinating exercise and much deeper than I initially thought.

I did trim my side note about Olli's slope bound.

You should really be studying the code to understand how few operations actually have to be done. The math in the narrative is simply justification for the operations.

The true "winner" should be the algorithm that is most efficient. A true test would be both approaches programmed on the same platform and tested there. As it is right now, I can tell you that mine (coded in C) will leave his in the dust simply due to I am prototyping with integers and he is using floats with a lot of expensive operations.

My thoughts at this point are that the remaining 0.5% cases I'm not handling are best approached with a CORDIC iterative approach. I am going to try to implement a variation of Olli's approach, including his ingenius varying slope, in integers. The "too close to call" category should be very close indeed.

I agree with you that Olli does excellent work. I've learned a lot from him.

Finally, at the end, aren't we all winners?

Dan,

Your faith in the CORDIC is inspiring. I have implemented a lossless CORDIC different than Olli's, yet might be equivalent. So far, I have not found your assertion that it is the ultimate solution true. I am not going to post the code yet because there ought to be one more test that cinches it.

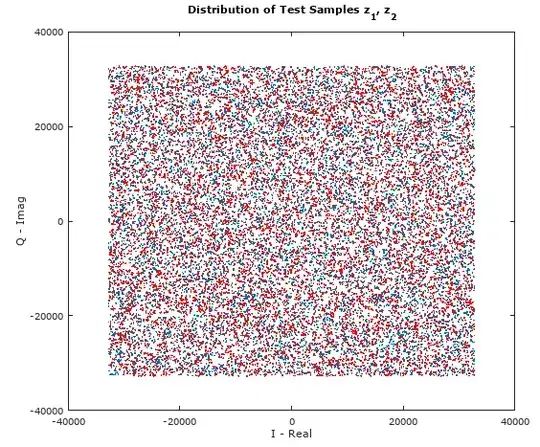

I've changed the testing a little bit to be more comparable to Olli. I am limiting the region to a quarter circle (equivalent to a full circle) and scoring things differently.

Return Meaning

Code

-1 First Smaller For Sure

0 Equal For Sure

1 First Larger For Sure

2 Presumed Zero

The last category could also be called "CORDIC Inconclusive". I recommend for Olli to count that the same.

Here are my current results:

Count: 538756

Sure: 99.9161030225

Correct: 100.0

Presumed: 0.0838969774815

Zero: 87.610619469

Faulty: 0.0103943157942

High: 1.00950118765

Low: 0.990588235294

Out of all the cases 99.92% were determined for sure and all the determinations were correct.

Out of the 0.08% cases that where presumed zero, 87.6% actually were. This means that only 0.01% of the answers were faulty, that is presumed zero erroneously. For those that were the quotient (I1^2+Q1^2)/(I2^2+Q2^2) was calculated. The high and low values are shown. Take the square root to get the actual ratio of magnitudes.

Roughly 83% of cases are caught by the primary determination and don't need any further processing. That saves a lot of work. The CORDIC is needed in about 0.7% of the cases. (Was 0.5% in the previous testing.)

***********************************************************

* *

* C O M P L E T E A N D U T T E R S U C C E S S *

* *

* H A S B E E N A C H I E V E D !!!!!!!!!!! *

* *

***********************************************************

Count: 8300161

Sure: 100.0

Correct: 100.0

Presumed: 0.0

Zero: -1

Faulty: 0.0

High: 1.0

Low: 1.0

You can't do better than that and I am pretty sure you can't do it any faster. Or not by much anyway. I have changed all the "X <<= 1" statements to "X += X" because this is way faster on an 8088. (Sly grin).

The code will stay in this answer and has been updated.

Further explanations are forthcoming in my other answer. This one is long enough as it is and I can't end it on a nicer note than this.

sqrt()andpwr(). That is, unlessabs()is not too expensive in terms of cycles. – a concerned citizen Jan 01 '20 at 17:01