OP's approach follows three basic premises:

- Output at

i, j corresponds to similarity of kernel with input, with kernel centered at i, j, so that the input and output axes are perfectly aligned, as would be the case in 1D continuous time with inputs centered at $t=0$.

- Centering indexing conventions are followed. The "center" can either be at index 0, or its

fftshift which is N//2. Usual image inputs are never centered at index 0, so N//2.

- The images, as-is, are ground truth. Pixels are discrete, so there's no such thing as "fractional index".

As will be shown, all standard filtering follows these premises.

Summary

- Only OP's approach yields valid images as outputs, for valid image inputs

- Only OP's approach yields outputs that can be used as features for vast majority of cases: distance algorithms, edge detection, contour tracing, etc

- Only OP's approach yields outputs subject to subsequent filtering, e.g. after nonlinearity

- Only OP's approach has the property that shifting the target in the image will shift the peak in the output by the same amount, for all shifts

- Only OP's approach can utilize DFT properties to yield commonly expected behavior, like time reversal

- Only OP's approach is consistent with filtering in vast majority of other contexts, including basic spectral manipulation (e.g. lowpass), STFT and CWT, and all of machine learning.

The following disclaimer is specific to this network's regular users: if any of these points are disputed, I'll only reply in comments if the entire answer has been read first.

Minimal version

When doing usual cross-correlation with same output mode, do you input the left or right image?

Right is what non-OP's approaches do, with the conj(fft2(h)) variant being within 1-sample shift:

import numpy as np; from numpy.fft import fft2, ifft2, fftshift

import scipy.signal

x = np.random.randn(30, 30) + 1j*np.random.randn(30, 30)

h = np.random.randn(30, 30) + 1j*np.random.randn(30, 30)

xs = fftshift(fftshift(x, axes=0), axes=1)

assert np.allclose(ifft2(fft2(x) * fft2(np.conj(h[::-1, ::-1]))),

scipy.signal.correlate2d(xs, h, mode='same', boundary='circular'))

Standard filtering

Low-pass, high-pass, band-pass, and much of rudimentary spectral manipulations are mostly done with zero-phase filters, so that: (a) filtering doesn't shift/delay the signal, or misalign its spectral contents; (b) the filter isn't asymmetric so that left or right side of windowed input isn't favored. Zero-phase means real-valued in Fourier; this guarantees that the filter is centered at either 0 or N//2.

CWT, STFT, and all time-frequency analysis is done per premise 1.

Convolution and cross-correlation with 'same' output mode, which ensures that output size equals input size, unpad in a way that ensures premise 1, in every major implementation (NumPy, scipy, MATLAB, all of machine learning). The unpadding is done with surgical precision to ensure this: we unpad such that at each boundary edge, the kernel overlaps (is centered over) input as much as possible.

All of this follows an extremely basic objective of a time-to-time transform: alignment. Output at $t_0$ corresponds to input being modified around $t_0$. For an LTI system with impulse response $h$, the output at $t_0$ is a modification of input with $h$ centered over $x(t_0)$, such that $y(t)$ and $x(t)$ are directly comparable, and that if $h$ is the unit impulse, then $y(t) = x(t)$.

Standard filter formulation

In continuous time, virtually all filters will look like what's on the left rather than on the right:

It's done for same reason all of mathematics is done oriented around the origin, whenever it's the case: it's the reference coordinate. Even if we were to center elsewhere, where would it be for IIR filters? It'd be just as arbitrary as centering at $t=0$, and in the end we'd still have to align it with input to get what we want.

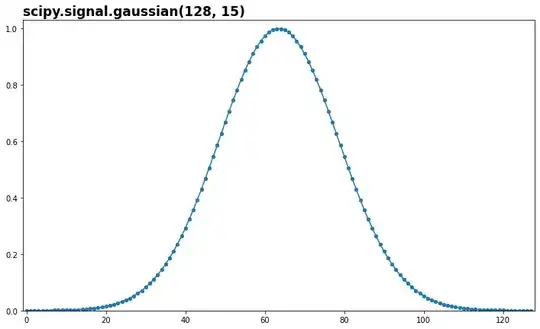

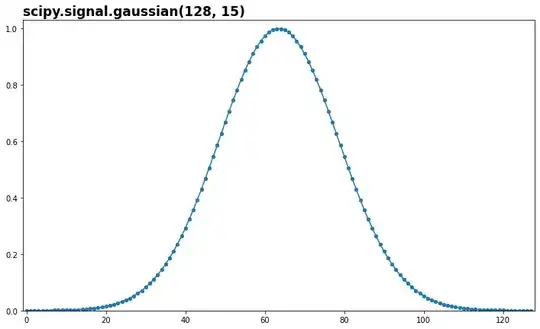

Yes, this is all very basic. And partly for same basic reasons, when we sample a time-domain filter in code, we get something that looks like this:

which is also what all major implementations will return windows as, and indeed this imports from scipy.signal.windows. Yet, they won't do filtering this way; all filtering is done zero-phase, 0-centered. For images, it means this MATLAB example would be:

Onto the premises:

P1: Input-output alignment

This agrees with the origin definition of cross-correlation:

$$

(x \star h)(\tau) = \int_{-\infty}^{\infty} x(t) \overline{h(t - \tau)} dt \tag{1}

$$

Output at shift $\tau$ corresponds to the kernel, $h$, being shifted by $\tau$. Combined with the standard of centering $h$ over $t=0$, this becomes "$h$ being centered over $\tau$".

P2: Center index is either 0 or N//2

All major library-generated filters, if visually centered, will be centered with respect to N//2. Scipy example:

from scipy.signal.windows import hann

for N in range(64, 129):

for sym in (True, False):

w = hann(N, sym=sym)

assert w[N//2] == w.max()

For sym=True, it also passes for N//2 - 1, since by definition it means left half of array equals right, and putting the second peak at N//2 + 1 would be farther off center. sym=False, the intended option for windowed FFT, only passes for N//2. ifftshift moves the peak to index 0.

More pertinently, we're working with FFT, which is centered about 0. fftshift moves this center to N//2, which is why ifftshift moves to 0. These conventions enforce DFT properties; if centers were anywhere else, we'd lose, for example, $x[n] = x[-n] \Leftrightarrow \text{zero phase}$, where negative indexing is circular per DFT's definition. Most properties would lose duality without intermediate and domain-specific shift manipulations. Conversely, if a time-domain manipulation doesn't follow said centering with standard FFT, it won't affect FFT as intended: flip(x) is not time-reversal.

P3: Discrete is ground-truth

Suppose this is false. Then every major library and centering conventions are invalidated.

Of course, we can insist on being centered over fractions, which would require bandlimited half-index-shift interpolation for the even case. This is a valid, yet completely separate sentiment, and completely separate operation that is regardless forced to build (operate) upon a non-fractional indexed output.

Other useful properties

OP's proposal is the only one that satisfies

$$

\tau + 1 > \tau

$$

For ifft(fft(x) * conj(fft(h))), index N//2 and N//2 + 1 are apart by N//2: there's a jump-discontinuity, and indexing is no longer ordinal. This invalidates discrete-continuous domain correspondence, as continuous domains (in any signal processing) are by definition ordinal.

This discontinuity invalidates all spatially local operations upon the output. Any further filtering (e.g. after an intermediate operation), distance algorithms, edge detection, contour tracing - gone. OP's approach preserves spatial contiguity.

Re: Olli's answer

In summary, the question's approach of using ifftshift results in a small indexing error of half a sampling period, for the even period case. This problem cannot be solved by any non-interpolating shifting scheme.

i.e., if it's considered a problem, OP's approach suffers the same as the other alternatives in question.

Then a peak at the first sample in the circular cross-correlation output is quite intuitively, it being at index zero, understood as indicating zero displacement between the input images

Quite unintuitively, index N//2 - 1 is displacement by N//2 - 1, and index N//2 is displacement by -N//2. Shifting target in image by T left or right won't always shift output's peak by T left or right - likewise for up and down.

Another motivation behind the question's approach may be to detect displacements using local methods that do not handle wraparound because they were designed for non-periodic data.

In other words: if your inputs are valid images and you want a valid image output, use OP's approach. Indeed this refers to spatial contiguity.

Re: other approaches

We contrast OP's approach with others' by exploring latter explicitly. This section primarily concerns advocacy for fft(flip(conj(h)))-based approach with the goal of being exact, which was described in this answer by @Gillispie.

Following the answer's setup, Gillispie seeks to show that, in order to obtain equivalence between convolution and cross-correlation, we must time reverse correctly. Regardless of whether or not that point is achieved correctly, there's no mention of the critical practical fact that input images are visually centered, and that not accounting for it will run into all the problems described in this answer. Turns out, it's also not achieved correctly.

To be safe, we examine the relevant portions that show the objective is to be exact when it comes to circular time reversal:

The reason is that for discrete signals such as images, conjugation in the Fourier domain does not equate to time reversal. Rather, it performs modulo N time reversal: $ [x_1, x_2, x_3,... x_N] => [x_1, x_N, x_{N-1}, x_{N-2},... x_2]$.

This completely correct observation is, unfortunately, spinned into the opposite conclusion. We re-iterate the motivation of equivalence, also of correspondence between time and freq domains:

The conjugation property of the continuous time Fourier transform says that conjugating in the frequency domain conjugates and flips in the time domain: $\mathcal{F}^{-1} (\overline{X(\omega)}) = \overline{x(-t)}$ . So you should be able to perform cross-correlation via FFT by just conjugating one of the FFTed vectors, right? Wrong!

So the goal is $\mathcal{F}^{-1} (\overline{X(\omega)}) = \overline{x(-t)}$, in discrete. If readers were careful with this answer, they already see the problem. If not, let's be explicit: "$x(-t)$" assumes the existence of $x(0)$. Yet, $x(0)$ is not at index 0 - it is at index N//2, as Gillispie is aware by stating that flip is the solution. So, we seek $x[n] \rightarrow x[-n]$, with $x[0]$ at N//2. This is not achieved:

It's not achieved for any other choice of $x[0]$ centering either - not N//2 + 1, not N//2 - 1, not 0, or anything else. It is not achieved with Jdip's approach either. It is, ironically, achieved only with OP's approach:

A separate but related problem is described by Olli:

Gillispie is right to emphasize the concern, as it has numerous implications. For a zero-phase complex filter, for example, conjugation is identical to time reversal, but only if it's done right.

The motivation for OP's answer to the linked Q&A was two-fold: to correct Gillispie on his own goal, and to pose the most useful variant of cross-correlation for general image processing. After reading this answer of mine, let the reader judge whether OP succeeded.

ifftshiftbecomesfftshift. – Olli Niemitalo May 09 '23 at 09:55fftshift()in order to implement cross correlation in frequency domain. Even for 2D. You said I'm wrong, I offered you open a question. That's it. Let's focus on creating knowledge. – Royi May 10 '23 at 17:45'same','valid', andpad_modes do. – OverLordGoldDragon May 26 '23 at 00:11