Another way to define the derivative of $f(x)$ (e.g. $f:\mathbb{R} \rightarrow \mathbb{R}$) is the following.

Assume that $f(x+h)$ can be expressed as

$$f(x+h)=f(x)+h \cdot d_f(x, h)$$

for some function $d_f(x, h)$ and all $h$.

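Then we define the derivative $f'(x)$ at the point $x$ to be

$$f'(x)=d_f(x,0)$$

whenever $d_f(x, 0)$ is well-defined. For $h \ne 0$ the defining equation forces $d_f(x,h)=\frac{f(x+h)-f(x)}{h}$, while at $h=0$ it holds for any value of $d_f(x,0)$. "Well-defined" therefore means that $d_f(x,h)$, as a function of $h$, extends continuously to $h=0$ (this is Carathéodory's formulation of differentiability). For polynomials and rational functions this simply means that the algebraic expression for $d_f(x,h)$ can be evaluated at $h=0$.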
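For a polynomial, $d_f(x,h)$ can be found without dividing by $h$: expand $f(x+h)$ in powers of $h$ and drop the constant term $f(x)$. Below is a minimal Python sketch of that computation with exact `Fraction` arithmetic. The coefficient-list representation and the helper names (`shift`, `difference_quotient`, `evaluate`, `derivative_at`) are illustrative assumptions, not taken from the text above.

```python
from fractions import Fraction
from math import comb

def shift(coeffs, x):
    """Coefficients of f(x+h) as a polynomial in h.

    coeffs[k] is the coefficient of x**k in f; x is a fixed number.
    Uses (x+h)**k = sum_j C(k, j) * x**(k-j) * h**j.
    """
    x = Fraction(x)
    out = [Fraction(0)] * len(coeffs)
    for k, c in enumerate(coeffs):
        for j in range(k + 1):
            out[j] += c * comb(k, j) * x ** (k - j)
    return out

def difference_quotient(coeffs, x):
    """Coefficients (in h) of d_f(x, h), where f(x+h) = f(x) + h*d_f(x, h)."""
    return shift(coeffs, x)[1:]

def evaluate(poly, t):
    """Evaluate a coefficient list at t using Horner's rule."""
    result = Fraction(0)
    for c in reversed(poly):
        result = result * t + c
    return result

def derivative_at(coeffs, x):
    """f'(x) = d_f(x, 0): evaluate d_f(x, h) at h = 0."""
    return evaluate(difference_quotient(coeffs, x), 0)
```

For $f(x)=x^3$ at $x=2$, `difference_quotient([0, 0, 0, 1], 2)` gives the coefficients `[12, 6, 1]`, i.e. $d_f(2,h)=12+6h+h^2$, and `derivative_at` returns $12$. For $h \ne 0$, evaluating these coefficients at $h$ reproduces the secant slope $\frac{f(2+h)-f(2)}{h}$.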

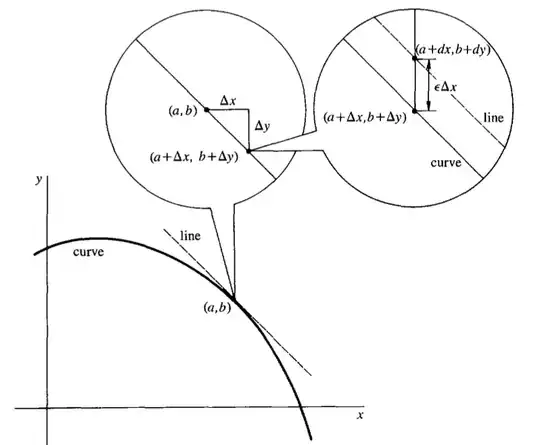

Intuitively, for $h \ne 0$, $d_f(x,h)$ is the slope of the secant through the points $(x, f(x))$ and $(x+h, f(x+h))$. The definition takes its value when the secant becomes the tangent (i.e. $h=0$).

Using the above definition, one can prove the familiar rules of differentiation.

Assume $f(x)$, $g(x)$ have derivatives $f'(x)$, $g'(x)$. That means there are functions $d_f(x,h)$ and $d_g(x, h)$ such that $f(x+h)=f(x)+h \cdot d_f(x, h)$ and $g(x+h)=g(x) + h \cdot d_g(x, h)$ and $f'(x)=d_f(x,0)$ and $g'(x)=d_g(x,0)$.

Linearity

$cf(x+h) = c(f(x)+h\cdot d_f(x,h))=cf(x)+h \cdot (c \cdot d_f(x,h))$.

Thus $(cf)'=c \cdot d_f(x,0)=cf'$.

Addition Rule

$f(x+h)+g(x+h)=f(x)+g(x)+h \cdot (d_f(x,h)+d_g(x,h))$.

Thus $(f+g)'=d_f(x,0)+d_g(x,0)=f'+g'$.

Product Rule

$$\begin{align} f(x+h) \cdot g(x+h) &= (f(x)+h \cdot d_f(x,h)) \cdot (g(x)+h \cdot d_g(x,h)) \\ &= f(x)g(x)+h(g(x) \cdot d_f(x,h)+f(x) \cdot d_g(x,h)+h \cdot d_f(x,h) \cdot d_g(x,h)) \end{align}$$

Thus $(f \cdot g)'=g(x) \cdot d_f(x,0)+f(x) \cdot d_g(x,0)+0=g \cdot f'+f \cdot g'$.
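The product identity is exact for every $h \ne 0$, not only at $h=0$. Below is a small Python check with exact rationals. It takes $d_f(x,h)$ to be the secant slope $\frac{f(x+h)-f(x)}{h}$, which the definition forces for $h \ne 0$. The sample functions and the names `secant` and `product_identity_holds` are arbitrary choices for illustration.

```python
from fractions import Fraction

def secant(f, x, h):
    """d_f(x, h) for h != 0: the unique value with f(x+h) = f(x) + h*d_f(x, h)."""
    return (f(x + h) - f(x)) / h

def product_identity_holds(f, g, x, h):
    """Check d_{fg}(x,h) == g(x)*d_f(x,h) + f(x)*d_g(x,h) + h*d_f(x,h)*d_g(x,h)."""
    df, dg = secant(f, x, h), secant(g, x, h)
    d_fg = secant(lambda t: f(t) * g(t), x, h)
    return d_fg == g(x) * df + f(x) * dg + h * df * dg

def f(t):
    return t ** 2 + 1

def g(t):
    return 3 * t - 5

# Exact rational arithmetic, so equality is tested without rounding error.
x = Fraction(2)
assert all(product_identity_holds(f, g, x, Fraction(1, n)) for n in range(1, 20))
```

The check only covers $h \ne 0$. Passing to $h=0$, where the last term $h \cdot d_f \cdot d_g$ vanishes, is the step that yields the product rule.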

Chain Rule

$$\begin{align} f(g(x+h)) &= f(g(x)+h \cdot d_g(x,h)) \\ &= f(g(x))+h \cdot d_g(x,h) \cdot d_f(g(x),h \cdot d_g(x,h)) \end{align}$$

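Here the second step applies the definition of $d_f$ at the point $g(x)$ with increment $h \cdot d_g(x,h)$. Setting $h=0$ gives $(f \circ g)'(x)=g'(x) \cdot f'(g(x))$, i.e. $(f \circ g)'=g' \cdot f'(g)$.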
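The chain-rule identity can be checked the same way for $h \ne 0$, again with $d_f$ taken as the secant slope. This is a sketch with hypothetical helper names. It needs $g(x+h) \ne g(x)$, since otherwise the inner increment $h \cdot d_g(x,h)$ is $0$ and the secant slope of $f$ at $g(x)$ is undefined.

```python
from fractions import Fraction

def secant(f, x, h):
    """d_f(x, h) for h != 0."""
    return (f(x + h) - f(x)) / h

def chain_identity_holds(f, g, x, h):
    """Check d_{f o g}(x,h) == d_g(x,h) * d_f(g(x), h*d_g(x,h)).

    Needs g(x+h) != g(x); otherwise the inner increment k is 0 and
    secant(f, g(x), k) raises ZeroDivisionError.
    """
    dg = secant(g, x, h)
    k = h * dg  # increment of the inner function: equals g(x+h) - g(x)
    d_fog = secant(lambda t: f(g(t)), x, h)
    return d_fog == dg * secant(f, g(x), k)

def f(t):
    return t ** 3 - t

def g(t):
    return t ** 2 + 1  # strictly increasing for t > 0, so k != 0 below

x = Fraction(1)
assert all(chain_identity_holds(f, g, x, Fraction(1, n)) for n in range(1, 20))
```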

Constant

$c = c +h \cdot 0$. Thus $(c)'=0$.

Identity

$f(x) = x$

$f(x+h) = x + h \cdot 1$

Thus $f'(x) = (x)' = 1$.

Reciprocal

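$f(x)=1/g(x)$, where $g(x) \ne 0$.

Since $g(x) \cdot f(x) = 1$, differentiating both sides (assuming $f$ has a derivative) gives $(g \cdot f)' = (1)' \implies gf' + g'f = 0 \implies f' = -\frac{g'f}{g} = \frac{-g'}{g^2}$.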
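The argument above presupposes that $f = 1/g$ already has a derivative. A direct computation from the definition avoids that assumption and produces $d_f$ explicitly, assuming $g(x) \ne 0$ and $g(x+h) \ne 0$:

$$f(x+h) - f(x) = \frac{1}{g(x+h)} - \frac{1}{g(x)} = \frac{g(x) - g(x+h)}{g(x)\,g(x+h)} = h \cdot \left(-\frac{d_g(x,h)}{g(x)\,g(x+h)}\right)$$

So $d_f(x,h) = -\frac{d_g(x,h)}{g(x)\,g(x+h)}$. At $h=0$, since $g(x+0)=g(x)$, this gives $f'(x) = -\frac{g'(x)}{g(x)^2}$.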

Inverse

$f(f^{-1}(x))=x$.

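Thus, by the chain rule and the identity rule, $(f(f^{-1}(x)))'= (f^{-1})'(x) \cdot f'(f^{-1}(x)) = 1 \implies (f^{-1})'(x) = \frac{1}{f'(f^{-1}(x))}$, provided $f'(f^{-1}(x)) \ne 0$.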
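Using the chain rule here presupposes that $f^{-1}$ has a derivative. The same conclusion can be reached from the definition directly. Write $y = f^{-1}(x)$ and $k = f^{-1}(x+h) - y$, so that $f^{-1}(x+h) = y + k$, with $k \ne 0$ when $h \ne 0$ because $f^{-1}$ is injective. Then:

$$x + h = f(y+k) = f(y) + k \cdot d_f(y,k) = x + k \cdot d_f(y,k)$$

Hence $h = k \cdot d_f(y,k)$, and

$$d_{f^{-1}}(x,h) = \frac{f^{-1}(x+h) - f^{-1}(x)}{h} = \frac{k}{h} = \frac{1}{d_f(y,k)}$$

At $h=0$ we have $k=0$. Using continuity of $f^{-1}$ to pass from $h \ne 0$ to $h = 0$, this gives $(f^{-1})'(x) = \frac{1}{f'(f^{-1}(x))}$.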

Monomial

For a positive integer $n$, $f(x) = x^n \implies f'(x) = nx^{n-1}$ can be proved by induction from $f(x)=x$ and the product rule, writing $x^n = x \cdot x^{n-1}$.
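For $f(x)=x^n$ one can also write $d_f$ in closed form, which gives the same result without induction. From $a^n - b^n = (a-b)\sum_{j=0}^{n-1} a^j b^{n-1-j}$ with $a = x+h$ and $b = x$,

$$d_f(x,h) = \sum_{j=0}^{n-1} (x+h)^j x^{n-1-j},$$

and at $h=0$ each of the $n$ terms equals $x^{n-1}$. Below is a small Python check with exact rationals; `d_power` is a hypothetical helper name.

```python
from fractions import Fraction

def d_power(n, x, h):
    """d_f(x, h) for f(x) = x**n (n >= 1), from the geometric-sum factorization."""
    return sum((x + h) ** j * x ** (n - 1 - j) for j in range(n))

x, h = Fraction(3, 2), Fraction(-2, 5)
for n in range(1, 8):
    # f(x+h) = f(x) + h * d_f(x, h) holds exactly
    assert (x + h) ** n == x ** n + h * d_power(n, x, h)
    # at h = 0 the sum collapses to n * x**(n-1)
    assert d_power(n, x, 0) == n * x ** (n - 1)
```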

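$f(x) = x^{-n} \implies f'(x) = -nx^{-n-1}$ can be proved with the chain rule on $f(x)=p(q(x))=(\frac{1}{x})^n$, where $p(u)=u^n$ and $q(x)=\frac{1}{x}$. The derivative $q'(x) = -\frac{1}{x^2}$ follows from the reciprocal rule.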

$f(x) = x^{1/n} \implies f'(x) = \frac{1}{n}x^{1/n-1}$ (for $x>0$) can be proved with the inverse rule. Here $f^{-1}(x)=x^n$, so $f'(x) = \frac{1}{(f^{-1})'(f(x))} = \frac{1}{n\,x^{(n-1)/n}}$.
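For $n=2$ the same result can be read off the definition directly by rationalizing the numerator (for $x>0$, $x+h>0$, $h \ne 0$):

$$d_f(x,h) = \frac{\sqrt{x+h}-\sqrt{x}}{h} = \frac{(x+h)-x}{h\,(\sqrt{x+h}+\sqrt{x})} = \frac{1}{\sqrt{x+h}+\sqrt{x}}$$

The last expression can be evaluated at $h=0$, giving $f'(x) = d_f(x,0) = \frac{1}{2\sqrt{x}} = \frac{1}{2}x^{-1/2}$, in agreement with the general formula.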

Exponential

Differentiating term by term:

$$(e^x)' = \left(\sum_{n=0}^\infty \frac{x^n}{n!}\right)' = \sum_{n=1}^\infty \frac{n x^{n-1}}{n!} = \sum_{n=1}^\infty \frac{x^{n-1}}{(n-1)!} = \sum_{n=0}^\infty \frac{x^n}{n!} = e^x$$
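On truncated series the computation is purely about coefficients. Differentiating $\sum c_n x^n$ term by term gives coefficients $(n+1)c_{n+1}$, and with $c_n = 1/n!$ these are again $c_n$. Below is a short Python check with exact rationals; the truncation order `N` and the helper names are arbitrary choices.

```python
from fractions import Fraction
from math import factorial

def exp_coeffs(N):
    """Coefficients 1/n! of the exponential series, for n = 0..N."""
    return [Fraction(1, factorial(n)) for n in range(N + 1)]

def differentiate(coeffs):
    """Term-by-term derivative: sum c_n x^n  ->  sum n c_n x^(n-1)."""
    return [n * c for n, c in enumerate(coeffs)][1:]

N = 12
# The derivative of the degree-N partial sum is the degree-(N-1) partial sum.
assert differentiate(exp_coeffs(N)) == exp_coeffs(N - 1)
```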

And so on.

Most importantly, the derivatives of many functions (e.g. polynomials, rational functions, power series, trigonometric functions) can be computed using the above definition and rules without any explicit use or mention of limits.

With this definition of the derivative one can even demonstrate a variation of L'Hôpital's rule for evaluating the ratio $\frac{f(a)}{g(a)}$ when $f(a)=g(a)=0$, i.e. when the ratio is the indeterminate form $\frac{0}{0}$.

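Assuming $f,g$ have derivatives, consider the ratio for $h \ne 0$:

$$\begin{align}\frac{f(a+h)}{g(a+h)} &=\frac{f(a)+h \cdot d_f(a,h)}{g(a)+h \cdot d_g(a,h)}\\&=\frac{h \cdot d_f(a,h)}{h \cdot d_g(a,h)}\\&=\frac{d_f(a,h)}{d_g(a,h)}\end{align}$$

The second step uses $f(a)=g(a)=0$. Unlike the left-hand side, the right-hand side can be evaluated at $h=0$, and its value there is what the original ratio is taken to mean:

$$\frac{f(a)}{g(a)} := \frac{d_f(a,0)}{d_g(a,0)} = \frac{f'(a)}{g'(a)}$$

This assumes $g'(a) \ne 0$.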
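As a concrete case chosen for illustration, take $f(x)=x^3-8$ and $g(x)=x^2-4$ at $a=2$. Expanding gives $d_f(2,h)=12+6h+h^2$ and $d_g(2,h)=4+h$, so the ratio at $h=0$ is $\frac{12}{4}=3$. Below is a Python sketch that checks, with exact rationals, that $\frac{f(2+h)}{g(2+h)}=\frac{d_f(2,h)}{d_g(2,h)}$ for several $h \ne 0$.

```python
from fractions import Fraction

def f(t):
    return t ** 3 - 8

def g(t):
    return t ** 2 - 4

def d_f(h):
    return 12 + 6 * h + h ** 2  # f(2+h) = f(2) + h*d_f(h), and f(2) = 0

def d_g(h):
    return 4 + h  # g(2+h) = g(2) + h*d_g(h), and g(2) = 0

a = 2
for n in range(1, 10):
    h = Fraction(1, n)
    # For h != 0 both ratios agree exactly
    assert f(a + h) / g(a + h) == d_f(h) / d_g(h)

# Only the right-hand side can be evaluated at h = 0
ratio_at_a = Fraction(d_f(0), d_g(0))
print(ratio_at_a)  # 3
```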

An important note is that $f(x+\Delta x) \approx f(x) + \Delta x \cdot f'(x)$ is a valid approximation whenever $d_f(x, \Delta x) \approx d_f(x, 0)$, which is a more general condition than $\Delta x \approx 0$.
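The note follows from the exact identity $f(x+\Delta x) - \big(f(x) + \Delta x \cdot f'(x)\big) = \Delta x \cdot \big(d_f(x,\Delta x) - d_f(x,0)\big)$. For $f(x)=x^2$, $d_f(x,\Delta x) = 2x+\Delta x$, so the error is $\Delta x^2$. For an affine $f$, $d_f$ does not depend on $\Delta x$, so the approximation is exact even for large $\Delta x$. Below is a small Python illustration; the helper names are hypothetical.

```python
def linear_error(f, df, x, dx):
    """Error of the tangent-line approximation f(x) + dx * f'(x), with f'(x) = df(x, 0)."""
    return f(x + dx) - (f(x) + dx * df(x, 0))

def square(t):
    return t * t

def d_square(x, h):
    return 2 * x + h  # (x+h)^2 = x^2 + h*(2x + h)

def affine(t):
    return 3 * t + 1

def d_affine(x, h):
    return 3  # 3(x+h) + 1 = (3x + 1) + h*3, independent of h

x, dx = 3, 5
# Error equals dx * (d_f(x, dx) - d_f(x, 0)): here 5 * (11 - 6) = 25
assert linear_error(square, d_square, x, dx) == dx * (d_square(x, dx) - d_square(x, 0)) == 25
# Affine f: d_f does not depend on dx, so the error is 0 even for large dx
assert linear_error(affine, d_affine, x, 1000) == 0
```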