I think it is better to understand the result with some more clarity.

Let's begin with the continuity part:

Theorem 1: Let a function $f:[a, b] \to\mathbb {R} $ be strictly monotone and continuous on $[a, b] $ and let $I=f([a, b]) $ be the range of $f$. Then there exists a function $g:I \to\mathbb {R} $ such that $g$ is continuous on $I$ and $$f(g(x)) =x\, \forall x\in I, g(f(x)) =x\, \forall x\in[a, b] $$

The function $g$ is unique and is traditionally denoted by $f^{-1}$. The important point of the theorem is that the inverse of a strictly monotone continuous function is itself continuous. Strict monotonicity is not an extra restriction here: an invertible function must be one-one, and a continuous one-one function on an interval is necessarily strictly monotone. It is also worth remarking that $I=f([a, b]) $ is itself an interval, namely $[f(a), f(b)] $ or $[f(b), f(a)] $ according as $f$ is increasing or decreasing.

You should be able to prove the above theorem using properties of continuous functions on a closed interval.

Once the continuity part is settled, dealing with derivatives is not difficult, and we have:

Theorem 2: Let a function $f:[a, b] \to\mathbb {R} $ be strictly monotone and continuous on $[a, b] $. Let $c\in (a, b) $ be such that $f$ is differentiable at $c$ with $f'(c) \neq 0$, and let $d=f(c) $. Then the inverse function $f^{-1}$ is differentiable at $d$ with the derivative given by $$(f^{-1})'(d)=\frac{1}{f'(c)}=\frac{1}{f'(f^{-1}(d))}$$

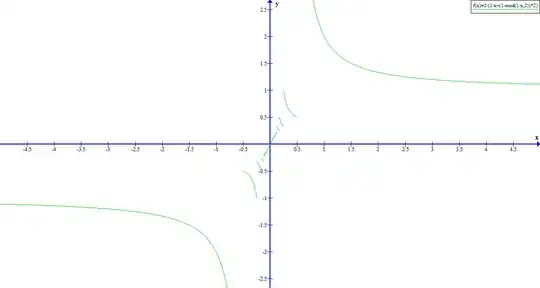

Before coming to the proof of the theorem above it is best to illustrate it via a typical example. Let $f:[-\pi/2,\pi/2]\to\mathbb{R}$ be defined by $f(x) =\sin x$; its range is $I=[-1,1]$. The derivative $f'(x) =\cos x$ is non-zero in $(-\pi/2,\pi/2)$ and hence the inverse function $f^{-1}$ (usually denoted by $\arcsin$) is differentiable on $(-1,1) $.

To evaluate $(f^{-1})'(x)$ for $x\in (-1,1)$ we use the point $y\in(-\pi/2,\pi/2)$ such that $x=f(y) =\sin y$, and we have $$(f^{-1})'(x)=\frac{1}{f'(y)}=\frac{1}{\cos y}=\frac{1}{\sqrt{1-\sin^2y}}=\frac{1}{\sqrt{1-x^2}}$$ The positive square root is the correct one because $\cos y>0$ for $y\in(-\pi/2,\pi/2)$.

The proof of the above theorem is based on the definition of the derivative. One should note that, as per Theorem 1, the inverse function $f^{-1}$ is continuous on the range of $f$ and in particular at the point $d=f(c) $. We have

\begin{align}
(f^{-1})'(d)&=\lim_{h\to 0}\frac{f^{-1}(d+h)-f^{-1}(d)}{h}\notag\\
&=\lim_{k\to 0}\frac{k}{f(c+k)-f(c)}\notag\\
&=\frac{1}{f'(c)}\notag
\end{align}

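Here we have used $$k=f^{-1}(d+h)-f^{-1}(d)=f^{-1}(d+h)-c$$ so that $$d+h=f(c+k)$$ or $$h=f(c+k) - d=f(c+k) - f(c) $$ Since $f^{-1}$ is one-one, we have $k\neq 0$ whenever $h\neq 0$, and by continuity of $f^{-1}$ at $d$ we have $k\to 0$ as $h\to 0$. This justifies replacing the limit in $h$ by a limit in $k$, and the last step uses $f'(c)\neq 0$ to take the reciprocal of the limit of $(f(c+k)-f(c))/k$.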
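The formula can also be checked numerically on the $\arcsin$ example. The following is only a rough sketch: the helper names `difference_quotient` and `inverse_derivative_formula` and the step size `h` are my own illustrative choices, and a finite difference can only suggest, not prove, the value of a derivative.

```python
import math

def difference_quotient(g, d, h=1e-6):
    """Symmetric difference quotient approximating g'(d) (hypothetical helper)."""
    return (g(d + h) - g(d - h)) / (2 * h)

def inverse_derivative_formula(x):
    """Theorem 2 applied to f = sin: 1 / f'(f^{-1}(x)) = 1 / cos(arcsin x)."""
    return 1.0 / math.cos(math.asin(x))

# Compare the numerical derivative of arcsin with the two closed forms.
for x in (-0.9, -0.5, 0.0, 0.5, 0.9):
    numeric = difference_quotient(math.asin, x)
    formula = inverse_derivative_formula(x)
    closed = 1.0 / math.sqrt(1.0 - x * x)
    print(f"x={x:+.1f}  numeric={numeric:.6f}  formula={formula:.6f}  closed={closed:.6f}")
```

The three columns should agree to within the accuracy of the difference quotient for points well inside $(-1,1)$; near the endpoints $\pm 1$ the derivative blows up and the approximation degrades.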

It should be observed that for the result to hold we need both that the derivative $f'(c) $ is non-zero and that $f^{-1}$ is continuous at $d=f(c) $.
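A standard example (my own illustration, not part of the theorem) shows that the condition $f'(c)\neq 0$ cannot be dropped. Take $f:[-1,1]\to\mathbb{R}$ with $f(x)=x^3$, which is strictly increasing and continuous, and $c=0$, so that $d=f(0)=0$ and $f'(0)=0$. The inverse is $f^{-1}(x)=x^{1/3}$, and its difference quotient at $d=0$ is $$\frac{f^{-1}(0+h)-f^{-1}(0)}{h}=\frac{h^{1/3}}{h}=\frac{1}{h^{2/3}}\to\infty\text{ as }h\to 0$$ so $f^{-1}$ is continuous at $0$ but not differentiable there. At every other point $c\neq 0$ we have $f'(c)=3c^2\neq 0$ and Theorem 2 gives $(f^{-1})'(d)=\dfrac{1}{3c^2}=\dfrac{1}{3d^{2/3}}$.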