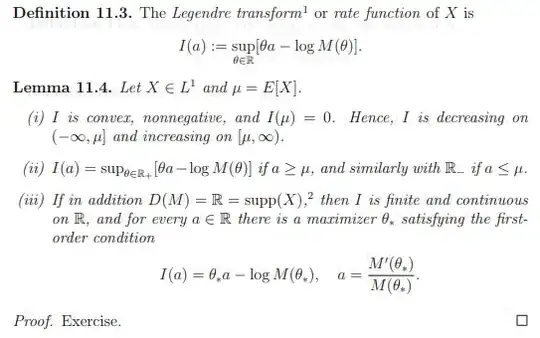

Let $X \in L^1$ be a random variable on some probability space, define $M(\theta) \equiv E(e^{\theta X})$ as its moment generating function and let $D(M) \equiv \{\theta \in \mathbb{R} : M(\theta) < \infty\}$. I'm reading a really toxic set of lecture notes which has the following lemma as an exercise and I cant find the same theorem online. I'm having trouble proving bullet point (iii) in particular:

My attempt at a proof follows. You may ignore bullet points (i) and (ii) if you wish, but they may be helpful:

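For ease of notation, I define $f_a(\theta) \equiv a\theta - \log(M(\theta))$, so that $I(a) = \sup_{\theta \in \mathbb{R}} f_a(\theta)$ (with the convention $\log \infty = \infty$, so $f_a(\theta) = -\infty$ for $\theta \notin D(M)$).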
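As a sanity check on this notation (a standard textbook example, not taken from the notes): if $X \sim N(0,1)$, then $M(\theta) = e^{\theta^2/2}$ for every $\theta$, so $D(M) = \mathbb{R}$, $\mu = 0$, $M'(\theta)/M(\theta) = \theta$ and
$$f_a(\theta) = a\theta - \frac{\theta^2}{2}, \qquad f_a'(\theta) = a - \frac{M'(\theta)}{M(\theta)} = a - \theta.$$
The unique critical point is $\theta = a$, and
$$I(a) = \sup_{\theta} f_a(\theta) = a \cdot a - \frac{a^2}{2} = \frac{a^2}{2},$$
which is convex, non-negative, zero exactly at $a = \mu = 0$, and finite and continuous on all of $\mathbb{R}$.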

Bullet point (i): Since $M(0) = 1$, we have $f_a(0) = 0 \leq \sup_\theta f_a(\theta) = I(a)$. Convexity follows easily since for all $a, b, \theta \in \mathbb{R}$ and $\lambda \in [0,1]$, $$(\lambda a + (1-\lambda)b)\theta - \log (M(\theta)) = \lambda (a \theta - \log(M(\theta))) + (1-\lambda)(b\theta - \log (M(\theta))) \leq \lambda I(a) + (1-\lambda)I(b),$$ and we can take the supremum of the LHS over $\theta$.

We also have, by the convexity of $-\log(x)$ and Jensen's inequality, $$-\theta \mu = E(-\log(e^{\theta X})) \ge -\log(E(e^{\theta X})) = -\log (M(\theta)).$$ Rearranging gives $f_\mu(\theta) \leq 0$ for all $\theta$ (trivially so for $\theta$ such that the mgf is infinite), so the supremum satisfies $I(\mu) \leq 0$; combined with non-negativity, $I(\mu) = 0$. Lastly, for each $b \leq a \leq \mu$ there exists $\lambda \in [0,1]$ such that $a = \lambda b + (1-\lambda)\mu$. From convexity, non-negativity and $I(\mu) = 0$, it is immediate that $I(a) \leq \lambda I(b) + (1-\lambda)I(\mu) = \lambda I(b) \leq I(b)$. The same argument works for $\mu \leq a \leq b$.
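For what it's worth, the properties in (i) can be checked numerically on a concrete case. The sketch below is only an illustration under assumptions of my own: it takes $X \sim \text{Bernoulli}(p)$ with the hypothetical value $p = 0.3$ (so $\mu = p$ and $M(\theta) = 1 - p + pe^\theta$), approximates $I(a)$ by a maximum of $f_a$ over a finite grid of $\theta$ values, and compares against the known closed form $I(a) = a\log(a/p) + (1-a)\log\frac{1-a}{1-p}$ for $0 < a < 1$. The helper names are made up for this sketch.

```python
import math


def log_mgf_bernoulli(theta: float, p: float) -> float:
    """log M(theta) for X ~ Bernoulli(p), where M(theta) = 1 - p + p*e^theta."""
    return math.log(1.0 - p + p * math.exp(theta))


def rate_on_grid(a: float, p: float, thetas: list[float]) -> float:
    """Approximate I(a) = sup_theta f_a(theta) by a maximum over a finite grid."""
    return max(a * t - log_mgf_bernoulli(t, p) for t in thetas)


def rate_bernoulli_exact(a: float, p: float) -> float:
    """Closed-form I(a) for Bernoulli(p), valid for 0 < a < 1."""
    return a * math.log(a / p) + (1 - a) * math.log((1 - a) / (1 - p))


p = 0.3                                             # hypothetical parameter, so mu = E(X) = p
mu = p
thetas = [(k - 2000) / 100 for k in range(4001)]    # grid on [-20, 20] that contains theta = 0
a_values = [k / 20 for k in range(1, 20)]           # a = 0.05, 0.10, ..., 0.95
I = {a: rate_on_grid(a, p, thetas) for a in a_values}

tol = 1e-12
# Non-negativity: theta = 0 is on the grid and f_a(0) = 0.
assert all(v >= -tol for v in I.values())
# I(mu) = 0: by Jensen, f_mu(theta) <= 0 for every theta.
assert abs(I[mu]) < tol
# Midpoint convexity on consecutive, equally spaced values of a.
for lo, mid, hi in zip(a_values, a_values[1:], a_values[2:]):
    assert I[mid] <= 0.5 * (I[lo] + I[hi]) + tol
# Monotonicity: non-increasing on (0, mu], non-decreasing on [mu, 1).
for a, b in zip(a_values, a_values[1:]):
    if b <= mu:
        assert I[a] >= I[b] - tol
    elif a >= mu:
        assert I[a] <= I[b] + tol
# The grid maximum agrees with the closed form up to the grid resolution.
assert all(abs(I[a] - rate_bernoulli_exact(a, p)) < 1e-5 for a in a_values)
```

A grid maximum of functions that are affine in $a$ is itself convex in $a$, so the convexity check is not an artefact of the discretisation.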

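Bullet point (ii): For $a \ge \mu$ and any $\theta < 0$, we have $f_a(\theta) = f_\mu(\theta) + (a - \mu)\theta \leq f_\mu(\theta) \leq 0 = f_a(0)$ by bullet point (i), so negative values of $\theta$ never exceed the value at $\theta = 0$ in the supremum. The conclusion is immediate from here, and the analogous inequalities hold in the other case ($a \le \mu$, $\theta > 0$).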
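The restriction in (ii) can be illustrated numerically in the same hedged way. Again this assumes $X \sim \text{Bernoulli}(p)$ with a hypothetical $p = 0.3$ and a finite grid of $\theta$ values in place of the true supremum; it checks that for $a \ge \mu$ the maximum over $\theta \ge 0$ equals the maximum over all $\theta$ (and symmetrically for $a \le \mu$).

```python
import math


def log_mgf_bernoulli(theta: float, p: float) -> float:
    """log M(theta) for X ~ Bernoulli(p), where M(theta) = 1 - p + p*e^theta."""
    return math.log(1.0 - p + p * math.exp(theta))


def f(a: float, theta: float, p: float) -> float:
    """f_a(theta) = a*theta - log M(theta)."""
    return a * theta - log_mgf_bernoulli(theta, p)


p = 0.3                                             # hypothetical parameter, so mu = p
mu = p
thetas = [(k - 2000) / 100 for k in range(4001)]    # grid on [-20, 20] containing 0

gaps = {}
for k in range(1, 20):
    a = k / 20
    full = max(f(a, t, p) for t in thetas)
    # For a >= mu only theta >= 0 should matter; for a <= mu only theta <= 0.
    if a >= mu:
        half = max(f(a, t, p) for t in thetas if t >= 0)
    else:
        half = max(f(a, t, p) for t in thetas if t <= 0)
    gaps[a] = full - half

assert all(abs(g) < 1e-12 for g in gaps.values())
```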

Bullet point (iii): This is where I am lost. I know that $M'(\theta)$ exists for every $\theta$ in the interior of $D(M)$ (for those unaware of how to prove this, find an appropriate dominating function for the difference quotient and apply dominated convergence), and that there $M'(\theta) = E(Xe^{\theta X})$. This covers every $\theta \in \mathbb{R}$ when $D(M) = \mathbb{R}$; note that $X \in L^1$ alone does not guarantee this, since e.g. for $X \sim \mathrm{Exp}(1)$ we have $D(M) = (-\infty, 1)$. So for the last part of (iii), note that $f_a(\theta)$ is concave in $\theta$ (because $\log M$ is convex, by Hölder's inequality) and differentiable on the interior of $D(M)$, so any critical point is a global maximum. Thus, it suffices to find $\theta$ such that $f_a'(\theta) = 0$, i.e. $$a - \frac{M'(\theta)}{M(\theta)} = 0.$$
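Numerically, the stationarity equation can be solved by bisection, because $M'/M = (\log M)'$ is non-decreasing when $\log M$ is convex. The sketch below assumes, purely for illustration, $X \sim \text{Bernoulli}(p)$ with hypothetical values $p = 0.3$, $a = 0.6$, and a hypothetical search interval $[-50, 50]$; it then evaluates $f_a$ at the root and cross-checks against the closed forms $\theta = \log\frac{a(1-p)}{p(1-a)}$ and $I(a) = a\log(a/p) + (1-a)\log\frac{1-a}{1-p}$.

```python
import math


def log_mgf(theta: float, p: float) -> float:
    """log M(theta) for X ~ Bernoulli(p), where M(theta) = 1 - p + p*e^theta."""
    return math.log(1.0 - p + p * math.exp(theta))


def mgf_ratio(theta: float, p: float) -> float:
    """M'(theta)/M(theta) = p*e^theta / (1 - p + p*e^theta) for Bernoulli(p)."""
    e = p * math.exp(theta)
    return e / (1.0 - p + e)


def solve_stationary(a: float, p: float, lo: float = -50.0, hi: float = 50.0,
                     iters: int = 200) -> float:
    """Bisection for a = M'(theta)/M(theta).

    Valid because M'/M = (log M)' is non-decreasing (log M is convex); we only
    search when the ratio brackets a on [lo, hi], and raise otherwise.
    """
    if not (mgf_ratio(lo, p) < a < mgf_ratio(hi, p)):
        raise ValueError("a is not bracketed by M'/M on [lo, hi]")
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mgf_ratio(mid, p) < a:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


p, a = 0.3, 0.6                                     # hypothetical values
theta_star = solve_stationary(a, p)
I_a = a * theta_star - log_mgf(theta_star, p)       # value of f_a at its critical point

# Closed forms for Bernoulli(p), used only as a cross-check.
theta_exact = math.log(a * (1 - p) / (p * (1 - a)))
I_exact = a * math.log(a / p) + (1 - a) * math.log((1 - a) / (1 - p))
assert abs(theta_star - theta_exact) < 1e-9
assert abs(I_a - I_exact) < 1e-9
```

For Bernoulli$(p)$ the ratio $M'/M$ only takes values in $(0, 1)$, so the bracket check makes `solve_stationary(1.5, 0.3)` raise `ValueError` instead of returning a spurious root.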

This would actually solve the whole question: it would give $I(a) = a\theta - \log M(\theta) < \infty$ for every $a \in \mathbb{R}$, so $I$ would be a convex function that is finite at every point of $\mathbb{R}$ (and thus finite on any open interval $(a,b)$), and therefore continuous (see, for example, the question "Proof of 'every convex function is continuous'" for a proof of this fact). I just need help with the existence of such a $\theta$. Please help if you can!

(As a side note, the fact that this is an exercise in a set of lecture notes is genuinely, utterly cruel.)