I'm studying pattern recognition and statistics, and almost every book I open on the subject brings up the concept of the Mahalanobis distance. The books give somewhat intuitive explanations, but not good enough for me to really understand what is going on. If someone asked me "What is the Mahalanobis distance?", I could only answer: "It's this nice thing which measures distance of some kind." :)

The definitions usually also involve eigenvectors and eigenvalues, which I have trouble connecting to the Mahalanobis distance. I understand the definitions of eigenvectors and eigenvalues, but how are they related to the Mahalanobis distance? Does it have something to do with changing the basis in linear algebra?
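For concreteness, the textbook definition is $D_M(x) = \sqrt{(x-\mu)^T \Sigma^{-1} (x-\mu)}$, where $\mu$ is the mean and $\Sigma$ the covariance matrix. The NumPy sketch below (the function names and the 2-D mean, covariance, and point are made up for illustration) computes it two ways: directly with $\Sigma^{-1}$, and by writing $\Sigma = V \Lambda V^T$, rotating $x-\mu$ into the eigenvector basis, dividing each coordinate by $\sqrt{\lambda_i}$, and taking the ordinary Euclidean length. The two agree numerically, which is the eigenvector/change-of-basis connection the question is asking about intuitively.

```python
import numpy as np

def mahalanobis_direct(x, mu, cov):
    """Mahalanobis distance using the inverse covariance matrix."""
    d = x - mu
    return float(np.sqrt(d @ np.linalg.inv(cov) @ d))

def mahalanobis_eigen(x, mu, cov):
    """Same distance via the eigendecomposition cov = V diag(lam) V^T.

    Rotate x - mu into the eigenvector basis, divide each coordinate by
    the standard deviation sqrt(lam_i) along that axis, then take the
    plain Euclidean length of the result.
    """
    lam, V = np.linalg.eigh(cov)   # eigenvalues and orthonormal eigenvectors (columns of V)
    z = V.T @ (x - mu)             # coordinates of x - mu in the eigenvector basis
    return float(np.linalg.norm(z / np.sqrt(lam)))

# Hypothetical 2-D example with correlated coordinates.
mu = np.array([0.0, 0.0])
cov = np.array([[4.0, 1.5],
                [1.5, 1.0]])
x = np.array([2.0, 1.0])

print(mahalanobis_direct(x, mu, cov))
print(mahalanobis_eigen(x, mu, cov))
print(np.linalg.norm(x - mu))  # ordinary Euclidean distance, for comparison
```

Here the point lies roughly along the direction in which the data spread the most, so its Mahalanobis distance comes out smaller than its Euclidean distance.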

I have also read these earlier questions on the subject:

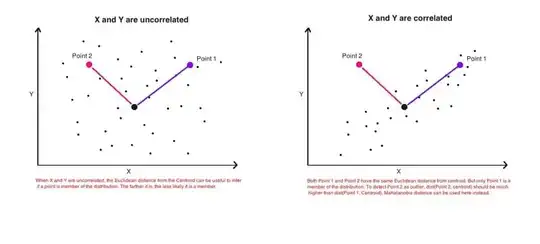

Intuitive explanations for Gaussian distribution function and mahalanobis distance

http://www.jennessent.com/arcview/mahalanobis_description.htm

The answers are good and the pictures nice, but I still don't really get it. I have an idea, but I'm still in the dark. Can someone give a "how would you explain it to your grandma" explanation so that I can finally wrap this up and never again wonder what the heck a Mahalanobis distance is? :) Where does it come from, what is it, and why?

I will post this question on two different forums so that more people have a chance to answer it; I think many other people besides me might be interested too. :)

Thank you in advance for help!