Imagine that we have a family of probability distributions with p.d.f. $f_{\theta}(z)$, where $\theta \in \Theta$. We also know that the parameters satisfy a linear constraint. As a consequence, we can restrict attention to a nested model with p.d.f. $f_{\theta}(z)$, where $\theta \in \Theta_{0} \subseteq \Theta$.

Formally, the situation is: \begin{align} \Theta \subseteq \mathbb{R}^p,~~~~ h:\mathbb{R}^p \to \mathbb{R}^{p-q},~~~~ \Theta_{0} = \{\theta \in \Theta : h(\theta)=0\}, \end{align} where $h$ is a linear map onto $\mathbb{R}^{p-q}$, so we can write $h(\theta) = A\theta$ and the constraint becomes \begin{align} A\theta = 0, \end{align} where $A$ is the $(p-q) \times p$ matrix of the linear map $h$ (of full row rank $p-q$, since $h$ is onto).
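To make the dimension count concrete, here is a minimal NumPy sketch; the helper `null_space_basis` and the example matrix `A` are hypothetical, chosen only for illustration. It computes an orthonormal basis $B$ of $\ker A$ from the SVD of $A$. Every $\theta$ with $A\theta = 0$ can then be written as $\theta = B\beta$ with $\beta \in \mathbb{R}^q$.

```python
import numpy as np

def null_space_basis(A, tol=1e-10):
    """Hypothetical helper: orthonormal basis (as columns) of ker A = {theta : A theta = 0}."""
    A = np.atleast_2d(np.asarray(A, dtype=float))
    # Rows of vt beyond the numerical rank of A span its null space.
    _, s, vt = np.linalg.svd(A)
    rank = int(np.sum(s > tol))
    return vt[rank:].T

# Hypothetical example: p = 3 parameters and one constraint theta_1 - theta_2 = 0,
# so A is 1 x 3, p - q = 1 and therefore q = 2.
A = np.array([[1.0, -1.0, 0.0]])
B = null_space_basis(A)
print(B.shape)                 # (3, 2): ker A is a 2-dimensional subspace of R^3
print(np.allclose(A @ B, 0))   # True

# Any beta in R^q maps to a parameter vector that satisfies the constraint.
beta = np.array([0.5, -2.0])
theta = B @ beta
print(np.allclose(A @ theta, 0))  # True
```

The map $\beta \mapsto B\beta$ is the usual way to reparameterize the restricted model by $q$ free parameters.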

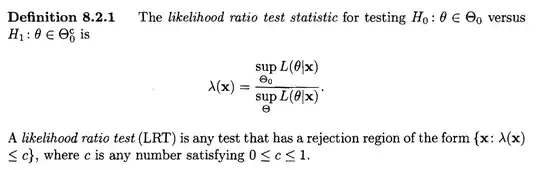

As a result, $\Theta_{0}$ lies in the $q$-dimensional subspace $\ker A$ of $\mathbb{R}^p$ (since $h$ is onto, $A$ has rank $p-q$), so $\Theta_{0}$ can be identified with a subset of $\mathbb{R}^q$.

HERE BEGINS MY PROBLEM. I would be very grateful if someone could explain why we can now conclude that \begin{align} \sup \limits_{\theta \in \Theta_{1}}f_{\theta}(z) \overset{\huge{?}}{=} \sup \limits_{\theta \in \Theta}f_{\theta}(z), \end{align} where $\Theta_{1} = \Theta \setminus \Theta_{0}$ is the parameter set under the alternative. Consequently, the likelihood ratio test statistic would be \begin{align} \lambda(z) = \frac{\sup_{\theta \in \Theta_{1}}f_{\theta}(z)}{\sup_{\theta \in \Theta_{0}}f_{\theta}(z)}\overset{\huge{?}}{=} \frac{\sup_{\theta \in \Theta}f_{\theta}(z)}{\sup_{\theta \in \Theta_{0}}f_{\theta}(z)}. \end{align}
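For a concrete instance of the right-hand form of $\lambda(z)$, here is a sketch under an assumed model that is not part of the question: a single observation $z \sim N(\theta, I_p)$ with $\Theta = \mathbb{R}^p$. In that model the supremum over $\Theta$ is attained at $\hat\theta = z$, and the supremum over $\Theta_0 = \ker A$ at the orthogonal projection of $z$ onto $\ker A$, so $2\log\lambda(z)$ equals the squared distance from $z$ to $\Theta_0$. The function name `gaussian_lrt_log_lambda` is hypothetical.

```python
import numpy as np

def gaussian_lrt_log_lambda(z, A):
    """Hypothetical helper: log lambda(z) for z ~ N(theta, I_p), H0: A theta = 0.

    Numerator: sup over Theta = R^p, attained at theta_hat = z.
    Denominator: sup over ker A, attained at the orthogonal projection of z onto ker A.
    """
    z = np.asarray(z, dtype=float)
    A = np.atleast_2d(np.asarray(A, dtype=float))
    p = z.size
    # Orthogonal projector onto ker A; assumes A has full row rank p - q,
    # so that A A^T is invertible.
    P0 = np.eye(p) - A.T @ np.linalg.solve(A @ A.T, A)
    theta_hat = z
    theta0_hat = P0 @ z

    def loglik(theta):
        # Gaussian log-density with identity covariance, up to an additive constant.
        return -0.5 * np.sum((z - theta) ** 2)

    return loglik(theta_hat) - loglik(theta0_hat)

z = np.array([1.0, 3.0, -0.5])
A = np.array([[1.0, -1.0, 0.0]])     # constraint theta_1 = theta_2
print(gaussian_lrt_log_lambda(z, A))  # 1.0
```

Here the projection of $z$ onto $\ker A$ is $(2, 2, -0.5)$, so $2\log\lambda(z) = \|(-1, 1, 0)\|^2 = 2$.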