This is also related to an older thread on MSE ("what is the half derivative of zeta at zero?").

One of the possible steps in the problem of that thread was to evaluate the series

$$s_a=\eta^{(0.5)}(0) = i \left(\sqrt{\ln(1)}-\sqrt{\ln(2)}+\sqrt{\ln(3)}-\sqrt{\ln(4)} + \cdots \right) \underset{\mathfrak E}{\approx} - 0.347006596200 \, i $$ as the value (regularized by Euler summation $\mathfrak E$) of the half-derivative of the alternating zeta function (Dirichlet's eta). Note that the additional imaginary factor $i$ arises because the terms originally had negative values under the square roots: $\sqrt{-\ln(n)} = i\sqrt{\ln(n)}$. In the following I'll leave this factor out for convenience.
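
To get a feeling for the alternating value, here is a minimal sketch that regularizes $\sum_{k\ge1}(-1)^{k+1}\sqrt{\ln(k)}$ by repeatedly averaging consecutive partial sums, which is an Euler-type summation. This is my own illustration, not necessarily the exact procedure used in the linked thread. The function name `euler_averaged_alternating_sum` and the parameters `n_terms` and `depth` are hypothetical, untuned choices.

```python
import math


def euler_averaged_alternating_sum(term, n_terms=200, depth=100):
    """Regularize sum_{k>=1} (-1)**(k+1) * term(k) by repeated averaging.

    Each pass replaces the partial sums S_n by (S_n + S_{n+1}) / 2, which
    damps the oscillation of a divergent alternating series (Euler-type
    summation). n_terms and depth are illustrative, untuned choices.
    """
    partial_sums = []
    s = 0.0
    for k in range(1, n_terms + 1):
        s += (-1) ** (k + 1) * term(k)
        partial_sums.append(s)
    for _ in range(depth):
        partial_sums = [(a + b) / 2 for a, b in zip(partial_sums, partial_sums[1:])]
    return partial_sums[-1]


# The imaginary factor i is left out, as in the text.
s_a = euler_averaged_alternating_sum(lambda k: math.sqrt(math.log(k)))
print(f"{s_a:.12f}")
```

If the sketch is adequate, the printed value should agree with $-0.347006596200$ to several digits. The same routine returns $\frac12$ for $1-1+1-\cdots$ and $\ln 2$ for $1-\frac12+\frac13-\cdots$.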

Q: But how can I evaluate the non-alternating series $$ s_p=\zeta^{(0.5)}(0)= i \left( \sqrt{\ln(1)}+\sqrt{\ln(2)}+\sqrt{\ln(3)}+\sqrt{\ln(4)}+ \cdots \right) \underset{\mathfrak{???}}{\approx} ??? $$ So far I don't see any way to do this, for instance along the lines of L. Euler's famous $\eta \to \zeta$ conversion.

[update]: I have since applied a procedure to find a formal power series for the finite sums of consecutive terms.

The procedure approximates the Neumann series of the Carleman matrix of the function $f(x) = \sqrt{\ln(\exp(x^2)+1)}$, which is the transfer function that maps the term of the series at index $n$ to the term at index $n+1$.

I'll now explain this in more detail:

For this procedure, which is also known as "indefinite summation", we first need a function that generates the terms of the series as its own iterates. Which function maps $\sqrt{\ln(x)}$ to $\sqrt{\ln(x+1)}$? It is $f(x)$ as given above: since $\exp\left(\sqrt{\ln(x)}^2\right) = x$, we get $f(\sqrt{\ln(x)}) = \sqrt{\ln(x+1)}$. For instance, for $z= \sqrt{\ln(5)}$ we get $f(z) = \sqrt{\ln(6)}$, $f^{\circ 2}(z) = \sqrt{\ln(7)}$, and so on.
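
The transfer property is easy to check numerically. Here is a minimal Python sketch: `f` just transcribes the formula above, and the starting point $\sqrt{\ln(5)}$ is the example from the text.

```python
import math


def f(x):
    """Transfer function f(x) = sqrt(ln(exp(x^2) + 1)): maps sqrt(ln n) to sqrt(ln(n+1))."""
    return math.sqrt(math.log(math.exp(x * x) + 1))


z = math.sqrt(math.log(5))
for n in range(6, 10):
    z = f(z)  # after this step z should equal sqrt(ln n)
    print(n, z, math.sqrt(math.log(n)))
```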

So we can formally write the series $$ s_p = i \cdot \left( z + f(z) + f^{\circ 2}(z) + f^{\circ 3}(z) + \cdots \right) \qquad \text{with } z=\sqrt{\ln(1)}=0 $$

An approach I have used a couple of times is to represent $f$ by a Carleman matrix built from its formal power series. That power series is

$$ \text{Taylor}(f(x)) \approx 0.83255461 + 0.30028060\, x^2 + 0.020918484\, x^4 - 0.0075447481\, x^6 + \cdots $$
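
These coefficients can be reproduced with elementary formal-power-series arithmetic. Since $f(x) = g(x^2)$ with $g(u) = \sqrt{\ln(e^u+1)}$, it suffices to expand $g$ in $u$. The steps are to form $e^u+1$, take the logarithm via $(\ln h)' = h'/h$, and then take the square root by the usual recurrence. This is a minimal floating-point sketch, and the helper names are my own.

```python
import math


def series_exp(n):
    """Coefficients of e^u up to u^(n-1)."""
    return [1.0 / math.factorial(k) for k in range(n)]


def series_div(a, b):
    """Coefficients of a(u)/b(u), truncated to len(a) terms; needs b[0] != 0."""
    q = []
    for n in range(len(a)):
        s = a[n] - sum(b[k] * q[n - k] for k in range(1, min(n, len(b) - 1) + 1))
        q.append(s / b[0])
    return q


def series_log(h):
    """log(h(u)) via (log h)' = h'/h, integrated termwise; needs h[0] > 0."""
    n = len(h)
    dh = [(k + 1) * h[k + 1] for k in range(n - 1)]  # coefficients of h'(u)
    q = series_div(dh, h)                            # h'/h with n-1 terms
    return [math.log(h[0])] + [q[k] / (k + 1) for k in range(n - 1)]


def series_sqrt(s):
    """r(u) with r^2 = s: r_0 = sqrt(s_0), r_n = (s_n - sum_{k=1}^{n-1} r_k r_{n-k}) / (2 r_0)."""
    r = [math.sqrt(s[0])]
    for n in range(1, len(s)):
        acc = sum(r[k] * r[n - k] for k in range(1, n))
        r.append((s[n] - acc) / (2 * r[0]))
    return r


def taylor_f(n):
    """Coefficients c_k of f(x) = sum_k c_k x^(2k), for k = 0..n-1."""
    h = series_exp(n)
    h[0] += 1.0  # e^u + 1
    return series_sqrt(series_log(h))


print(taylor_f(4))  # coefficients of x^0, x^2, x^4, x^6
```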

Now let $C = \text{carleman}(f)$ be the Carleman matrix of $f(x)$, so that column $k$ holds the power-series coefficients of $f(x)^k$. Then the product of the row vector $V(x)=[1,x,x^2,x^3,x^4,\ldots]$ with $C$ gives $V(x) \cdot C= V(f(x))$ by definition. Looking only at the second column of $C$, we have $V(x) \cdot C_{0..\infty,1} = f(x)$, at least as a formal power series, and where this converges (for small $x$) we can also evaluate it numerically.
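
To make the row-vector convention concrete, here is a minimal sketch of a truncated $n\times n$ Carleman matrix built from a finite list of coefficients. As an assumption for illustration, it uses only the four printed coefficients above. It is therefore the Carleman matrix of that degree-6 polynomial $p$, not of $f$ itself. The helper names are hypothetical.

```python
def poly_mul(a, b, n):
    """Product of two coefficient lists, truncated to degree n-1."""
    out = [0.0] * n
    for i, ai in enumerate(a[:n]):
        for j, bj in enumerate(b[: n - i]):
            out[i + j] += ai * bj
    return out


def carleman(coeffs, n):
    """Truncated Carleman matrix: C[j][k] = coefficient of x^j in p(x)^k."""
    cols = []
    power = [1.0] + [0.0] * (n - 1)  # p(x)^0 = 1
    for _ in range(n):
        cols.append(power)
        power = poly_mul(power, coeffs, n)
    return [[cols[k][j] for k in range(n)] for j in range(n)]  # transpose to rows


def row_times_matrix(v, m):
    """Row vector v times matrix m."""
    return [sum(v[j] * m[j][k] for j in range(len(v))) for k in range(len(m[0]))]


# Printed coefficients of f in powers of x (odd powers are zero), used as a stand-in.
p = [0.83255461, 0.0, 0.30028060, 0.0, 0.020918484, 0.0, -0.0075447481]
n = 16
C = carleman(p, n)
x = 0.3
V = [x**j for j in range(n)]
VC = row_times_matrix(V, C)
px = sum(c * x**j for j, c in enumerate(p))
print(VC[0], VC[1], px)  # V(x)*C begins with 1, p(x), ...
print(VC[2], px**2)      # ... followed by p(x)^2 (exact here, since deg p^2 = 12 < n)
```

Columns with $k \ge 3$ are truncated at degree $n-1$, so for those columns $V(x)\cdot C$ only approximates $p(x)^k$ for small $x$.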

Now the idea of the Neumann series comes into play. Since $V(x) \cdot C_{0..\infty,1} = f(x)$, we should formally also have $V(x) \cdot C^2_{0..\infty,1} = f^{\circ 2}(x)$, $V(x) \cdot C^3_{0..\infty,1} = f^{\circ 3}(x)$, and so on. This suggests the ansatz $$ V(z) \cdot \left( C^0 + C^1 + C^2 + C^3 + \cdots \right)_{0..\infty,1} \overset?= z + f(z) + f^{\circ 2}(z) + f^{\circ 3}(z) + \cdots $$ The key observation here is that the parenthesis on the left-hand side is the geometric series of the matrix $C$ (such a construct is also called a "Neumann series"). We cannot expect this to be a proper (convergent) sum. However, for some examples of alternating geometric series I could get meaningful approximations by using empirical approximations to $B = (I+C)^{-1}$ and then evaluating, for instance, $V(z) \underset{\mathfrak E}\cdot B_{0..\infty,1} \approx z - f(z) + f^{\circ 2}(z) - \cdots$, where $\mathfrak E$ denotes Euler summation in the dot product if needed.
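
The alternating construction can be tried exactly on the simplest example, $f(x) = x+1$. In this convention its Carleman matrix is the upper-triangular Pascal matrix $P$ with $P_{j,k} = \binom{k}{j}$. Here $P$ stands in for $C$ (an illustrative assumption, not the $f$ of this question). Because $I+P$ is triangular, its truncations invert exactly. Each column $m$ of $B = (I+P)^{-1}$ defines $g_m(x) = V(x)\cdot B_{0..\infty,m}$ with $g_m(x) + g_m(x+1) = x^m$. With $z=0$, the top row $V(0)\cdot B$ should therefore give the Euler-summed values of $0^m - 1^m + 2^m - 3^m + \cdots$. Here is a minimal sketch with exact rationals, using hypothetical helper names:

```python
from fractions import Fraction
from math import comb


def pascal(n):
    """Truncated Carleman matrix of f(x) = x + 1 (row-vector convention): P[j][k] = C(k, j)."""
    return [[Fraction(comb(k, j)) for k in range(n)] for j in range(n)]


def inverse(m):
    """Exact Gauss-Jordan inverse of a square matrix of Fractions (StopIteration if singular)."""
    n = len(m)
    # Augment [m | I] and reduce the left half to the identity.
    a = [list(row) + [Fraction(int(i == j)) for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if a[r][col] != 0)
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [v / p for v in a[col]]
        for r in range(n):
            if r != col and a[r][col] != 0:
                factor = a[r][col]
                a[r] = [vr - factor * vc for vr, vc in zip(a[r], a[col])]
    return [row[n:] for row in a]


n = 8
P = pascal(n)
I_plus_P = [[P[j][k] + int(j == k) for k in range(n)] for j in range(n)]
B = inverse(I_plus_P)
# Row 0 of B is V(0)*B: Euler-summed values of 0^m - 1^m + 2^m - 3^m + ...
print([str(v) for v in B[0]])
```

For example, the entry for $m=0$ is $\frac12$ (Grandi's series), and the entry for $m=1$ is $-\frac14$, the familiar value of $0 - 1 + 2 - 3 + \cdots$.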

This is not as straightforward for the non-alternating geometric series. The first column of any Carleman matrix is $[1,0,0,\ldots]^T$ (the coefficients of $f(x)^0=1$), so $C$ has the eigenvalue $1$ (at least once) by construction and the first column of $I - C$ vanishes. We would effectively divide by zero, so we cannot directly approximate $A = ( I - C)^{-1}$ with finitely truncated matrices. One workaround, which sometimes gives meaningful results, is to omit the (zero) first column of $(I - C)$. This gives some result, but the first row of that result is then systematically missing/unknown.

Remark: a case where this workaround succeeds is the problem of sums of like powers, where the Carleman matrix (of $f(x)=x+1$) is the upper-triangular Pascal matrix $P$. Removing the empty first column in $Q = (I - P)_{0..(n-1),1..n}$ allows inversion and yields the matrix $Q^{-1}$ of coefficients with which Faulhaber solved the sums-of-like-powers problem. As far as I know, the same ansatz was also tried by two authors to extend tetration to real iteration heights. I have similarly attempted some other series problems with such "iteration series" of iterated functions $f^{\circ h}(x)$ and their Neumann series, and obtained meaningful approximations.
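
The Pascal-matrix case of this remark can be checked exactly. Here is a minimal sketch with exact rationals and hypothetical helper names. It builds $Q=(I-P)_{0..n-1,1..n}$ and inverts it. It then reads column $m$ of $Q^{-1}$ as the coefficients of $x^1,\ldots,x^n$ of a polynomial $g_m$ with $g_m(x)-g_m(x+1)=x^m$, so that $\sum_{k=n_1}^{n_2-1}k^m = g_m(n_1)-g_m(n_2)$. The constant term of each $g_m$ corresponds to the missing first row and cancels in these differences.

```python
from fractions import Fraction
from math import comb


def inverse(m):
    """Exact Gauss-Jordan inverse of a square matrix of Fractions (StopIteration if singular)."""
    n = len(m)
    a = [list(row) + [Fraction(int(i == j)) for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if a[r][col] != 0)
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [v / p for v in a[col]]
        for r in range(n):
            if r != col and a[r][col] != 0:
                factor = a[r][col]
                a[r] = [vr - factor * vc for vr, vc in zip(a[r], a[col])]
    return [row[n:] for row in a]


def faulhaber_matrix(n):
    """Q = (I - P) restricted to rows 0..n-1 and columns 1..n, with P[j][k] = C(k, j)."""
    return [[Fraction(int(j == k) - comb(k, j)) for k in range(1, n + 1)] for j in range(n)]


def g(m, x, A):
    """g_m(x) = sum_{r=1}^{n} A[r-1][m] * x^r (the unknown constant term is omitted)."""
    return sum(A[r - 1][m] * Fraction(x) ** r for r in range(1, len(A) + 1))


n = 8
A_star = inverse(faulhaber_matrix(n))
print([str(A_star[r][1]) for r in range(n)])  # coefficients of x^1..x^n for m = 1
print(g(2, 1, A_star) - g(2, 11, A_star), sum(k**2 for k in range(1, 11)))
```

Column $m=1$ comes out as $\frac12, -\frac12, 0, \ldots$, i.e. $g_1(x) = \frac{x}{2} - \frac{x^2}{2}$, and indeed $g_1(x) - g_1(x+1) = x$.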

So I have now tested $\,_n Q = (I - C)_{0..n-1,1..n}$ with increasing $n$, computed $\,_nA^* = \,_n Q^{-1}$, and formed $\,_nA$ by inserting the unknown first row into $\,_nA^*$. The top-left of the heuristically approximated matrix $\,_nA$ is $$\small \,_nA_{0..16,0..1}=\begin{bmatrix} ?? & ?? \\ 0 & 1 \\ -1 & -0.662055270527 \\ 0 & 0 \\ -\frac1{2!} & -0.561866397242 \\ 0 & 0 \\ -\frac1{3!} & -0.249581408503 \\ 0 & 0 \\ -\frac1{4!} & -0.0755503260124 \\ 0 & 0 \\ -\frac1{5!} & -0.0172091887343 \\ 0 & 0 \\ -\frac1{6!} & -0.00315760368955 \\ 0 & 0 \\ -\frac1{7!} & -0.000499047959470 \\ 0 & 0 \\ -\frac1{8!} & -0.0000687607442729 \\ \vdots & \vdots \end{bmatrix} $$ Using the coefficients of the first column as a power series in $x$, which defines the function $a_0(x)$, we get $$ a_0( \sqrt{\ln(n_1)})-a_0( \sqrt{\ln(n_2)}) = n_2 - n_1 $$ which is the sum of the terms $\left(f^{\circ k}(\sqrt{\ln(n_1)})\right)^0 = 1$, i.e. it just counts the terms.
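
If the pattern $-\frac1{k!}$ in the first column continues, $a_0$ has a closed form, and the counting identity can be checked directly. The following short derivation assumes that the unknown constant term is simply dropped:

$$ a_0(x) = -\sum_{k\ge 1}\frac{x^{2k}}{k!} = 1 - e^{x^2}, \qquad a_0(x) - a_0(f(x)) = e^{f(x)^2} - e^{x^2} = \left(e^{x^2}+1\right) - e^{x^2} = 1 $$

Since $a_0(\sqrt{\ln(n)}) = 1 - n$, this gives

$$ a_0(\sqrt{\ln(n_1)}) - a_0(\sqrt{\ln(n_2)}) = (1-n_1) - (1-n_2) = n_2 - n_1 . $$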

Using the coefficients $a_{k,1}$ of the second column as a power series in $x$, which defines the function $a_1(x)$, we get the finite sums of the terms at consecutive arguments:

$$ a_1( \sqrt{\ln(n_1)})-a_1( \sqrt{\ln(n_2)}) = \sum_{k=1}^\infty (\sqrt{\ln(n_1)}^k - \sqrt{\ln(n_2)}^k )a_{k,1} = \sum_{k=n_1}^{n_2-1} \sqrt{\ln (k)} $$

which is the sum of the terms $f^{\circ k}(\sqrt{\ln(n_1)})$ for $k = 0, \ldots, n_2-n_1-1$. These are exactly the desired finite partial sums of $s_p$, obtained to very good (and seemingly arbitrary) numerical accuracy.
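
The reason the second column produces these finite sums can be written out as a short derivation. It assumes that the truncated inverses converge to a solution of $(I-C)\,A = I$. The unknown first row of $A$ drops out of this product because the first column of $I-C$ vanishes. Since $V(x)\,(I-C) = V(x) - V(f(x))$:

$$ V(x)\,(I - C)\,A_{0..\infty,1} = x \quad\Longrightarrow\quad a_1(x) - a_1(f(x)) = x $$

$$ a_1(\sqrt{\ln(n_1)}) - a_1(\sqrt{\ln(n_2)}) = \sum_{k=0}^{n_2-n_1-1} \left[a_1\!\left(f^{\circ k}(\sqrt{\ln(n_1)})\right) - a_1\!\left(f^{\circ (k+1)}(\sqrt{\ln(n_1)})\right)\right] = \sum_{k=n_1}^{n_2-1}\sqrt{\ln(k)} $$

In particular, the constant term of $a_1$ cancels in every such difference.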

------ End of lengthy explanation

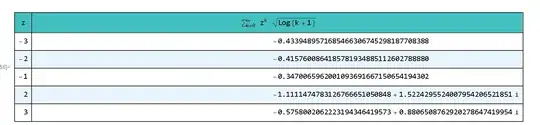

Q: However, I'm missing the first coefficient (the constant term) of the second power series $a_1(x)$. It should contain exactly the representative value of the infinite sum of $\sqrt{\ln(k)}$ for $k=1, \ldots, \infty$.