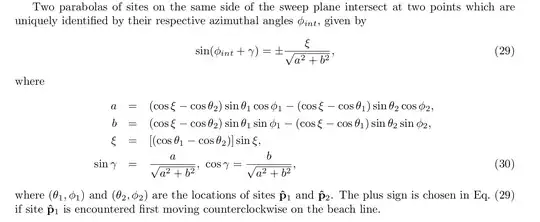

I'm trying to implement the algorithm in this paper, which describes an implementation of Fortune's algorithm on a sphere, and I'm getting hung up on the math explaining how to calculate the intersection of two parabolas on a sphere. Here's the section I'm struggling with:

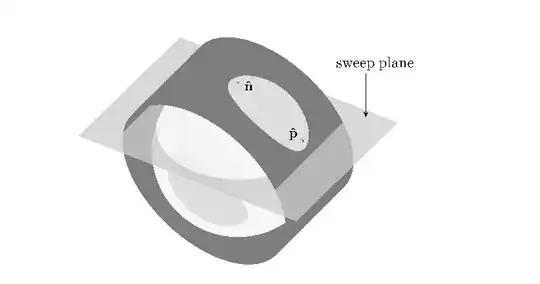

For context (and so you don't have to read the paper), $(\theta, \phi)$ are the coordinates of a point on the sphere. $\theta$ is the inclination (the angle between the point and the north pole), and $\phi$ is the azimuth (the angle between the point's meridian and an arbitrary reference meridian). Parabolas in this context are defined as the locus of points equidistant from a fixed point (the site) $(\theta, \phi)$ and the sweep line $L$. $L$ isn't a line in the geodesic sense; rather, it is the locus of all points with an inclination of $\xi$, i.e. a circle of latitude. You can see an illustration of this (from the paper) below:

My question is twofold:

1. Why is $\xi$ redefined when it is already a known value, and how (and why) is it being redefined in terms of itself?

2. Ultimately I'm looking for the value of $\phi_{int}$, but it is buried inside a $\sin$ function, so it looks like I'd need $\arcsin$ to get it out. Since $\arcsin$ only returns values in $[-\pi/2, \pi/2]$, I'm worried about getting a result in the wrong range. Can the equation be reworked to put $\phi_{int}$ alone on the left of an equals sign? I've tried reworking it, but my knowledge of trigonometry has declined rapidly in the years since high school.
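A possible rework for the second question, sketched under my own assumptions rather than taken from the paper (so its notation and conventions may differ): assume a unit sphere, great-circle distance, and that a point's distance to $L$ is $|\xi - \theta|$, measured along its meridian. The spherical law of cosines then gives the parabola of a site $(\theta_s, \phi_s)$ as

$$\cot\theta = \frac{\sin\theta_s\cos(\phi - \phi_s) - \sin\xi}{\cos\xi - \cos\theta_s}.$$

Equating this for two sites and expanding $\cos(\phi - \phi_s)$ gives $A\cos\phi + B\sin\phi = C$, which is the same as $R\sin(\phi + \gamma) = C$ with $R = \sqrt{A^2 + B^2}$ and $\gamma = \operatorname{atan2}(A, B)$. The arcsine range stops being a problem once both branches are kept: $\sin x = C/R$ has the two solutions $x = \arcsin(C/R)$ and $x = \pi - \arcsin(C/R)$ per turn. The function name `parabola_intersections` is hypothetical; it returns both candidate values of $\phi_{int}$, reduced to $[0, 2\pi)$:

```python
import math


def parabola_intersections(site_a, site_b, xi):
    """Return the azimuths where the spherical parabolas of two sites cross.

    Hypothetical helper (not from the paper). site_a and site_b are
    (theta, phi) pairs in radians, theta being the inclination from the
    north pole; xi is the inclination of the sweep line L. Each parabola
    is ASSUMED to satisfy
        cot(theta) = (sin(ts)*cos(phi - ps) - sin(xi)) / (cos(xi) - cos(ts)),
    i.e. great-circle distance to the site equals |xi - theta|.
    Returns a sorted list of 0, 1 or 2 azimuths in [0, 2*pi).
    """
    ta, pa = site_a
    tb, pb = site_b
    # Denominators of the two cot(theta) expressions (zero if a site is on L).
    da = math.cos(xi) - math.cos(ta)
    db = math.cos(xi) - math.cos(tb)
    # Equating the two expressions and cross-multiplying gives
    # A*cos(phi) + B*sin(phi) = C.
    A = db * math.sin(ta) * math.cos(pa) - da * math.sin(tb) * math.cos(pb)
    B = db * math.sin(ta) * math.sin(pa) - da * math.sin(tb) * math.sin(pb)
    C = (db - da) * math.sin(xi)
    R = math.hypot(A, B)
    if R == 0.0 or abs(C) > R:
        return []  # no isolated crossing at this sweep position
    # A*cos(phi) + B*sin(phi) == R*sin(phi + gamma) with gamma = atan2(A, B).
    gamma = math.atan2(A, B)
    s = math.asin(C / R)  # principal value, in [-pi/2, pi/2]
    # sin(x) = C/R has two solutions per turn, s and pi - s; keep both.
    two_pi = 2.0 * math.pi
    return sorted({(s - gamma) % two_pi, (math.pi - s - gamma) % two_pi})
```

For example, two sites at the same inclination, `parabola_intersections((0.5, 0.0), (0.5, 1.0), 1.0)`, gives the meridian halfway between them ($\phi = 0.5$) and the one opposite it ($\phi = 0.5 + \pi$). Which of the two crossings is the breakpoint you want depends on which arc lies to the left on the beach line; the sketch does not decide that, and it also does not treat the degenerate case of a site lying exactly on $L$.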

Thanks for any insight you can offer. I'm not used to reading research papers, and this one has proven to be a little beyond me.