This is not necessarily of the best quality, but it could probably be improved with a bit of tuning.

```mathematica
kDistant[pts_List, n_] := Module[
  {objfun, len = Length[pts], ords, a, c1},
  (* n index variables a[1], ..., a[n] *)
  ords = Array[a, n];
  (* each index must be an integer in 1..len *)
  c1 = Flatten[{Map[.5 <= # <= len + .5 &, ords],
     Element[ords, Integers]}];
  (* objective: sum of Euclidean distances over all pairs of chosen points;
     only defined for explicit integer index vectors *)
  objfun[oo : {_Integer ..}] := Module[
    {ovals = Clip[oo, {1, len}], ptlist, diffs},
    ptlist = pts[[ovals]];
    diffs = Flatten[Table[
       ptlist[[j]] - ptlist[[k]], {j, n - 1}, {k, j + 1, n}], 1];
    Total[Map[Sqrt[#.#] &, diffs]]
    ];
  Round[NArgMax[{objfun[ords], c1}, ords,
    Method -> {"DifferentialEvolution", "SearchPoints" -> 40},
    MaxIterations -> 400]]
  ]
```
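Differential evolution is a stochastic heuristic, so `NArgMax` here is not guaranteed to return the best subset. On a small point set the result can be checked against exhaustive search. What follows is a minimal sketch, assuming `kDistant` as defined above; `sumDist`, `bruteBest`, `small`, `exact` and `approx` are hypothetical names introduced only for this check. Exhaustive search is feasible only while `Binomial[Length[pts], n]` stays small (1365 subsets here).

```mathematica
(* Hypothetical helper: total Euclidean distance over all pairs in a point list *)
sumDist[p_List] := Total[EuclideanDistance @@@ Subsets[p, {2}]]

(* Hypothetical helper: exhaustive search over every n-subset of indices;
   only practical when Binomial[Length[pts], n] is small *)
bruteBest[pts_List, n_Integer] :=
  First@MaximalBy[Subsets[Range[Length[pts]], {n}], sumDist[pts[[#]]] &]

SeedRandom[1];
small = RandomReal[{-10, 10}, {15, 3}];
exact = bruteBest[small, 4];
approx = kDistant[small, 4];
(* the heuristic value should not exceed the exhaustive optimum *)
{sumDist[small[[exact]]], sumDist[small[[approx]]]}
```

If the two numbers agree on several random instances, that gives some confidence that the search settings are adequate for that size.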

An example, selecting 10 points out of 100000:

```mathematica
n = 10;
len = 10^5;
pts = RandomReal[{-10, 10}, {len, 3}];
Timing[furthest = kDistant[pts, n]]

(* Out[6]= {15.85276, {34502, 90523, 79761, 66318, 53570, 18000, 80585,
   12958, 87680, 70241}} *)
```

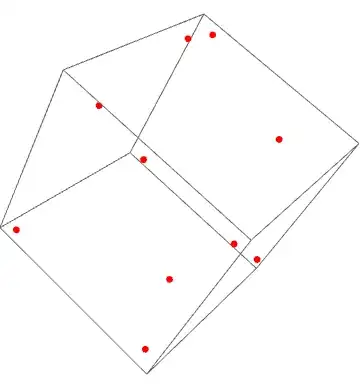

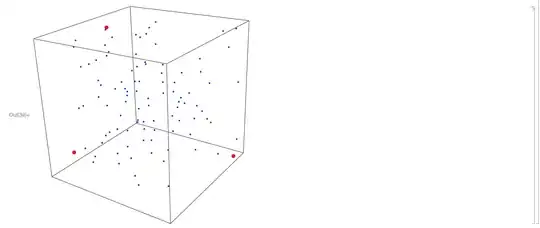

It seems to have done all right; the points tend toward the corners. Some pairs are close, but that is probably an indication that the sum-of-distances objective is not the best one to use for large k.

```mathematica
Graphics3D[{Red, PointSize[Large], Point[pts[[furthest]]]}]
```
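To put a number on the remark that some chosen pairs are close, one can look at the spread of separations within the chosen subset. What follows is a minimal sketch; `separationSummary` is a hypothetical helper, and the usage line assumes `pts` and `furthest` from the example above.

```mathematica
(* Hypothetical helper: for the points pts[[idx]], return
   {smallest separation, largest separation, closest index pair} *)
separationSummary[pts_List, idx_List] := Module[{pairs, dists},
  pairs = Subsets[idx, {2}];
  dists = Map[EuclideanDistance @@ pts[[#]] &, pairs];
  {Min[dists], Max[dists], pairs[[First@Ordering[dists, 1]]]}
  ]

(* Usage, assuming pts and furthest from the example above *)
separationSummary[pts, furthest]
```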

--- edit ---

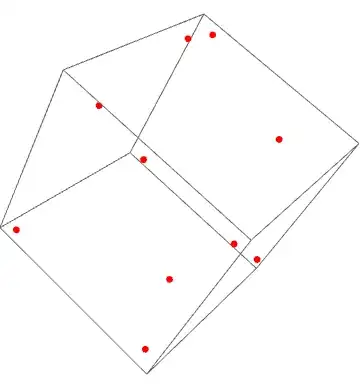

The variant below might be better for some purposes. Instead of maximizing the sum of all pairwise distances, it maximizes the sum of the minimum separations (for each chosen point, the distance to the nearest of the points listed after it), which should have the effect of pushing all points away from one another. Some experimentation led me to increase the number of iterations and also slightly raise the number of search points.

```mathematica
kDistantMins[pts_List, n_] := Module[
  {objfun, len = Length[pts], ords, a, c1},
  ords = Array[a, n];
  (* each index must be an integer in 1..len *)
  c1 = Flatten[{Map[.5 <= # <= len + .5 &, ords],
     Element[ords, Integers]}];
  objfun[oo : {_Integer ..}] := Module[
    {ovals = Clip[oo, {1, len}], ptlist, diffs, dnorms, minnorms},
    ptlist = pts[[ovals]];
    (* diffs[[j]] holds the differences between point j and points j+1..n *)
    diffs =
     Table[ptlist[[j]] - ptlist[[k]], {j, n - 1}, {k, j + 1, n}];
    dnorms = Map[Sqrt[#.#] &, diffs, {2}];
    (* distance from each point to its nearest later point, summed *)
    minnorms = Map[Min, dnorms];
    Total[minnorms]];
  Round[NArgMax[{objfun[ords], c1}, ords,
    Method -> {"DifferentialEvolution", "SearchPoints" -> 50},
    MaxIterations -> 1000]]
  ];
```

Whether this turns out to be an improvement will depend on the end goal, I guess.
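To make the difference between the two objectives concrete, here are standalone versions that take an explicit list of points instead of indices. `sumAllPairs` and `sumOfMins` are hypothetical names. They mirror the `objfun` definitions in `kDistant` and `kDistantMins`, using `EuclideanDistance` in place of `Sqrt[#.#]`. As written, the minimum-separation objective compares each point only with the points listed after it, so its value depends on the order of the points.

```mathematica
(* Hypothetical: mirrors objfun in kDistant, summing over all pairs *)
sumAllPairs[p_List] := Total[EuclideanDistance @@@ Subsets[p, {2}]]

(* Hypothetical: mirrors objfun in kDistantMins; for each point j < n, the
   distance to its nearest neighbour among points j+1..n, summed *)
sumOfMins[p_List] := Total@Table[
    Min@Table[EuclideanDistance[p[[j]], p[[k]]], {k, j + 1, Length[p]}],
    {j, Length[p] - 1}]

tri = {{0, 0}, {3, 0}, {0, 4}};   (* a 3-4-5 right triangle *)
{sumAllPairs[tri], sumOfMins[tri], sumOfMins[Reverse[tri]]}
(* {12, 8, 7} *)
```

Because `NArgMax` searches over index orderings as well, the order dependence may matter little in practice. It does mean the two objectives are not directly comparable on a fixed ordering.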

--- end edit ---

`With[{pts = {{0, 0}, {1, 1}, {1, 0}, {0, 1}}}, pts[[#]] & /@ (Position[#, Max[#]] & @ UpperTriangularize[DistanceMatrix[pts]])]`? – J. M.'s missing motivation Jul 26 '16 at 18:25

`KFN[]` makes the kernel crash when fed `RandomReal[1, {10^6, 10^3}]`. – xyz Jul 31 '16 at 09:17