Between fast but memory-hungry vectorized operations, represented by Total[Range[10^8]^2], and slow but memory-efficient top-level iteration, represented by the Do solution, there is a middle ground. One can generate the data in chunks of a specified length and apply vectorized operations to each chunk. This gives constant memory usage, determined by the chunk size, while keeping reasonable speed thanks to the vectorized operations.

Until the Streaming` module, which introduces a general framework for such operations, is finished and documented, we can implement a simple "folding in chunks" by hand.

    ClearAll[chunkFold]

    chunkFold[f_, x_, {min_Integer?Positive, max_Integer?Positive, chunkSize_Integer?Positive, getChunk_}] /; min <= max :=
      Module[{result = x, i},
        Do[
          result = f[result, getChunk[i, i + chunkSize - 1]],
          {i, min, max - chunkSize, chunkSize}
        ];
        f[result, getChunk[min + Quotient[max - min, chunkSize] chunkSize, max]]
      ]

The chunkFold function folds the given function f over successive "chunks" of data generated by getChunk. getChunk[i, j] should return the list of elements with indices from i to j. Overall, the function covers the indices from min to max in chunks of the given chunkSize; the last chunk may be shorter. Example of "symbolic" usage:

chunkFold[f, x, {3, 20, 5, getChunk}]

(* f[f[f[f[x, getChunk[3, 7]], getChunk[8, 12]], getChunk[13, 17]], getChunk[18, 20]] *)
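As a quick sanity check of the chunk boundaries, one could fold Join over Range, which should reproduce the full index range. This is only an illustrative sketch: it assumes the chunkFold definition above has been evaluated, and Join with an empty initial list is chosen purely for illustration, not because the original problem needs it.

```mathematica
(* Assumes chunkFold from above is already defined,
   and that f, x and getChunk have no values assigned. *)

(* Joining the chunks in order should reproduce every index
   from min to max exactly once. *)
chunkFold[Join, {}, {3, 20, 5, Range}] === Range[3, 20]
(* True *)

(* When the range splits evenly, the final call receives the last full chunk. *)
chunkFold[f, x, {1, 10, 5, getChunk}]
(* f[f[x, getChunk[1, 5]], getChunk[6, 10]] *)
```

The second call shows that the closing f[...] in the definition handles both a short trailing chunk and a full one, so no index is skipped or visited twice.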

In some problems, like the one in the OP, the chunk size can be used not only to control memory usage but also to limit the size of intermediate results. This can determine whether machine numbers and packed arrays suffice or whether non-machine numbers must be used.
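To make this concrete, here is a rough check for the sum-of-squares problem with elements up to 10^8. It assumes 64-bit machine integers (largest value 2^63 - 1), and the chunk lengths 900 and 1000 are example values. The sum of squares over a chunk is bounded by the chunk length times the largest square.

```mathematica
(* Assumption: machine integers are 64-bit signed, so the largest is 2^63 - 1. *)
maxMachineInteger = 2^63 - 1;

(* Upper bound on the sum of squares of 900 integers, none larger than 10^8. *)
900*(10^8)^2 < maxMachineInteger
(* True: 9*10^18 is below 2^63 - 1, which is about 9.22*10^18 *)

(* The same bound for a chunk of 1000 elements no longer fits. *)
1000*(10^8)^2 < maxMachineInteger
(* False *)
```

Under these assumptions, a chunk length of about 900 is close to the largest for which every per-chunk sum is guaranteed to stay within machine-integer range up to 10^8.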

Let's define a simple helper function that returns the timing and memory usage together with the result of evaluating a given expression:

    timeMem = Function[,
      Module[{result}, Prepend[AbsoluteTiming@MaxMemoryUsed[result = #], result]],
      HoldFirst
    ];

Now let's use our chunkFold function on the problem from the OP:

chunkFold[#1 + Tr@Power[#2, 2] &, 0, {1, 10^8, 900, Range}] // timeMem

(* {333333338333333350000000, 0.969158, 18024} *)

The result is calculated in about one second using about 18 kilobytes of memory. Compare this with a simple Do loop over the individual elements:

Module[{out = 0, i}, Do[out += i^2, {i, 10^8}]; out] // timeMem

(* {333333338333333350000000, 58.9343, 1112} *)

The Do loop takes about one minute and uses about one kilobyte.

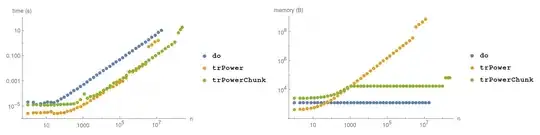

Let's check how the speed and memory usage of the different solutions depend on the total number of elements. We'll test three functions: do is a simple iteration over every element, trPower is fully vectorized, and trPowerChunk is an intermediate solution that uses vectorized functions on chunks of 900 elements.

do[n_] := Module[{out = 0, i}, Do[out += i^2, {i, n}]; out]

trPower[n_] := Tr[Range[1, n]^2]

trPowerChunk[n_] := chunkFold[#1 + Tr@Power[#2, 2] &, 0, {1, n, 900, Range}]

We see that do is the slowest but has constant, and the lowest, memory usage.

trPower is the fastest up to around 3*10^6, at which point, as explained by Michael E2, the numbers become too large to be stored as machine integers and Mathematica starts unpacking lists. Memory usage is proportional to the number of elements, with a larger proportionality constant once unpacking starts.
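As a rough consistency check on the 3*10^6 figure, one can estimate where the total sum of squares leaves machine-integer range. This sketch assumes 64-bit machine integers and that it is the accumulated total that overflows first, since the individual squares are far smaller.

```latex
\[
\sum_{i=1}^{n} i^2 = \frac{n(n+1)(2n+1)}{6} \approx \frac{n^3}{3},
\qquad
\frac{n^3}{3} > 2^{63} - 1
\;\Longrightarrow\;
n \gtrsim \left(3 \cdot 2^{63}\right)^{1/3} \approx 3.02 \times 10^{6}.
\]
```

The estimate agrees with the point at which trPower slows down.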

trPowerChunk is almost as fast as trPower before the latter starts unpacking, and it is the fastest afterward. Its memory usage grows up to n = 900, which was chosen as the chunk size, and is constant afterward. From 101176707 on, the sum of squares of 900 consecutive integers is larger than the largest machine integer, so Mathematica starts unpacking arrays and we see a jump in both time and memory usage. Memory usage is still constant after unpacking starts, but the constant is higher.

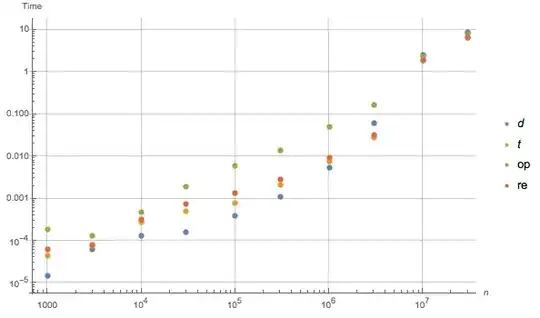

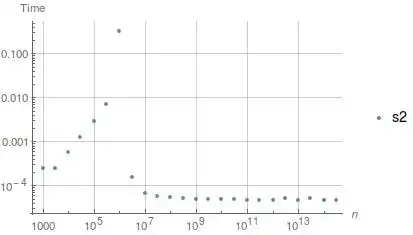

Dot isn't the fastest. – BlacKow Sep 30 '16 at 19:46

s2 timing is possibly slightly misleading. I get a longer time the first time I execute it (0.0486 seconds) and a faster time (0.00022 seconds) on subsequent calls. – mikado Sep 30 '16 at 19:47

Trace[Sum[i,{i,1,100}]] it seems to actually produce all the numbers and sum them up. – Alan Oct 01 '16 at 02:02

s2[a] and you will get 1/6 a (1 + a) (1 + 2 a).. But Trace really suggest that you are right... interesting – BlacKow Oct 01 '16 at 02:26