A common "trick" in neural network training is to train an autoencoder using a "degraded" sample as input and the original sample as target output. The layer type `DropoutLayer[]` is made for this purpose. But I would like to play with different types of noise, e.g. Gaussian noise or salt-and-pepper noise, to train a denoising autoencoder.

I can think of a few ways to implement this, but I'm not happy with any of them:

- I can add noise offline and pass the noisy and original samples to

NetTrainas input and target, respectively. But then exactly the same noise trained in every round, which probably leads to overfitting. - I can pass noisy samples to

NetTrainas above, but only train a few rounds, then restart with different noisy samples. (i.e. callNetTrainin a loop). But that seems to break a lot of the internal learning-rate heuristics inNetTrain(e.g. the momentum term is reset in every cycle) - I can link together a constant input, a several dropout layers and a

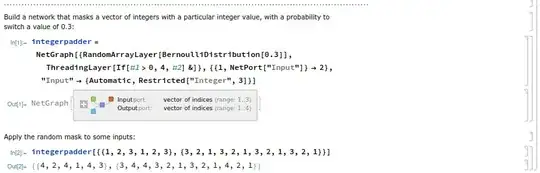

TotalLayerto get a poor man's Gaussian noise approximation, (eachDropoutLayerproduces a Bernoulli random variable per pixel, and the sum of a number of Bernoulli random variables is approximately Gaussian). This is my best idea so far, but I need a lot ofDropoutLayers to get close to a continuous distribution, and it's not very efficient to include an input that's always constant
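
To put rough numbers on the third idea, here is a back-of-the-envelope sketch. It assumes a constant input value $c$, $n$ independent `DropoutLayer`s that all use the same drop probability $p$, and that each layer rescales the surviving values by $1/(1-p)$ during training (which is how `DropoutLayer` is documented to behave). The symbols $c$, $n$, $p$ and $\sigma$ are just my own notation for this estimate.

$$
\begin{aligned}
X_i &= \frac{c}{1-p}\,B_i, \qquad B_i \sim \mathrm{Bernoulli}(1-p), \\
\mathbb{E}[X_i] &= c, \qquad \mathrm{Var}[X_i] = \frac{c^2\,p}{1-p}, \\
S &= \sum_{i=1}^{n} X_i, \qquad \mathbb{E}[S] = n c, \qquad \mathrm{Var}[S] = \frac{n\,c^2\,p}{1-p}.
\end{aligned}
$$

By the central limit theorem, $S$ is approximately Gaussian with this mean and variance for large $n$, but it only ever takes the $n+1$ values $k\,c/(1-p)$ with $k = 0, \dots, n$, which is why many layers are needed before the noise looks continuous. Under these assumptions, zero-mean noise with standard deviation $\sigma$ would need the offset $nc$ subtracted and $c = \sigma\sqrt{(1-p)/(n p)}$; for $p = 1/2$ that is $c = \sigma/\sqrt{n}$.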