I am using Mathematica 11.0.1.

This post is related to my previous question about a faster way to merge data.

Prompted by Edmund's answer there, I found a subtle performance issue with `Merge`.

First, define a function that generates test data:

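```mathematica
Clear[data];
(* n rules of the form {i, j} -> x: i and j are integers in 1..10
   (so at most 100 distinct keys) and x is a random real *)
data[n_] := Module[{tmp},
  tmp = Join[RandomInteger[{1, 10}, {n, 2}], RandomReal[1., {n, 1}], 2];
  Thread[tmp[[;; , 1 ;; 2]] -> tmp[[;; , -1]]]]
```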

Then build a test set and check that `GroupBy` and `Merge` agree:

```mathematica
(* data already returns a list of rules, so no further Thread is needed *)
test = data[1000];
GroupBy[test, First -> Last, Total] === Merge[test, Total]
(* True *)
```
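To make the equivalence concrete, here is a tiny hand-made input (the name `rules` is just illustrative) in which the key `{1, 2}` occurs twice. Both expressions should return the same association: the values of the duplicated key are summed, and keys appear in order of first occurrence.

```mathematica
(* three rules; the key {1, 2} occurs twice *)
rules = {{1, 2} -> 0.5, {3, 4} -> 1., {1, 2} -> 0.25};

(* group by key (First), keep the values (Last), then Total each group *)
GroupBy[rules, First -> Last, Total]
(* <|{1, 2} -> 0.75, {3, 4} -> 1.|> *)

(* Merge collects the values of equal keys and applies Total to them *)
Merge[rules, Total]
(* <|{1, 2} -> 0.75, {3, 4} -> 1.|> *)
```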

Now some timings, for list lengths from 2^1 to 2^19:

```mathematica
(* timing = {GroupBy timings, Merge timings}, one entry per length 2^i *)
timing = Transpose@Table[
    test = data[2^i];
    {AbsoluteTiming[GroupBy[test, First -> Last, Total];][[1]],
     AbsoluteTiming[Merge[test, Total];][[1]]},
    {i, 1, 19}];
```

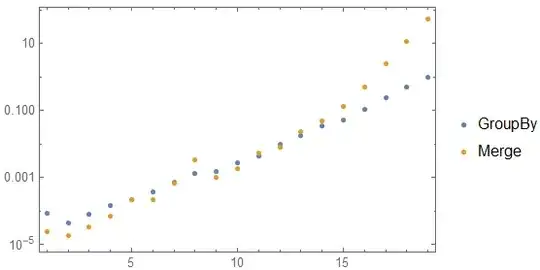

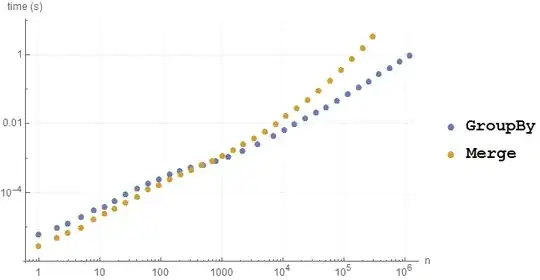

ListLogPlot[timing, PlotRange -> All, Frame -> True,

PlotLegends -> {"GroupBy", "Merge"}]

This gives

We can see that the performance of `Merge` degrades severely, but only once the length of the list exceeds some threshold. What is happening?

"`Merge` was designed for maximum performance on sets with many unique keys..." I'd rather think they messed up the design of `Merge` :) No matter what the keys look like, `GroupBy` performs well, and `SparseArray` is even faster. If they designed it that way and didn't mention anything about it in the documentation, oh my god, that really is an intentional trap, because for the example in my post I don't think `GroupBy` is a more natural function to pick than `Merge` :) – matheorem Jun 13 '17 at 06:10
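One way to probe the claim quoted in the comment is to hold the list length fixed and vary only the number of distinct keys. This is a sketch under that assumption; `makeRules`, `kmax`, `few`, `many` and `timings` are hypothetical names, and no timings are claimed here, since they depend on the machine and version.

```mathematica
(* hypothetical helper: n rules {i, j} -> x with i, j drawn from 1..kmax,
   so there are at most kmax^2 distinct keys *)
makeRules[n_, kmax_] :=
  Thread[RandomInteger[{1, kmax}, {n, 2}] -> RandomReal[1., n]];

n = 2^17;
few = makeRules[n, 10];     (* at most 100 distinct keys *)
many = makeRules[n, 10^4];  (* 10^8 possible keys: nearly all distinct *)

(* {GroupBy seconds, Merge seconds} for the few-keys and many-keys inputs *)
timings = Table[
  {First@AbsoluteTiming[GroupBy[r, First -> Last, Total];],
   First@AbsoluteTiming[Merge[r, Total];]},
  {r, {few, many}}]
```

If `Merge` is indeed tuned for many unique keys, its relative cost should look very different between the two rows.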