Mathematica's neural network framework is based on MXNet.

There are also many pretrained models available for MXNet (the MXNet Model Zoo).

For example, I followed the tutorial "An introduction to the MXNet API — part 4", which uses Inception v3 (a network built with MXNet).

I can load this pretrained model with the following code:

<< NeuralNetworks`; (* provides ImportMXNetModel *)

(* symbol file = architecture, params file = trained weights *)
net = ImportMXNetModel["F:\\model\\Inception-BN-symbol.json",
   "F:\\model\\Inception-BN-0126.params"];

labels = Import["http://data.dmlc.ml/models/imagenet/synset.txt", "List"];

(* the image encoder yields pixel values in [0, 1]; rescale them to [0, 255] for the MXNet model *)
incepNet = NetChain[{ElementwiseLayer[#*255 &], net},
   "Input" -> NetEncoder[{"Image", {224, 224}, ColorSpace -> "RGB"}],
   "Output" -> NetDecoder[{"Class", labels}]]

(* top 5 classes by probability *)
TakeLargestBy[incepNet[Import["https://i.stack.imgur.com/893F0.jpg"],
   "Probabilities"], Identity, 5] // Dataset

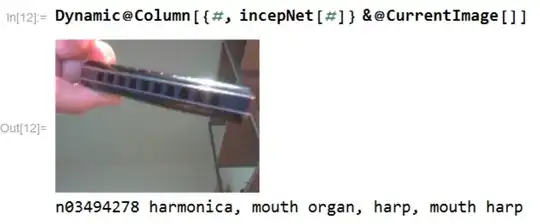

Dynamic@Column[{#, incepNet[#]} &@CurrentImage[]]

FileByteCount[Export["Inception v3.wlnet", incepNet]]/2^20.

(*44.0188 MB*)

I want to share this model with others, but the file is too large (about 44 MB) because the pretrained model contains a large number of parameters.
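As a rough sanity check on where that size comes from, assume the weights are stored as 32-bit floats (4 bytes each) and ignore any metadata overhead in the `.wlnet` file. The exported size then gives an approximate upper bound on the parameter count:

```latex
N_{\text{params}} \lesssim \frac{44.0188 \times 2^{20}\ \text{bytes}}{4\ \text{bytes/parameter}} \approx 1.15 \times 10^{7}
```

Under that assumption, nearly all of the file is trained weights, so the architecture alone should take up only a small fraction of it.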

If I only want to share the model's structure, I think a deNetInitialize function (the reverse of NetInitialize) is needed.

As you can see, an UninitializedEvaluationNet needs very little memory.

To share nets in this uninitialized form, like an UninitializedEvaluationNet, can we define a deNetInitialize function that strips out the trained parameters?