Here is an example with some small matrices; it works the same way for large ones. The key point is to use the NDSolve option SolveDelayed -> True.

Some system matrices:

```mathematica
matS = {{0, 0, 0, 0, 0, 0}, {0, 0, 0, 0, 0, 0}, {0, 0, 0, 0, 0, 0},
   {0, 0, 0, 0, 0, 0}, {0, 0, 0, 0, 0, 0}, {0, 0, 0, 0, 0, 0}}; (* coefficient of u[t] *)

matD = {{0, 0, 0, 0, 1, 0}, {0, 1/1000, 0, -(1/1000), 0, 0}, {0, 0, 1, 0, 0, 0},
   {0, -(1/1000), 0, 1/1000, 0, -1}, {1, 0, 0, 0, 0, 0}, {0, 0, 0, -1, 0, 0}}; (* coefficient of u'[t] *)

matM = {{1/1000000, -(1/1000000), 0, 0, 0, 0}, {-(1/1000000), 1/1000000, 0, 0, 0, 0},
   {0, 0, 0, 0, 0, 0}, {0, 0, 0, 0, 0, 0}, {0, 0, 0, 0, 0, 0}, {0, 0, 0, 0, 0, 0}}; (* coefficient of u''[t] *)

matL = {0, 0, 0, 0, 5 (1/2 + 1/2 Erf[100000 (-(1/1000) + t)]), 0}; (* time-dependent right-hand side *)

sysSize = Length[matS];
tInit = 0.;
tEnd = 0.01;
init = Table[0, {sysSize}];

(* u'[tInit] from matD.u' + matS.u == matL at tInit, i.e. with the matM term dropped *)
dinit = LinearSolve[matD, -(matS.init - matL) /. t -> tInit];

if = u /. First /@ NDSolve[{matM.Derivative[2][u][t] + matD.Derivative[1][u][t] + matS.u[t] == matL,
     u[tInit] == init, Derivative[1][u][tInit] == dinit}, u, {t, tInit, tEnd},
    SolveDelayed -> True]
```
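Why the option is needed: matM has rank 1 and its rows 3 to 6 are zero, so those equations contain no second derivatives and NDSolve cannot solve the system for u''[t], which is what it tries to do by default. SolveDelayed -> True makes NDSolve work with the equations in residual (DAE) form instead. SolveDelayed is a legacy option; in newer versions the setting Method -> {"EquationSimplification" -> "Residual"} should correspond to it. The sketch below checks the rank and uses that Method setting. It repeats the same system in a compact form so that it runs on its own, and the name sol is introduced here only for illustration.

```mathematica
(* Same 6x6 system as above, written compactly (values unchanged) *)
n = 6;
matS = ConstantArray[0, {n, n}];
matM = ReplacePart[ConstantArray[0, {n, n}], {{1, 1} -> 1/1000000,
    {1, 2} -> -1/1000000, {2, 1} -> -1/1000000, {2, 2} -> 1/1000000}];
matD = ReplacePart[ConstantArray[0, {n, n}], {{1, 5} -> 1, {2, 2} -> 1/1000,
    {2, 4} -> -1/1000, {3, 3} -> 1, {4, 2} -> -1/1000, {4, 4} -> 1/1000,
    {4, 6} -> -1, {5, 1} -> 1, {6, 4} -> -1}];
matL = {0, 0, 0, 0, 5 (1/2 + 1/2 Erf[100000 (-(1/1000) + t)]), 0};
tInit = 0.; tEnd = 0.01;
init = Table[0, {n}];
dinit = LinearSolve[matD, -(matS.init - matL) /. t -> tInit];

(* matM (coefficient of u''[t]) is singular, so u''[t] cannot be isolated *)
MatrixRank[matM]  (* 1 *)

(* Indices of the all-zero rows of matM: these equations have no u''[t] term *)
Flatten[Position[Total[Abs[matM], {2}], 0]]  (* {3, 4, 5, 6} *)

(* Residual (DAE) form requested through the Method option *)
sol = u /. First[NDSolve[{matM.u''[t] + matD.u'[t] + matS.u[t] == matL,
      u[tInit] == init, u'[tInit] == dinit}, u, {t, tInit, tEnd},
     Method -> {"EquationSimplification" -> "Residual"}]];
sol[0.001]
```

If your version accepts this Method form, sol[0.001] should agree with the if[0.001] values shown below up to integration tolerances.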

The interpolation function then returns a vector for a time t:

```mathematica
if[0.001]

(* {0.00001410474097541479`, 0.000014042475237194534`, 0.`, 0.`, -1.4042475237194535`*^-8, -1.4042475237194532`*^-8} *)
```

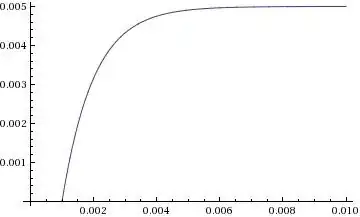

To plot a single component, here the second one:

```mathematica
Plot[if[t][[2]], {t, tInit, tEnd}]
```

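If your coefficient matrices are functions (for example, of the solution vector), you can write matD[u[t]] in the equations and define matD with a pattern that only matches explicit vectors, such as matD[x_?VectorQ] := ..., or some other appropriate pattern. The pattern test keeps matD[u[t]] from being evaluated while u[t] is still symbolic.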
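A sketch of this pattern, using a made-up state-dependent damping matrix matDfun (matD scaled by 1 + u2^2, chosen only for illustration; it is not part of the original system). Because VectorQ[u[t]] is False, matDfun[u[t]] stays unevaluated while NDSolve analyzes the equations, and it is meant to be evaluated only once NDSolve substitutes a numeric vector during integration. This is an unverified sketch; the system is repeated compactly so the block runs on its own, and the names matDfun and ifNL are hypothetical.

```mathematica
(* Same 6x6 system as above, written compactly (values unchanged) *)
n = 6;
matS = ConstantArray[0, {n, n}];
matM = ReplacePart[ConstantArray[0, {n, n}], {{1, 1} -> 1/1000000,
    {1, 2} -> -1/1000000, {2, 1} -> -1/1000000, {2, 2} -> 1/1000000}];
matD = ReplacePart[ConstantArray[0, {n, n}], {{1, 5} -> 1, {2, 2} -> 1/1000,
    {2, 4} -> -1/1000, {3, 3} -> 1, {4, 2} -> -1/1000, {4, 4} -> 1/1000,
    {4, 6} -> -1, {5, 1} -> 1, {6, 4} -> -1}];
matL = {0, 0, 0, 0, 5 (1/2 + 1/2 Erf[100000 (-(1/1000) + t)]), 0};
tInit = 0.; tEnd = 0.01;
init = Table[0, {n}];
dinit = LinearSolve[matD, -(matS.init - matL) /. t -> tInit];

(* Hypothetical state-dependent damping matrix (illustration only):
   the ?VectorQ test means it only evaluates for an explicit list *)
matDfun[x_?VectorQ] := (1 + x[[2]]^2) matD;

matDfun[u[t]]  (* stays unevaluated, since u[t] is not a list *)

ifNL = u /. First[NDSolve[{matM.u''[t] + matDfun[u[t]].u'[t] + matS.u[t] == matL,
      u[tInit] == init, u'[tInit] == dinit}, u, {t, tInit, tEnd},
     SolveDelayed -> True]];
ifNL[0.001]
```

Since the solution in this example is tiny, the factor 1 + u2^2 stays very close to 1 and the result should be close to the linear one; for a real model, matDfun would be replaced by your own matrix function.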

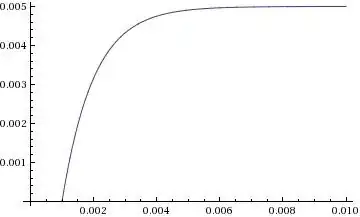

Here is an example for larger system matrices.