Let me give a different approach. One downside of WalkingRandomly's approach is that he distributes the whole list to all subkernels. Then he uses Part in each subcall to select the data to use. I will do this differently:

- I divide the data into chunks and define one chunk as subdata on each subkernel you want to use in the current call
- then I can simply call Map[f, subdata] on each wanted subkernel with ParallelEvaluate

The chunkenize function works whether or not Length[data] is divisible by the number of kernels used.

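    (* split data into at most nkernels chunks; the last chunk may be shorter *)
    chunkenize[data_, nkernels_] :=
      Partition[data, UpTo[Ceiling[Length[data] / nkernels]]]

    MyParallelMap[f_, data_, kernels_] :=
      Module[{chunks = chunkenize[data, Length[kernels]]},
        Block[{subdata},
          (* define the i-th chunk as subdata on the i-th kernel *)
          MapIndexed[
            ParallelEvaluate[subdata = #1, kernels[[First[#2]]]] &, chunks];
          DistributeDefinitions[f];
          (* each kernel maps f over its own chunk *)
          ParallelEvaluate[Map[f, subdata], kernels]
        ]
      ]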
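To see what chunkenize does when the length is not divisible by the number of kernels, here is a small check that needs no subkernels at all. It only repeats the definition from above and calls it on two short ranges; the inputs are my own choice for illustration.

```mathematica
(* same definition as above, repeated so the snippet is self-contained *)
chunkenize[data_, nkernels_] :=
  Partition[data, UpTo[Ceiling[Length[data]/nkernels]]]

(* 10 elements, 4 kernels: chunk size Ceiling[10/4] = 3 *)
chunkenize[Range[10], 4]
(* {{1, 2, 3}, {4, 5, 6}, {7, 8, 9}, {10}} *)

(* 5 elements, 4 kernels: chunk size Ceiling[5/4] = 2 *)
chunkenize[Range[5], 4]
(* {{1, 2}, {3, 4}, {5}} *)
```

Note that the second call yields only three chunks for four kernels. As far as I can tell from the definitions, the fourth kernel would then not receive a fresh subdata, so for very short lists it is probably safer to pass no more kernels than there are chunks.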

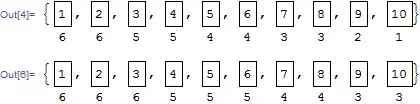

Trying it gives

    data = Range[20];
    f[x_] := {$KernelID, x^2}
    kernels = LaunchKernels[];
    MyParallelMap[f, data, kernels]
    (*
    {{{1,1},{1,4},{1,9},{1,16},{1,25}},{{2,36},{2,49},{2,64},{2,81},{2,100}},
    {{3,121},{3,144},{3,169},{3,196},{3,225}},{{4,256},{4,289},{4,324},{4,361},{4,400}}}
    *)

Or, if you like, use only some of the kernels:

    MyParallelMap[f, data, kernels[[{2, 3}]]]
    (*
    {{{2,1},{2,4},{2,9},{2,16},{2,25},{2,36},{2,49},{2,64},{2,81},{2,100}},
    {{3,121},{3,144},{3,169},{3,196},{3,225},{3,256},{3,289},{3,324},{3,361},{3,400}}}
    *)
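As the outputs show, the result comes back grouped by kernel, with one sublist per kernel. If you want a flat list like the one Map would return, removing the outer level should be enough. The sketch below uses a small hand-written list (perKernel is a made-up stand-in, not real output) so it runs without any subkernels.

```mathematica
(* hypothetical stand-in for the per-kernel result of MyParallelMap *)
perKernel = {{{1, 1}, {1, 4}}, {{2, 9}, {2, 16}}};

(* remove only the outer, per-kernel level *)
Flatten[perKernel, 1]
(* {{1, 1}, {1, 4}, {2, 9}, {2, 16}} *)
```

Applied to the real thing, this would read Flatten[MyParallelMap[f, data, kernels], 1] or, equivalently, Join @@ MyParallelMap[f, data, kernels].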

Update

> Also I would really like to know why overriding Parallel`Protected`$kernels does not work.

When you trace the output of a simple ParallelMap call, you can investigate what happens. What I did was create a full trace output and then check at which positions subkernels such as KernelObject[1, "local"] appear.

In detail, this meant checking the FullForm of a subkernel, because then you see that it has the form

    Parallel`Kernels`kernel[....]

Then I launched some kernels and traced the output. Using Position, you can find all positions that match a subkernel:

    kernels = LaunchKernels[];
    trace = Trace[ParallelMap[$KernelID &, Range[100]]];
    pos = Position[trace, Parallel`Kernels`kernel, Infinity];

If you now inspect the positions where the subkernels appear, you first find what you already found: Parallel`Protected`$kernels. But soon you see

    Part[trace, Sequence @@ Drop[pos[[10]], -4]]
    (*
    {Parallel`Protected`$sortedkernels,
     {KernelObject[1,local],KernelObject[2,local],
      KernelObject[3,local],KernelObject[4,local]}}
    *)
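The Drop[..., -4] step may be easier to follow on a toy expression. The sketch below imitates the shape of a single trace entry with made-up symbols (toy, name and k are placeholders, not anything from the Parallel` package): Position finds the head k, and dropping the last four indices climbs from that head up to the enclosing {variable, value} pair.

```mathematica
(* made-up stand-in for a piece of Trace output *)
toy = {x, {HoldForm[name], HoldForm[{k[1], k[2]}]}};

(* Position also searches heads, so every position found ends in 0 *)
pos = Position[toy, k, Infinity]
(* {{2, 2, 1, 1, 0}, {2, 2, 1, 2, 0}} *)

(* drop head index, element index, list-in-HoldForm index, HoldForm index *)
Part[toy, Sequence @@ Drop[First[pos], -4]]
(* {name, {k[1], k[2]}} *)
```

The last output is displayed without the HoldForm wrappers, just like the trace entry shown above.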

This brings us to the following solution:

    Block[{
      $KernelCount = 2,
      Parallel`Protected`$sortedkernels = Take[kernels, 2]
     },
     ParallelMap[$KernelID &, Range[100]]
    ]
    (*
    {1,1,1,1,1,1,1,1,1,1,1,1,1,2,2,2,2,2,2,2,2,2,2,2,2,2,1,1,1,1,1,1,1,1,1,1,
    1,1,1,2,2,2,2,2,2,2,2,2,2,2,2,2,1,1,1,1,1,1,1,1,1,1,1,1,2,2,2,2,2,2,2,2,2,
    2,2,2,1,1,1,1,1,1,1,1,1,1,1,1,2,2,2,2,2,2,2,2,2,2,2,2}
    *)

Since I didn't find this that quickly, I had time to do some more spelunking. You may have noted that I set $KernelCount in the Block. This is because its value is used when the work is partitioned among the kernels.
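If you need this more than once, the Block construct can be wrapped in a small helper. This is only a sketch: parallelMapOn is a name I made up, and it depends on the undocumented Parallel`Protected`$sortedkernels symbol found in the trace, so it may behave differently or stop working in other versions.

```mathematica
(* hypothetical helper, not part of the Parallel` package;
   relies on the undocumented Parallel`Protected`$sortedkernels *)
parallelMapOn[f_, data_, ks_List] :=
  Block[{
    $KernelCount = Length[ks],              (* used when partitioning the work *)
    Parallel`Protected`$sortedkernels = ks  (* the kernels ParallelMap should see *)
   },
   ParallelMap[f, data]
  ]
```

With kernels = LaunchKernels[] as before, it would be called as parallelMapOn[$KernelID &, Range[100], Take[kernels, 2]].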

Block[] within a Module. In fact, I never use Block[]. May I ask why you use it here? Why not just do MyParallelMap[f_, data_, kernels_] := Module[{chunks = chunkenize[data, Length[kernels]], subdata}, MapIndexed[ParallelEvaluate[subdata = #1, kernels[[First[#2]]]] &, chunks]; DistributeDefinitions[f]; ParallelEvaluate[Map[f, subdata], kernels]] – WalkingRandomly Nov 27 '12 at 01:11

Block because ParallelEvaluate has the HoldFirst attribute. I just like to make sure (even for the reader) that subdata is a new, local variable which should not interfere with any globally defined subdata. The difference between Block and Module is the scoping type: the first uses dynamic scoping, the latter lexical scoping – halirutan Nov 27 '12 at 01:21

MyParallelMap[f, data, kernel] several times, how could this code be optimized in case data were a fixed quantity, and the function f were constantly changing? – Ziofil Apr 18 '13 at 22:06