Getting a good mesh often requires significant work. With the finite element package we can have first- or second-order elements. This suggests that we need fine, good-quality meshes rather than relying on high-order shape functions. If you have a point of interest that requires a fine mesh, how quickly can the mesh size change as you move away from this point? Are there tools for this? This question looks at the overall quality of the mesh. Here I am interested in the quality around a point of interest.

To be specific my current problems with stress occur on the boundaries. I note that if I put in a small mesh length at the boundary it will be nicely expanded into the interior.

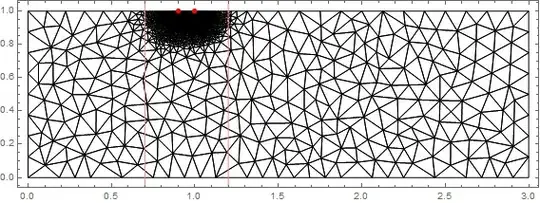

Here is an example in which I have two points on the boundary where I want a fine mesh.

Needs["NDSolve`FEM`"];

L = 3; (* Length *)

h = 1; (* Height *)

Y = 10^3; (* Modulus of elasticity *)

ν = 33/100; (* Poisson ratio *)

gLS = h/5; (* Basic grid length scale *)

sgLS = gLS/200; (* Small grid length scale *)

p1 = L/3; p2 = 1.337; (* Points of interest *)

nn = 4;

cc = {{0, 0},

Sequence @@ Table[{p2 + n sgLS, 0}, {n, -nn, nn}], {L, 0}, {L, h},

Sequence @@ Table[{p1 + n sgLS, h}, {n, -nn, nn}], {0, h}};

ccp = Partition[Range[Length@cc], 2, 1, 1];

bmesh = ToBoundaryMesh["Coordinates" -> cc,

"BoundaryElements" -> {LineElement[ccp]}];

mesh = ToElementMesh[bmesh, MaxCellMeasure -> {"Length" -> gLS}];

Show[mesh["Wireframe"],

Graphics[{Red, PointSize[0.01], Point[{{p1, h}, {p2, 0}}],

Thickness[0.01], Line[{{p2 - gLS/2, h/2}, {p2 + gLS/2, h/2}}]}]]

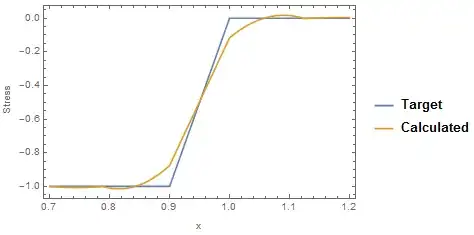

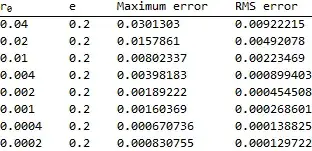

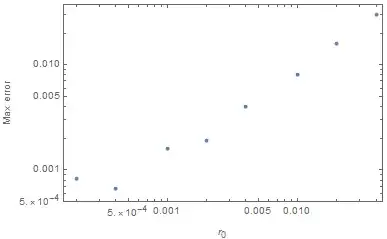

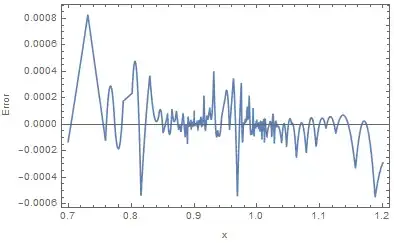

The red line has a length equal to my requested basic mesh length scale, and I estimate that the actual mesh length scale is about 75% of this, which is good. Can the actual grid length scale in an area be extracted? I have also put in closely spaced points on the boundary around each location where I need good resolution (2 nn + 1 = 9 points with nn = 4, spaced sgLS apart). The mesher has kept these points and has automatically expanded the mesh size away from them. A zoom shows this.
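One way to put a number on the local grid length scale is to read the element corners out of the mesh and average the edge lengths of the triangles near a point. The following is only a sketch: `localEdgeLength` and `demoMesh` are hypothetical names of my own, not part of NDSolve`FEM`, and the helper assumes a 2D mesh made of triangles only. It uses a plain rectangle so that it runs on its own.

```mathematica
Needs["NDSolve`FEM`"];

(* Hypothetical helper, not part of NDSolve`FEM`: mean corner-to-corner edge
   length of the triangles whose centroid lies within radius r of pt.
   Assumes a 2D mesh that contains only TriangleElement cells. *)
localEdgeLength[mesh_, pt_, r_] :=
 Module[{coords, inc, tris, near, lens},
  coords = mesh["Coordinates"];
  (* the first three incidents are the corner nodes, also for second order *)
  inc = (Join @@ ElementIncidents[mesh["MeshElements"]])[[All, 1 ;; 3]];
  (* corner coordinates of every triangle, as a list of 3 x 2 matrices *)
  tris = coords[[#]] & /@ inc;
  (* keep the triangles whose centroid is close to pt *)
  near = Select[tris, Norm[Mean[#] - pt] <= r &];
  (* the three edge lengths of each selected triangle *)
  lens = Flatten[Function[t, Norm /@ (t - RotateLeft[t])] /@ near];
  If[lens === {}, Missing["NoElements"], Mean[lens]]]

(* demo on a plain rectangle; the mesh from the question can be used instead *)
demoMesh = ToElementMesh[Rectangle[{0, 0}, {3, 1}],
   MaxCellMeasure -> {"Length" -> 0.2}];
localEdgeLength[demoMesh, {1.5, 0.5}, 0.3]
```

Calling the helper at several radii around a point of interest, for example `localEdgeLength[mesh, {p2, 0}, r]` for a range of `r`, gives a rough picture of how fast the mesh grows away from that point.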

d = 0.02;

Show[mesh["Wireframe"],

Graphics[{Red, PointSize[0.02], Point[{{p1, h}, {p2, 0}}] }],

Frame -> True, PlotRange -> {{p2 - d, p2 + d}, {0, 2 d}}]

How do I determine if this rate of mesh expansion away from the point of interest is good enough for my application? What is the heuristic used in making this mesh? Can it be controlled? Of course I can go to a MeshRefinementFunction but how quickly should I expand that if I use it?
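For reference, a graded `MeshRefinementFunction` could look like the sketch below. The target edge length grows linearly with distance from the nearest point of interest. The names `hMin`, `hMax`, `growth`, `targetLength`, `refineQ` and `gradedMesh` are hypothetical, and the value of `growth` is an arbitrary illustration, not a recommended expansion rate. The point coordinates are the values of {p1, h} and {p2, 0} from above.

```mathematica
Needs["NDSolve`FEM`"];

(* hypothetical grading parameters; the growth rate is an assumption *)
hMin = 0.001;   (* target edge length at a point of interest *)
hMax = 0.2;     (* target edge length far from any point of interest *)
growth = 0.3;   (* increase in target edge length per unit distance *)

(* points of interest, copied from the example above: {p1, h} and {p2, 0} *)
pts = {{1., 1.}, {1.337, 0.}};

(* target edge length at position c *)
targetLength[c_] := Min[hMax, hMin + growth Min[Norm[c - #] & /@ pts]];

(* refine a triangle when its area exceeds that of an equilateral
   triangle with the target edge length at its centroid *)
refineQ = Function[{vertices, area},
   area > Sqrt[3]/4 targetLength[Mean[vertices]]^2];

gradedMesh = ToElementMesh[Rectangle[{0, 0}, {3, 1}],
   MeshRefinementFunction -> refineQ];

gradedMesh["Wireframe"]
```

With this form the expansion rate is a single explicit parameter, so it can be varied to see how sensitive the stresses are to it.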

The answer to the appropriate rate of expansion probably depends on the problem and the fact that we have second order elements. So here are some modules to run a problem.

planeStress[

Y_, ν_] := {Inactive[

Div][({{0, -((Y ν)/(1 - ν^2))}, {-((Y (1 - ν))/(2 (1 - ν^2))), 0}}.Inactive[Grad][v[x, y], {x, y}]), {x, y}] +

Inactive[

Div][({{-(Y/(1 - ν^2)),

0}, {0, -((Y (1 - ν))/(2 (1 - ν^2)))}}.Inactive[Grad][

u[x, y], {x, y}]), {x, y}],

Inactive[Div][({{0, -((Y (1 - ν))/(2 (1 - ν^2)))}, {-((Y ν)/(1 - ν^2)), 0}}.Inactive[Grad][u[x, y], {x, y}]), {x, y}] +

Inactive[

Div][({{-((Y (1 - ν))/(2 (1 - ν^2))),

0}, {0, -(Y/(1 - ν^2))}}.Inactive[Grad][

v[x, y], {x, y}]), {x, y}]}

ClearAll[stress2D]

stress2D[{uif_InterpolatingFunction,

vif_InterpolatingFunction}, {Y_, ν_}] :=

Block[{fac, dd, df, mesh, coords, dv, ux, uy, vx, vy, ex, ey, gxy,

sxx, syy, sxy}, dd = Outer[(D[#1[x, y], #2]) &, {uif, vif}, {x, y}];

fac = Y/(1 - ν^2)*{{1, ν, 0}, {ν, 1, 0}, {0,

0, (1 - ν)/2}};

df = Table[Function[{x, y}, Evaluate[dd[[i, j]]]], {i, 2}, {j, 2}];

(*the coordinates from the ElementMesh*)

mesh = uif["Coordinates"][[1]];

coords = mesh["Coordinates"];

dv = Table[df[[i, j]] @@@ coords, {i, 2}, {j, 2}];

ux = dv[[1, 1]];

uy = dv[[1, 2]];

vx = dv[[2, 1]];

vy = dv[[2, 2]];

ex = ux;

ey = vy;

gxy = (uy + vx);

sxx = fac[[1, 1]]*ex + fac[[1, 2]]*ey;

syy = fac[[2, 1]]*ex + fac[[2, 2]]*ey;

sxy = fac[[3, 3]]*gxy;

{ElementMeshInterpolation[{mesh}, sxx],

ElementMeshInterpolation[{mesh}, syy],

ElementMeshInterpolation[{mesh}, sxy]

}]

The example problem (a MWE) is

{uif, vif} =

NDSolveValue[{planeStress[Y, ν] == {0,

NeumannValue[-1, 0 <= x <= p1 && y == h]},

DirichletCondition[v[x, y] == 0, 0 <= x <= p2 && y == 0],

DirichletCondition[u[x, y] == 0, x == 0 && y == 0]}, {u,

v}, {x, y} ∈ mesh];

{σxx, σyy, σxy} =

stress2D[{uif, vif}, {Y, ν}];

Plot[{σxx[x, 0], σyy[x, 0], 500 vif[x, 0]}, {x, 0, L},

PlotRange -> All, Frame -> True, Axes -> False,

PlotLegends ->

LineLegend[{"\!\(\*SubscriptBox[\(σ\), \(xx\)]\)",

"\!\(\*SubscriptBox[\(σ\), \(yy\)]\)", "500 v"}]]

As you can see the results look nice and have a singularity where I have put my point (which is correct). However, is my mesh adequate? How can I tell? Is there a rule of thumb?
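The only generic check I know of is a mesh convergence study: solve on successively finer meshes and watch how much a quantity of interest changes. Below is a minimal sketch of that idea, using a simple Poisson problem as a stand-in for the plane stress problem so that it is self-contained. `solveOn`, `lens`, `vals` and `relChange` are hypothetical names. Note that a stress value exactly at a singular point will not converge under refinement, so the comparison should be made a fixed distance away from it or on an integrated quantity.

```mathematica
Needs["NDSolve`FEM`"];

(* stand-in problem (an assumption, not the plane stress problem above):
   Poisson equation on a 3 x 1 rectangle with zero boundary values,
   solved on a mesh with the requested length scale len *)
solveOn[len_] := NDSolveValue[
   {-Laplacian[u[x, y], {x, y}] == 1,
    DirichletCondition[u[x, y] == 0, True]},
   u,
   {x, y} ∈ ToElementMesh[Rectangle[{0, 0}, {3, 1}],
     MaxCellMeasure -> {"Length" -> len}]];

(* successively halved mesh length scales *)
lens = {0.2, 0.1, 0.05};

(* quantity of interest: the solution at a fixed probe point *)
vals = solveOn[#][1.5, 0.5] & /@ lens;

(* relative change between consecutive refinements *)
relChange = Abs[Differences[vals]/Rest[vals]]
```

If the relative change keeps shrinking and drops below the accuracy that is needed, the coarser of the last two meshes is probably adequate for that quantity.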

Thanks for any advice.

… "MeshOrder" -> 1 to ToElementMesh you will get a first order mesh and not a second order mesh. So you can have either or. – user21 Apr 24 '18 at 13:26

… ElementMesh) with extensive explanation. Maybe some sections could be useful for your case? – Pinti Apr 25 '18 at 09:49