I am looking to weight the words (TF-IDF) of a random text by their occurrence and to show the result in a matrix. I saw that there is a project on this, but I would like to know whether it is possible to change the visualization: https://demonstrations.wolfram.com/TermWeightingWithTFIDF/

3 Answers

"I am looking to weight the words (TF-IDF) of a random text by their occurrence and to show the result in a matrix."

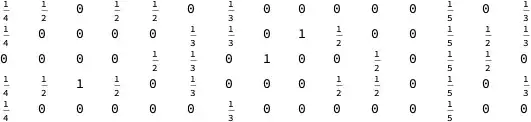

Weighting words by frequency of occurrence is the same as normalizing the columns of the matrix. To do this, let data be your term/document matrix and sum its columns with Total; replacing any zero sum by 1 avoids division by zero. The third line then divides each column by its sum.

data = RandomInteger[{0, 1}, {5, 15}];

norm = Total[data] /. {0 -> 1};

tab = Transpose[Table[Transpose[data][[i]]/norm[[i]], {i, Length[norm]}]];

TableForm[tab]

You'll have to decide what you want it to look like.
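
As a rough illustration of how the look can be changed, here is a minimal sketch that shows the same normalized matrix first as a framed, labelled grid and then as a heat map. The labels "doc i" and "w j" are placeholders invented for this example, and treating rows as documents and columns as terms is an assumption.

```mathematica
(* Illustrative data; rows = documents, columns = terms (an assumption) *)
SeedRandom[1];
data = RandomInteger[{0, 1}, {5, 15}];
norm = Total[data] /. {0 -> 1};   (* column sums, with 0 replaced by 1 *)
tab = #/norm & /@ data;           (* divide each row elementwise by the column sums *)

(* Option 1: framed grid with placeholder row and column labels *)
header = Prepend[Table["w" <> ToString[j], {j, Length[norm]}], ""];
rows = MapIndexed[Prepend[#1, "doc " <> ToString[First[#2]]] &, tab];
Grid[Prepend[rows, header], Frame -> All]

(* Option 2: heat map of the weights *)
MatrixPlot[N[tab], ColorFunction -> "TemperatureMap", PlotLegends -> Automatic]
```

Grid is convenient when the exact numbers matter; MatrixPlot is easier to read once the matrix has many rows and columns.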

I have come up with this code so far:

TFIDF = FeatureExtraction[ Join[First /@ Keys@ruleData[[All]], Last /@ Keys@ruleData[[All]]], "TFIDF"]

but the output tells me that there is a nonatomic expression. My variable ruleData above is an association between labels and sentences (1 -> sentence 1, 2 -> sentence 2, 3 -> sentence 3, ...).

I want to apply TF-IDF to each sentence and to all the sentences taken together.

– Tom Peterson Apr 08 '19 at 13:32
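
One possible reading of the error: if the keys of ruleData are integer labels, then First /@ Keys@ruleData applies First to atoms such as 1, which is what produces a "Nonatomic expression expected" message. A minimal sketch under that assumption passes the sentences themselves (the values of the association) to FeatureExtraction. The sample ruleData below is made up for illustration, and the exact form of the output may depend on the Wolfram Language version.

```mathematica
(* Hypothetical stand-in for ruleData: integer label -> sentence *)
ruleData = <|1 -> "the cat is grey", 2 -> "my cat is fast", 3 -> "the big dog"|>;

(* The sentences are the values of the association, not the keys *)
sentences = Values[ruleData];

(* Train a TF-IDF extractor on all sentences together ... *)
extractor = FeatureExtraction[sentences, "TFIDF"];

(* ... then apply it to one sentence, or to the whole list *)
extractor[First[sentences]]
extractor[sentences]
```

Training on the full list gives the corpus-wide document frequencies, while applying the extractor to a single sentence gives that sentence's weight vector.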

From the comments of the question:

So far I have only cleaned my data by removing stopwords and punctuation. The next steps would be to: 1. separate my dataset (one big text) into subsets, one per sentence ({sentence 1}, {sentence 2}, ...), perhaps assigning an ID to each sentence; 2. take a unique list of all the words in the text; 3. for each sentence, count whether each word appears in it; 4. put the result in a table like the one above.

This process is more or less followed in the blog post: "The Great conversation in USA presidential speeches".

The crucial step is making the document-word contingency matrix. Generally speaking, for this you can use the function CrossTabulate from the package CrossTabulate.m. For more details see this blog post: "Contingency tables creation examples".

TF-IDF and related measures can be computed with the package DocumentTermMatrixConstruction.m.

(These packages are used in the blog post "The Great conversation in USA presidential speeches".)
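
For readers who want to see the four quoted steps end to end without loading any package, here is a minimal sketch that uses only built-in functions. It is not the method of the packages mentioned above. The sample text is invented, and the weighting shown (raw count times Log[N/df]) is just one common TF-IDF variant, chosen here as an assumption.

```mathematica
(* Invented sample text *)
text = "The cat sat on the mat. The dog sat on the log. Cats and dogs play.";

(* 1. Split into sentences and give each one an ID *)
sentences = TextSentences[text];
ids = Range[Length[sentences]];

(* 2. Unique, lower-cased vocabulary of the whole text *)
tokens = TextWords[ToLowerCase[#]] & /@ sentences;
vocab = Union @@ tokens;

(* 3. Count every word in every sentence: sentence-by-word matrix *)
counts = Table[Count[t, w], {t, tokens}, {w, vocab}];

(* TF-IDF variant (an assumption): tf = raw count, idf = Log[N/df] *)
df = Total[Unitize[counts]];               (* number of sentences containing each word *)
idf = N[Log[Length[sentences]/df]];
tfidf = # idf & /@ counts;                 (* scale each row by the idf vector *)

(* 4. Show the result as a labelled table *)
TableForm[tfidf, TableHeadings -> {ids, vocab}]
```

With this variant, a word that occurs in every sentence gets weight 0, because Log[1] is 0.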

1. Separate my dataset (one big text) into subsets, one per sentence ({sentence 1}, {sentence 2}, ...), perhaps assigning an ID to each sentence.
2. Take a unique list of all the words in the text.
3. For each sentence, count whether each word appears in it.
4. Put the result in a table like the one above.

– Tom Peterson Apr 07 '19 at 13:26