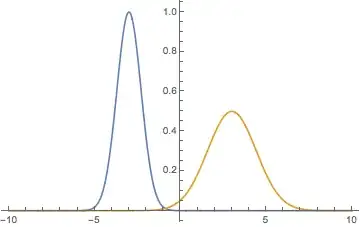

Assume we have two peaked, positive functions

f[x_] := Exp[-(x + 3)^2]

g[x_] := 1/2 Exp[-(x - 3)^2/4]

that look like

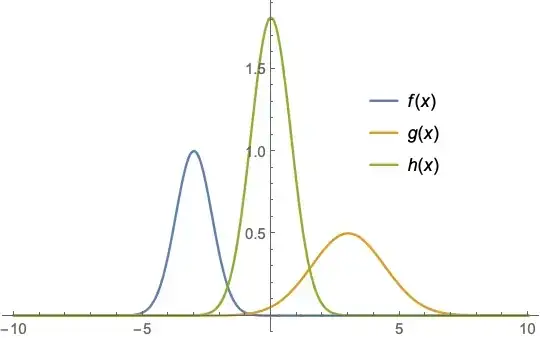

Would it be possible to numerically construct a morphed function between them, such as

h[x_] := 2/3 Exp[-4 (x)^2/9]

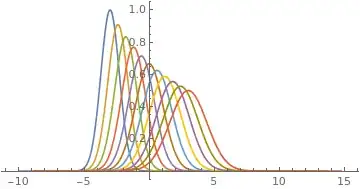

(shown in orange below)? Note that linear interpolation (blue) fails badly, as the graph below shows.
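To make the failure of linear interpolation concrete, here is a minimal sketch. The helper name `lin` and the blend parameter `t` are assumptions introduced for illustration only; `f`, `g` and `h` are the definitions from above.

```mathematica
(* the two given functions and the desired intermediate shape *)
f[x_] := Exp[-(x + 3)^2]
g[x_] := 1/2 Exp[-(x - 3)^2/4]
h[x_] := 2/3 Exp[-4 x^2/9]

(* hypothetical helper: pointwise linear blend; t = 0 gives f, t = 1 gives g *)
lin[t_][x_] := (1 - t) f[x] + t g[x]

(* compare the halfway blend with the desired h *)
Plot[{lin[1/2][x], h[x]}, {x, -8, 8},
 PlotRange -> All,
 PlotLegends -> {"linear blend, t = 1/2", "desired h"}]
```

At `t = 1/2` the blend is just the average of two well-separated bumps, so it keeps one peak near x = -3 and one near x = 3 and almost vanishes at x = 0, exactly where `h` has its maximum of 2/3.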

Please assume that f and g are black-box functions. The problem is clearly very simple when analytical expressions are given.

Because the problem is loosely formulated, the solution is not unique.

… a Exp[-b (x-c)^2])? – Chris K Nov 04 '19 at 10:31

… f[x]+g[x] by DiracDelta-distribution: f[x]+g[x] ~ a DiracDelta[x-b]. a is the area of f[x]+g[x], b is the centre of area. To visualize the result you might approximate the Dirac function by a limit (see comment @ChrisK). – Ulrich Neumann Nov 04 '19 at 12:46