There is a ton of good data buried in the Wolfram data stores; however, trying to access that in bulk tends to be excruciatingly painful since the access constructs are designed for accessing a single nugget at a time.

My current issue is that I'm pulling down the NY Times COVID-19 data from

https://raw.githubusercontent.com/nytimes/covid-19-data/master/us-counties.csv

and I'd like to integrate it with demographic data.

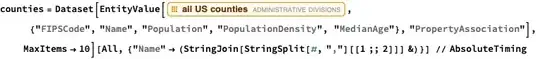

This winds up being pig-dog slow with each set of characteristics requiring 5 seconds for each of the counties. To illustrate, let's pull some data for Texas

focusState = "Texas";

adminDataFields = {"Population", "PopulationDensity",

"MedianHouseholdIncome", "AverageCommute", "PersonsPerHousehold",

"MedianAge"};

These are the Texas counties which have had a COVID-19 report according to the NY Times. The list can grow over time (I'm actually interested at the national level) so I have to refresh the list as more counties get added.

focusStateCounties = {"Harris", "Dallas", "Tarrant", "Bexar",

"Travis", "Fort Bend", "Denton", "Collin", "Galveston", "El Paso",

"Lubbock", "Montgomery", "Brazoria", "Webb", "Hidalgo", "Cameron",

"Brazos", "Williamson", "Jefferson", "Bell", "Smith", "Hays",

"Nueces", "McLennan", "Victoria", "Potter", "Randall", "Wichita",

"Matagorda", "Guadalupe", "Ellis", "Hardin", "Gregg", "Bowie",

"Ector", "Taylor", "Orange", "Midland", "Johnson", "Washington",

"Comal", "Tom Green", "Nacogdoches", "Shelby", "Chambers",

"Kaufman", "Wharton", "Walker", "Bastrop", "Fayette", "Coryell",

"Moore", "Liberty", "Hunt", "Grayson", "Angelina", "Rusk",

"Rockwall", "Donley", "Calhoun", "Val Verde", "Hood", "Hockley",

"Harrison", "Gray", "Waller", "Navarro", "Erath", "Castro",

"Andrews", "Wilson", "Medina", "Kendall", "Hale", "Van Zandt",

"Polk", "Lamar", "DeWitt", "Brown", "Starr", "San Patricio",

"San Augustine", "Panola", "Milam", "Maverick", "Limestone",

"Deaf Smith", "Austin", "Uvalde", "Upshur", "Terry", "Parker",

"Hill", "Henderson", "Colorado", "Cherokee", "Willacy", "Jasper",

"Grimes", "Dawson", "Cass", "Caldwell", "Burnet", "Burleson",

"Atascosa", "Wood", "Tyler", "Titus", "Palo Pinto", "Lavaca",

"Jackson", "Hopkins", "Fannin", "Blanco", "Young", "Wise",

"Trinity", "San Jacinto", "Oldham", "Lynn", "Llano", "Live Oak",

"Goliad", "Eastland", "Comanche", "Clay", "Camp", "Swisher",

"Robertson", "Morris", "Martin", "Leon", "Lee", "Lampasas",

"Kleberg", "Kerr", "Karnes", "Jim Wells", "Hutchinson", "Gonzales",

"Gillespie", "Crane", "Aransas", "Anderson", "Zapata", "Scurry",

"Sabine", "Pecos", "Newton", "Montague", "Mitchell", "McCulloch",

"Mason", "Madison", "Lamb", "Knox", "Jones", "Jack", "Hemphill",

"Hansford", "Hamilton", "Gaines", "Frio", "Franklin", "Floyd",

"Falls", "Dickens", "Delta", "Dallam", "Crosby", "Callahan", "Bee",

"Bandera", "Yoakum", "Hall", "Cooke"};

As an aside, Wolfram is twitchy on it's lookups so, if you want to use the NY Times names, some conditioning is required along the lines ...

mmaLocations = Map[

StringDelete[

Capitalize[ StringDelete[#, "city" | "borough" | "-" | "'" | "."],

"AllWords"], Whitespace] &,

countyAndStateDoublets,

{2}

]

With that introduction, executing the line below takes over five seconds for me from my office here in Michigan. Multiply 5 seconds by the number of counties in the US and you wind up at a large number.

AbsoluteTiming[

QuantityMagnitude[

AdministrativeDivisionData[{StringDelete[#1, Whitespace | "." | "-"],

StringDelete[focusState, Whitespace | "."], "UnitedStates"},

adminDataFields]

] &@"Brazos"

]

Obviously, once I let this grind for the excruciatingly long time required, I archive it to disk and only refresh my demographics every few days.

What I'm hoping is that I'm missing something obvious here since I run into this sort of situation fairly regularly where the Wolfram data access seems to be designed for interactive rather than programmatic (Wolfram Language) access.

Any suggestions?

Thanks,

Mark.

JoinAcross, so the last part should be much much simpler - but that's the code I have lying around. – Victor K. Apr 11 '20 at 00:34