I have a few thousand image URLs that I want to download asynchronously. How can I do that while monitoring progress? I'm really asking for five things:

- Show dynamic progress in a progress bar

- Downloads must be asynchronous

- Avoid any filename collisions

- Save with correct file extensions (even if not present in url)

- Show list of failed download tasks and why

Here's an example to get started:

Monitor[
  URLDownload[
    WebImageSearch["dog", "ImageHyperlinks", MaxItems -> 10],
    "~/Downloads/"
  ]
]

Updated response to comment

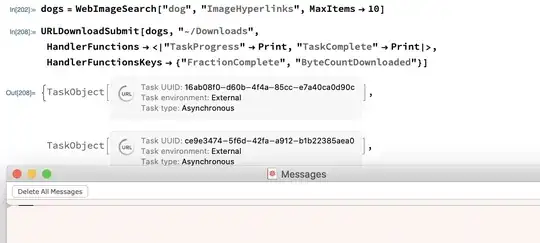

I don't believe URLDownloadSubmit takes a directory. This is the behavior I see (no progress indication):

dogs = WebImageSearch["dog", "ImageHyperlinks", MaxItems -> 10]

URLDownloadSubmit[dogs, "~/Downloads",
  HandlerFunctions -> <|"TaskProgress" -> Print, "TaskComplete" -> Print|>,
  HandlerFunctionsKeys -> {"FractionComplete", "ByteCountDownloaded"}]

And the filenames are wrong:


`URLDownloadSubmit[dogs, "~/Desktop/test", HandlerFunctions -> <|"TaskProgress" -> Print, "TaskComplete" -> Print|>, HandlerFunctionsKeys -> {"FractionComplete", "ByteCountDownloaded"}]` to print the `FractionComplete` and `ByteCountDownloaded`. It's probably a documentation bug that there's no good example of how to do it, but it is mentioned in the usage of `URLDownload` and `URLDownloadSubmit`. You can use `ProgressIndicator[Dynamic[frac]]` and `frac = #FractionComplete` instead of `Print`. – Carl Lange Aug 12 '20 at 21:26
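The suggestion in this comment could be extended to a whole list of URLs roughly as follows. This is only a minimal sketch under several assumptions: `urls` is a non-empty list of URL strings (for example the `dogs` list above, if it contains plain strings), each `URLDownloadSubmit` call takes a single URL and a target file, and the names `dir`, `done`, and `tasks` are hypothetical. It swaps the per-download `FractionComplete` value for a simple count of finished tasks, since that is easier to aggregate over many files.

```wolfram
(* Assumption: urls is a non-empty list of URL strings. *)
(* dir is a hypothetical target directory; CreateDirectory[] makes a fresh temporary one. *)
dir = CreateDirectory[];
done = 0;

tasks = MapIndexed[
   Function[{url, idx},
    URLDownloadSubmit[
     url,
     (* one numbered target file per URL, so names cannot collide *)
     FileNameJoin[{dir, "image-" <> IntegerString[First[idx]]}],
     (* count each task as it finishes *)
     HandlerFunctions -> <|"TaskFinished" -> ((done++) &)|>]],
   urls];

(* fraction of submitted tasks that have finished so far *)
ProgressIndicator[Dynamic[done/Length[urls]]]
```

The numbered file names avoid collisions but carry no extension, and the sketch does not record which downloads failed or why, so those two requirements would still need separate handling.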