The optimization of the production of a sparse symmetric matrix with a dense vector has existed for a long time. Someone once compared the matrix operations of Mathematica with MATLAB, and then came to the conclusion: the slowness is due to the copying of the data. Now I want to solve this problem through Mathematica's LibraryLink, such as calling C++ Armadillo or C++ Eigen, without copying data. In the past, Szabolcs's LTemplate successfully called Armadillo, and Henrik Schumacher successfully called Eigen. Now I have three methods to calculate the production of a sparse symmetric matrix with a dense vector. I can use Mathematica alone, calling Armadillo through librarylink and calling Eigen through librarylink. My code as follow:

- Construct a sparse symmetric matrix H

Clear["`*"]

n=300;

nn=n*n;

v=1.;

{minbegin,minend}={Range[n+1,(n-3)*n+1,2*n],Range[2*n,(n-2)*n,2*n]};

H0=SparseArray[Automatic,{nn,nn},0,{1,{Flatten[Range[0,nn-n,1]~Append~ConstantArray[nn-n,n]],n+{#}&/@Range[1,nn-n,1]},ConstantArray[v,nn-n]}]+SparseArray[(#->v)&/@({Table[{i,i+n-1},{i,Range[minbegin[[#]]+1,minend[[#]],1]}]&/@Range[Length[minbegin]]}//Flatten//Partition[#,2]&),{nn,nn}]+SparseArray[(#->v)&/@({Table[{i,i+n*(n-1)},{i,1,n,1}]}//Flatten//Partition[#,2]&),{nn,nn}]+SparseArray[(#->v)&/@({Table[{i,i+n*(n-1)+1},{i,1,n-1,1}]}//Flatten//Partition[#,2]&),{nn,nn}]+SparseArray[(#->v)&/@({Table[{i,i+2*n-1},{i,minbegin}]}//Flatten//Partition[#,2]&),{nn,nn}]+SparseArray[(#->v)&/@({Table[{i,i+n*(n-2)+1},{i,{n}}]}//Flatten//Partition[#,2]&),{nn,nn}];

H=H0+Transpose[H0];

- Construct a dense vector

f1=(Cos[2*Pi*#]&/@RandomReal[{0,1},nn]);

- only use Mathematica

in: AbsoluteTiming[H.H.H.H.H.H.H.H.H.H.f1 // Total]

out: {1.6734,7.63163*10^6}

- Write a function to call Armadillo to implement the production, where I use shared memory. (You need to install LTemplate and Armadillo)

Needs["LTemplate`"]

code="#include <LTemplate.h>

#define ARMA_COUT_STREAM mma::mout

#define ARMA_CERR_STREAM mma::mout

#include <armadillo>

template<typename T>

arma::Mat<T> toArmaTransposed(mma::MatrixRef<T> m) {

return arma::Mat<T>(m.data(), m.cols(), m.rows(), false /* do not copy /, false / until resized */);

}

template<typename T>

mma::TensorRef<T> fromArmaTransposed(const arma::Mat<T> &m) {

return mma::makeMatrix<T>(m.n_cols, m.n_rows, m.memptr());

}

template<typename T>

arma::Col<T> toArmaVec(mma::TensorRef<T> v) {

return arma::Col<T>(v.data(), v.size(), false /* do not copy /, false / until resized */);

}

template<typename T>

mma::TensorRef<T> fromArmaVec(const arma::Col<T> &v) {

return mma::makeVector<T>(v.size(), v.memptr());

}

template<typename T>

arma::SpMat<T> toArmaSparseTransposed(mma::SparseMatrixRef<T> sm) {

return arma::SpMat<T>(

arma::conv_to<arma::uvec>::from(toArmaVec(sm.columnIndices())) - 1, // convert to 0-based indices; Mathematica uses 1-based ones.

arma::conv_to<arma::uvec>::from(toArmaVec(sm.rowPointers())),

toArmaVec(sm.explicitValues()),

sm.cols(), sm.rows()

);

}

template<typename T>

mma::SparseMatrixRef<T> fromArmaSparse(const arma::SpMat<T> &am) {

auto pos = mma::makeMatrix<mint>(am.n_nonzero, 2); // positions array

auto vals = mma::makeVector<double>(am.n_nonzero); // values array

mint i = 0;

for (typename arma::SpMat<T>::const_iterator it = am.begin();

it != am.end();

++it, ++i)

{

vals[i] = *it;

pos(i,0) = it.row() + 1; // convert 0-based index to 1-based

pos(i,1) = it.col() + 1;

}

auto mm = mma::makeSparseMatrix(pos, vals, am.n_rows, am.n_cols);

pos.free();

vals.free();

return mm;

}

class Arma {

public:

mma::RealTensorRef ArmadilloDot(mma::SparseMatrixRef<double> mmaH, mma::RealTensorRef mmaf2) {

arma::sp_mat H = toArmaSparseTransposed(mmaH);

arma::vec f2 = toArmaVec(mmaf2);

arma::vec F3;

F3 = H*H*H*H*H*H*H*H*H*H*f2;

return fromArmaVec<double>(F3);

}

};";

Export["Arma.h",code,"String"]

template=

LClass["Arma",

{

LFun["ArmadilloDot",{{LType[SparseArray,Real,2],"Shared"},{Real,1,"Shared"}},{Real,1}]

}

];

CompileTemplate[template,

"IncludeDirectories"->{"C:\Users\sidy\AppData\Roaming\Mathematica\Applications\LTemplate\armadillo-10.5.1\include"},

"LibraryDirectories"->{"C:\Users\sidy\AppData\Roaming\Mathematica\Applications\LTemplate\armadillo-10.5.1\examples\lib_win64"},

"Libraries"->{"libopenblas"},"CompileOptions"->{"-std=c++11","-O2","-fopenmp"}]

LoadTemplate[template]

arma=Make[Arma]

in: AbsoluteTiming[arma@"ArmadilloDot"[H, f1] // Total]

out: {1.11007,7.63163*10^6}

- Write a function to call Eigen to implement the production, where I use shared memory. (You need to install Eigen)

srcpath="~";

outpath="~";

Needs["CCompilerDriver`"];

Module[{opts,path,file,lib},If[!FileExistsQ[srcpath],CreateDirectory[srcpath]];

If[!FileExistsQ[outpath],CreateDirectory[outpath]];

file=Export[FileNameJoin[{srcpath,"cClipGeneralizedEigenvalues.cpp"}],"

#include <iostream>

#include <vector>

#include"WolframLibrary.h"

#include "WolframSparseLibrary.h"

#include<Eigen/Eigenvalues>

#include <Eigen/Sparse>

using namespace std;

using namespace Eigen;

EXTERN_C DLLEXPORT int cClipGeneralizedEigenvalues(WolframLibraryData libData, mint Argc, MArgument *Args, MArgument Res)

{

MTensor MArow = MArgument_getMTensor(Args[0]);

MTensor MAcol = MArgument_getMTensor(Args[1]);

MTensor MAval = MArgument_getMTensor(Args[2]);

MTensor MBvec = MArgument_getMTensor(Args[3]);

MTensor MCout;

libData->MTensor_new(MType_Real, 1, libData->MTensor_getDimensions(MBvec), &MCout);

mint Mlength = MArgument_getInteger(Args[4]);

mint n = MArgument_getInteger(Args[5]);

Eigen::Map<Eigen::VectorXd >Arow(libData->MTensor_getRealData(MArow),Mlength);

Eigen::Map<Eigen::VectorXd >Acol(libData->MTensor_getRealData(MAcol),Mlength);

Eigen::Map<Eigen::VectorXd >Aval(libData->MTensor_getRealData(MAval),Mlength);

Eigen::Map<Eigen::VectorXd >B(libData->MTensor_getRealData(MBvec),n);

Eigen::Map<Eigen::VectorXd >C(libData->MTensor_getRealData(MCout),n);

Eigen::SparseMatrix<double> A(n, n);

vector < Triplet < double > > triplets ;

for ( int i = 0 ; i < Mlength ; ++ i )

{

triplets . emplace_back ( Arow(i) , Acol(i) , Aval(i)) ;

}

A . setFromTriplets ( triplets . begin ( ) , triplets . end ( ) ) ;

C = AAAAAAAAAAB;

MArgument_setMTensor(Res, MCout);

return 0;

}","Text"];

lib=CreateLibrary[{file},"cClipGeneralizedEigenvalues","TargetDirectory"->outpath,"IncludeDirectories"->{"C:\Users\sidy\AppData\Roaming\Mathematica\Applications\eigen-3.4-rc1"},"CompileOptions"->{"-std=c++11","-O2","-fopenmp"}];

With[{libfile=lib},cClipGeneralizedEigenvalues::usage="";

cClipGeneralizedEigenvalues:=cClipGeneralizedEigenvalues=LibraryFunctionLoad[libfile,"cClipGeneralizedEigenvalues",{{Real,1,"Shared"},{Real,1,"Shared"},{Real,1,"Shared"},{Real,1,"Shared"},Integer,Integer},{Real,1,Automatic}];]]

in: HCSR=ArrayRules[H][[1;;-2]]/.Rule->List//Flatten//Partition[#,3]&;

{row,col,val}={HCSR[[;;,1]]-1,HCSR[[;;,2]]-1,Developer`ToPackedArray[HCSR[[;;,3]]]};

AbsoluteTiming[cClipGeneralizedEigenvalues[row,col,val,f1,Length[val],nn]//Total]

out: {2.0971,7.63163*10^6}

- Compare

tab=Table[

nn=n*n;

v=1.;

{minbegin,minend}={Range[n+1,(n-3)*n+1,2*n],Range[2*n,(n-2)*n,2*n]};

H0=SparseArray[Automatic,{nn,nn},0,{1,{Flatten[Range[0,nn-n,1]~Append~ConstantArray[nn-n,n]],n+{#}&/@Range[1,nn-n,1]},ConstantArray[v,nn-n]}]+SparseArray[(#->v)&/@({Table[{i,i+n-1},{i,Range[minbegin[[#]]+1,minend[[#]],1]}]&/@Range[Length[minbegin]]}//Flatten//Partition[#,2]&),{nn,nn}]+SparseArray[(#->v)&/@({Table[{i,i+n*(n-1)},{i,1,n,1}]}//Flatten//Partition[#,2]&),{nn,nn}]+SparseArray[(#->v)&/@({Table[{i,i+n*(n-1)+1},{i,1,n-1,1}]}//Flatten//Partition[#,2]&),{nn,nn}]+SparseArray[(#->v)&/@({Table[{i,i+2*n-1},{i,minbegin}]}//Flatten//Partition[#,2]&),{nn,nn}]+SparseArray[(#->v)&/@({Table[{i,i+n*(n-2)+1},{i,{n}}]}//Flatten//Partition[#,2]&),{nn,nn}];

H=H0+Transpose[H0];

f1=(Cos[2*Pi*#]&/@RandomReal[{0,1},nn]);

{AbsoluteTiming[arma@"ArmadilloDot"[H,f1]//Total][[1]],

AbsoluteTiming[H.H.H.H.H.H.H.H.H.H.f1//Total][[1]],

HCSR=ArrayRules[H][[1;;-2]]/.Rule->List//Flatten//Partition[#,3]&;

{row,col,val}={HCSR[[;;,1]]-1,HCSR[[;;,2]]-1,Developer`ToPackedArray[HCSR[[;;,3]]]};

AbsoluteTiming[cClipGeneralizedEigenvalues[row,col,val,f1,Length[val],nn]//Total][[1]]},{n,100,1000,100}]

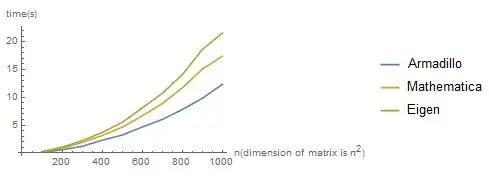

ListLinePlot[{Thread[{Range[100,1000,100],tab[[;;,1]]}],Thread[{Range[100,1000,100],tab[[;;,2]]}],Thread[{Range[100,1000,100],tab[[;;,3]]}]},PlotLegends->{"Armadillo","Mathematica","Eigen"},AxesLabel->{"n(dimension of matrix is n^2)","time(s)"}]

I found that Armadillo took the shortest time, followed by Mathematica and finally by Eigen. Is there any way to speed up my code?

Dot[matrix, Dot[matrix, vectror]]to ensure matrix vector priduct, try low level functions, e.g. https://reference.wolfram.com/language/LowLevelLinearAlgebra/ref/SYMV.html, or call blas directly, another option is to use cuda variant of blas – I.M. Jul 22 '21 at 17:33