I am trying to get an analytical expression for the derivatives of an algorithm explained here. I usually rely on automatic differentiation, but since this part of the function will not change, I thought I would implement an analytical solution if possible. Below is my attempt:

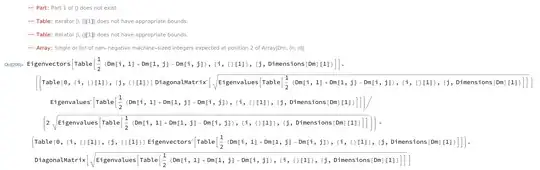
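For reference, the map that the code below implements, and its first-order derivative, can be written as follows. This is a sketch under assumptions the question does not state: Dm is symmetric with a zero diagonal (so $M$ is symmetric and its first row and column vanish), $U$ holds the normalized eigenvectors as columns, the nonzero eigenvalues are distinct and positive, and zero is a simple eigenvalue. The expressions for $d\lambda_k$ and $du_k$ are the standard first-order perturbation results for a symmetric matrix, not something taken from the linked algorithm.

$$
M_{ij} = \tfrac{1}{2}\left(D_{i1} + D_{1j} - D_{ij}\right),\qquad M = U\Lambda U^{\mathsf{T}},\qquad X = U\Lambda^{1/2}
$$

$$
dM_{ij} = \tfrac{1}{2}\left(dD_{i1} + dD_{1j} - dD_{ij}\right),\qquad d\lambda_k = u_k^{\mathsf{T}}\, dM\, u_k,\qquad du_k = \sum_{l \neq k} \frac{u_l^{\mathsf{T}}\, dM\, u_k}{\lambda_k - \lambda_l}\, u_l
$$

$$
dx_k = \sqrt{\lambda_k}\, du_k + \frac{d\lambda_k}{2\sqrt{\lambda_k}}\, u_k \quad (\lambda_k > 0),\qquad dx_k = 0 \quad (\lambda_k = 0)
$$

Here $u_k$ and $x_k$ are the $k$-th columns of $U$ and $X$. Because $M$ is linear in $D$, the derivative with respect to the pair $D_{ab} = D_{ba}$ follows by taking $dD$ to be 1 at $(a,b)$ and $(b,a)$ and 0 elsewhere.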

```mathematica
Mf[Dm_] := Table[(Dm[[i, 1]] + Dm[[1, j]] - Dm[[i, j]])/2, {i, Dimensions[Dm][[1]]}, {j, Dimensions[Dm][[1]]}]

func[Mm_] := Eigenvectors[Mm] . DiagonalMatrix[Sqrt[Eigenvalues[Mm]]]

D[func[Mf[Dm]], Array[Dm, {n, n}]]
```
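In the attempt above, Dm is a bare symbol and n has no value, so Mf[Dm] cannot take parts and Array[Dm, {n, n}] has no size. Note also that Eigenvectors returns the eigenvectors as rows. Even with a concrete symbolic matrix such as Array[d, {3, 3}], symbolic eigenvectors tend to become very large Root-based expressions. One alternative is to evaluate the first-order perturbation formulas numerically at a given Dm. Below is a minimal sketch of that approach, assuming a symmetric Dm with zero diagonal, distinct nonzero eigenvalues and a simple zero eigenvalue. The helpers eig, coords and dCoords are hypothetical names, the three points are made up, and the result is compared with a central finite difference along a symmetric perturbation of D[[2, 3]] and D[[3, 2]].

```mathematica
(* Mf as in the question, written with Length; it is linear in dm *)
Mf[dm_] := Table[(dm[[i, 1]] + dm[[1, j]] - dm[[i, j]])/2,
   {i, Length[dm]}, {j, Length[dm]}];

(* eigenvalues (near-zero ones chopped to exact 0) and eigenvectors as COLUMNS *)
eig[m_] := Module[{vals, vecs},
   {vals, vecs} = Eigensystem[m];
   {Chop[vals, 10^-10], Transpose[vecs]}];

(* forward map X = U.Sqrt[Lambda]; column signs are aligned with uRef
   because eigenvector signs are arbitrary *)
coords[dm_, uRef_] := Module[{vals, u, s},
   {vals, u} = eig[Mf[dm]];
   s = Sign[Diagonal[Transpose[u] . uRef]];
   u . DiagonalMatrix[s Sqrt[vals]]];

(* analytic directional derivative of X along a symmetric dD via first-order
   perturbation theory; assumes distinct eigenvalues and a simple zero *)
dCoords[dm_, dD_] := Module[{vals, u, a, n = Length[dm]},
   {vals, u} = eig[Mf[dm]];
   a = Transpose[u] . Mf[dD] . u;   (* a[[l, k]] = u_l . dM . u_k *)
   Transpose@Table[
     If[vals[[k]] == 0,
      ConstantArray[0., n],          (* column of the zero eigenvalue stays 0 *)
      Sqrt[vals[[k]]] Sum[If[l == k, 0, a[[l, k]]/(vals[[k]] - vals[[l]])] u[[All, l]], {l, n}] +
       a[[k, k]]/(2 Sqrt[vals[[k]]]) u[[All, k]]],
     {k, n}]];

(* made-up example: three points in the plane, Dm = squared distances *)
pts = {{0., 0.}, {1., 0.2}, {0.3, 2.}};
d0 = Outer[SquaredEuclideanDistance, pts, pts, 1];
dD = {{0., 0., 0.}, {0., 0., 1.}, {0., 1., 0.}};   (* perturb D[[2,3]] and D[[3,2]] together *)

u0 = Last[eig[Mf[d0]]];
h = 10.^-6;
fd = (coords[d0 + h dD, u0] - coords[d0 - h dD, u0])/(2 h);
an = dCoords[d0, dD];
Max[Abs[fd - an]]   (* should be close to 0 *)
```

Calling dCoords with the symmetric unit perturbation for each pair (a, b) gives the Jacobian one column at a time. If Dm does not come from a Euclidean configuration, some eigenvalues can be negative and Sqrt turns complex, so the sketch would need adapting.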

- How do I do this correctly? Ideally, I would like an analytical expression for the derivatives with respect to the matrix elements of Dm.
- Is it possible to use Mathematica to get analytical expressions for ML neural nets? My idea is to train a NN with PyTorch, then take the final trained model into Mathematica to obtain analytical derivatives, and use those in a more efficient production environment (basically switching off autograd, since we have a definite linear-algebraic form of the expression).
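On the second question: once a network is written out as an explicit expression, D can differentiate it symbolically. A minimal sketch, assuming a hypothetical 2-input network with one Tanh hidden layer of two units and a linear output. The weights are symbols a, b, c and c0, and the numbers substituted for them are made up, standing in for values exported from a trained PyTorch model. CForm then prints a gradient component as C-style source.

```mathematica
(* hypothetical 2-2-1 network: Tanh hidden layer, linear output *)
xs = {x1, x2};
w1 = Array[a, {2, 2}]; b1 = Array[b, 2]; w2 = Array[c, 2];
net = w2 . Tanh[w1 . xs + b1] + c0;

(* symbolic gradient with respect to the inputs *)
grad = D[net, {xs}];

(* made-up numbers standing in for trained weights *)
weights = Thread[Flatten[{w1, b1, w2, c0}] ->
    {0.5, -0.3, 0.8, 0.1, 0.2, -0.4, 1.5, -0.7, 0.05}];
gradNum = grad /. weights;

(* C-style source for the first gradient component *)
CForm[gradNum[[1]]]
```

Whether this beats autograd in production likely depends on the network size, since the symbolic expression grows with every layer.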

This is my first time using Mathematica on an actual problem, so sorry if this is a naive question.

{j, Dimensions[Dm][[2]]} instead of the first part? – IntroductionToProbability Nov 16 '22 at 16:30