I wondered why Wolfram Cloud and Windows 11 give different results for the following expression, from the question "Evaluate $\int_{0}^{\pi/2} \cos^a (x)\sin(ax)\,dx$ using Mathematica":

E^(-((4 I \[Pi])/5)) Beta[-1, -(6/5), 11/5] // N

I applied FullSimplify first, because that gives better results. It should not matter, since this is school math. The difference appears when the components -0.8090169943749475/0.587785252292473 change to -0.8090169943749473/0.5877852522924732 for no apparent reason, but that is not what this question is about:

E^(-((4 I \[Pi])/5)) Beta[-1, -(6/5), 11/5] //FullSimplify//N
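A high-precision reference helps show which platform's digits are closer to the exact value. The minimal sketch below compares both machine-precision routes against such a reference. It assumes that `N[expr, 30]` uses arbitrary-precision arithmetic that does not depend on the platform's machine math library.

```mathematica
(* The expression from the question, kept exact *)
expr = E^(-((4 I Pi)/5)) Beta[-1, -(6/5), 11/5];

(* The two machine-precision routes compared in the question *)
plain = N[expr];
simplified = N[FullSimplify[expr]];

(* 30-digit reference from arbitrary-precision arithmetic *)
reference = N[expr, 30];

(* InputForm prints every significant digit of a machine number *)
Print[InputForm[{plain, simplified}]];
Print[reference];

(* Deviation of each route from the reference; SetPrecision keeps the exact
   binary value of a machine number, so the subtraction happens at 30 digits *)
Print[N[SetPrecision[#, 30] - reference] & /@ {plain, simplified}];
```

Running this on Windows and in the cloud and comparing the printed deviations shows which route, on which machine, lands closer to the reference.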

So, the result on Windows is -6.6974 - 8.88178*10^-16 I (in full: -6.697403197294095 - 8.881784197001252*10^-16 I). The Linux-based Wolfram Cloud gives -6.6974 - 1.33227*10^-15 I.
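The sizes of these imaginary parts are suggestive. Doubles with magnitude between 4 and 8 are spaced 2^(2-52) = 2^-50 ≈ 8.88*10^-16 apart (1 ulp). The Windows imaginary part is therefore one ulp of the real part, and the Linux one is about 1.5 ulp. The integral in the title is real for real a, so the exact value is presumably real. Both imaginary parts then look like rounding residue at the scale of the real part rather than meaningful digits.

A small sketch of that arithmetic follows. The helper `ulp` is a hypothetical name of my own.

```mathematica
(* 1 ulp (spacing of doubles) for |x| in [2^e, 2^(e+1)) is 2^(e - 52) *)
ulp[x_?NumericQ] := 2^(Floor[Log2[Abs[x]]] - 52);

realPart = -6.697403197294095;
ulp[realPart] == 2^-50   (* True, since |realPart| lies in [4, 8) *)

(* Imaginary parts reported on Windows and on the cloud, in units of ulp[realPart];
   roughly -1 and -1.5 *)
{-8.881784197001252*^-16, -1.33227*^-15}/ulp[realPart]
```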

Why do you think that happens?

I traced the evaluation as described in this answer: https://mathematica.stackexchange.com/a/271955/82985

After 20 minutes of debugging, I found that the evaluation apparently reaches

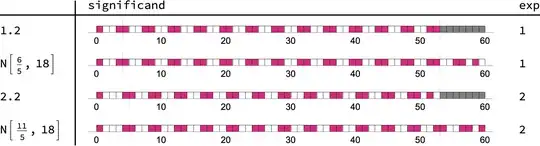

1/(-1.)^1.2. This step lowers the accuracy (why?) and thus loses a 1 in the imaginary part, on both Windows and Linux. On Linux only, it also introduces an error in the real part.
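One plausible reason the trace reaches 1/(-1.)^1.2 rests on an assumption I have not checked in the trace: that Mathematica evaluates this Beta through the standard hypergeometric representation of the incomplete beta function. With z = -1, a = -6/5, b = 11/5, the factor z^a is (-1)^(-6/5) = 1/(-1)^(6/5), which is exactly the number in the trace. The same identity suggests that the full expression is real, so a nonzero imaginary part in the result is rounding error:

$$
\mathrm{B}(z;a,b)=\frac{z^{a}}{a}\,{}_2F_1(a,\,1-b;\,a+1;\,z)
$$

$$
\mathrm{B}\!\left(-1;-\tfrac{6}{5},\tfrac{11}{5}\right)=\frac{(-1)^{-6/5}}{-6/5}\,{}_2F_1\!\left(-\tfrac{6}{5},-\tfrac{6}{5};-\tfrac{1}{5};-1\right),\qquad (-1)^{-6/5}=e^{-6\pi i/5}=\frac{1}{(-1)^{6/5}}
$$

$$
e^{-4\pi i/5}\,\mathrm{B}\!\left(-1;-\tfrac{6}{5},\tfrac{11}{5}\right)=-\tfrac{5}{6}\,e^{-2\pi i}\,{}_2F_1\!\left(-\tfrac{6}{5},-\tfrac{6}{5};-\tfrac{1}{5};-1\right)=-\tfrac{5}{6}\,{}_2F_1\!\left(-\tfrac{6}{5},-\tfrac{6}{5};-\tfrac{1}{5};-1\right)\approx-6.6974
$$

Here $e^{-4\pi i/5}=-(-1)^{1/5}$ is the prefactor that FullSimplify produces. The ${}_2F_1$ series converges at $z=-1$ because $c-a-b=\tfrac{11}{5}>0$, and with real parameters its value is real.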

So that is why. Are you aware that correctly rounded math libraries exist? https://core-math.gitlabpages.inria.fr/

Comparisons of libm accuracy also exist: https://members.loria.fr/PZimmermann/papers/accuracy.pdf
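The libm question can be made concrete for this particular step. The sketch below measures how far the machine value of 1/(-1.)^1.2 lies, in ulps of each component, from the exact principal-branch value e^(-6πi/5). A correctly rounded result is within half an ulp. The helper `ulp` is a hypothetical name of my own. The measured error covers the whole step (the power and the division), not a single libm call.

```mathematica
(* 1 ulp (spacing of doubles) for |x| in [2^e, 2^(e+1)) is 2^(e - 52) *)
ulp[x_?NumericQ] := 2^(Floor[Log2[Abs[x]]] - 52);

(* The machine-precision step seen in the trace *)
machine = 1/(-1.)^1.2;

(* Principal-branch exact value: 1/(-1)^(6/5) = Exp[-6 Pi I/5], evaluated to 30 digits *)
reference = N[Exp[-6 Pi I/5], 30];

(* SetPrecision keeps the exact binary value of the machine number, so the
   differences below are taken in 30-digit arithmetic *)
machineHP = SetPrecision[machine, 30];

(* Error of each component in ulps; a correctly rounded component has |error| <= 1/2 *)
errorsInUlps = N[{
    (Re[machineHP] - Re[reference])/ulp[Re[machine]],
    (Im[machineHP] - Im[reference])/ulp[Im[machine]]}];

Print[InputForm[machine]];
Print[errorsInUlps];
```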

Trace[-(-1)^(1/5) Beta[-1, -(6/5), 11/5] // N, TraceInternal -> True, TraceDepth -> 10]

gives this on my Windows 11 machine: https://pastebin.com/h9espi8G (Wolfram Cloud: https://pastebin.com/DGHQzaQX).
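In a notebook, machine numbers are displayed with six significant digits by default. Values in a displayed trace can therefore look identical, or look miscopied, when they differ only in digits that are not shown. The sketch below writes the same trace in InputForm, which keeps every digit, so that the Windows and Cloud traces can be compared with a plain text diff. The file name is hypothetical.

```mathematica
(* Same trace as in the question, stored instead of displayed *)
tr = Trace[N[-(-1)^(1/5) Beta[-1, -(6/5), 11/5]],
   TraceInternal -> True, TraceDepth -> 10];

(* Hypothetical output file; run once on each machine and diff the files *)
file = FileNameJoin[{$TemporaryDirectory, "beta-trace.txt"}];

(* InputForm writes machine reals with all of their significant digits *)
Export[file, ToString[InputForm[tr]], "Text"];
```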

P.S. So there are 3 bugs here:
1. On Linux, rounding at machine (floating-point) precision is poor, and it cannot be controlled with N, as in 1/(-1.)^1.2. Even on Windows the result is off by one in the last digit, maybe because of the AMD/Microsoft libm. That is still an error.
2. The general solver apparently works at a variable precision that differs from the machine-precision default. Even so, the imaginary part is one digit less precise (or it simply hits an error and sees 0 instead of 1).
3. On Windows, Trace sometimes seems to copy values incorrectly for no apparent reason (maybe because of some automatic precision tracking; I don't know).

P.S.2 I opened a thread on Wolfram Community: https://community.wolfram.com/groups/-/m/t/2851685

"13.2.0 for Mac OS X ARM (64-bit) (November 18, 2022)" versus "13.2.0 for Linux x86 (64-bit) (December 12, 2022)": I get a 1 ulp difference in numericizing one factor, N[Beta[-1, -(6/5), 11/5]], and no difference in numericizing the -(-1)^(1/5) factor from the full-simplified expression. This accounts for the results on macOS and Linux. (2) Different rounding errors from different expressions should be expected (it's a chapter in most num. anal. books), so if there is a point to comparing simplified and unsimplified expressions, it needs to be made clearer. – Michael E2 Mar 15 '23 at 21:58

…FullSimplify, if the question is really about numericizing -(-1)^(1/5) Beta[-1, -(6/5), 11/5]. The result of FullSimplify is the same on all systems, isn't it?) – Michael E2 Mar 16 '23 at 02:37

myBeta[x_, a_, b_] = MeijerGReduce[Beta[x, a, b], x] // Activate; – Michael E2 Mar 16 '23 at 02:40

…Beta[] in the body of the question or just the expression 1/(-1.)^1.2 in the title? – Michael E2 Mar 16 '23 at 13:51
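The first comment suggests numericizing each factor on its own. A minimal sketch for comparing machines that way: evaluate each factor of the full-simplified expression separately, print it in InputForm (all significant digits), and print $Version to label the output. Diffing these lines between Windows, Linux, and macOS should show which factor carries a 1 ulp difference.

```mathematica
(* The two factors of the full-simplified expression, kept exact *)
factors = {-(-1)^(1/5), Beta[-1, -(6/5), 11/5]};

(* Numericize each factor separately and print every digit, so the lines
   produced on different machines can be compared directly *)
Do[Print[InputForm[N[f]]], {f, factors}];

(* Identify the build, since results may depend on version and platform *)
Print[$Version];
```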