The Problem

I have a fairly large amount of data that I'm performing a lot of computation on. My typical M.O. in Mathematica is to use Table to generate a nested array to store my results, and then refer back to specific columns for additional computation and plotting. Currently, generating the array takes ~40 minutes on a pretty beefy personal machine, using ParallelTable for the computation. While prototyping additional analysis, I frequently encounter crashes or hangs that force me to restart the kernel and repeat that 40 minutes of computation (which is pretty frustrating).

Ideally, I would be able to export the output of that computation to some kind of file that I could then import into Mathematica, instead of having to perform the computation repeatedly. I usually use .csv, but that doesn't work well for this data because it has nested array elements which can vary in size (depending on the initial raw data).

The Question

Is there a file format or storage solution that would allow me to keep my computed data as a nested array so that it can be easily imported again? For example, MATLAB has the save command, which writes the workspace variables to a file and makes recovering from a crash easy.

Alternatively, is there a better or more idiomatic solution for storing the results of my computation that I should be using?

EDIT:

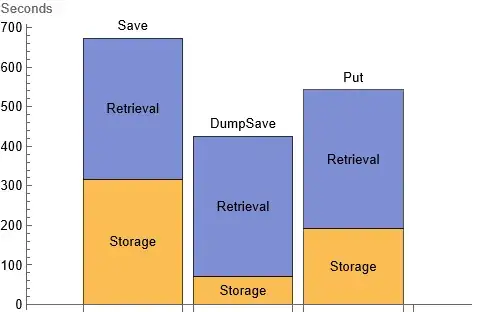

As pointed out in the comments, there are at least 3 functions for saving Mathematica objects, Save, DumpSave, and Put.

Timing the three options on my system indicates that DumpSave is the best option for me:

Additionally, DumpSave produces a much smaller file than Save (~700 MB vs. ~1800 MB).

The main bottleneck now appears to be loading the data back in, but that is still roughly an order of magnitude faster than repeating the computation every time the kernel crashes.
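For reference, here is a minimal sketch of the DumpSave round trip. The small ragged table, the symbol name `results`, and the file name `results.mx` are placeholders standing in for my real data and paths, not what I actually ran:

```mathematica
(* Placeholder for the expensive computation: a nested array whose rows differ in length *)
results = Table[Range[i], {i, 1, 5}];

(* Hypothetical output path; any writable location works *)
file = FileNameJoin[{$TemporaryDirectory, "results.mx"}];

(* DumpSave writes the definition of the symbol results in binary form *)
DumpSave[file, results];

(* Simulate a fresh kernel by clearing the symbol, then reload the file *)
Clear[results];
Get[file];

(* The data is available again under the original symbol name *)
results
```

Note that Get on a DumpSave file restores the definitions of the saved symbols, so after loading, the data is accessed through the original symbol name rather than through the value returned by Get.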

[…] `DumpSave`, or `Save`. This question might help. – N.J.Evans Jul 13 '23 at 16:38

[…] `Save`. – BesselFunct Jul 13 '23 at 16:55

[…] `Put` and `Get` as shown in the answer to How to export and import these data, a duplicate? – creidhne Jul 13 '23 at 17:01

`Save` is extremely slow for this application (317s), and results in a 1.8 Gb file, 356s Import time. `DumpSave` does a much better job, at 71s, with a 638 Mb file, 5s Import time. – BesselFunct Jul 13 '23 at 17:03

`Put` takes 193s, and `Get` takes 350s to import the object created by `Put`. So, for my application, `DumpSave` appears to be a clear winner – BesselFunct Jul 13 '23 at 17:17

[…] `Get` or `Import` the output of `DumpSave`, which is very strange. It doesn't indicate that there's been an error, `Get` and `Import` just return empty arrays. – BesselFunct Jul 13 '23 at 17:35

[…] `Get` to work with the `DumpSave` archive, and it takes ~ 354s with the successful import. So, `DumpSave` is still the winner, but the limit is still retrieving the data. – BesselFunct Jul 13 '23 at 17:45

[…] `SetStreamPosition`. You might have to write the binary file yourself. – N.J.Evans Jul 14 '23 at 14:20