Introduction

There are a few ways to make such a table: (1) a symbolic way that seems conceptually clear and whose slowness is not prohibitive for a table of at most a few thousand entries; (2) using the table created by NDSolve in solving the equation by integrating its derivative either (a) directly or (b) correcting the errors in it; and (3) making a regular-interval table in two ways (my feeling is this should be unnecessary and it's a hold-over from human-use, printed tables). See also my answer to https://mathematica.stackexchange.com/a/27984, where I did some of these things.

Basically, we want a table of the form

Table[{d, x}, {d,...}]

where d = f[x] is given and x = InverseFunction[f][d] is sought. One of the simplest approaches is to reverse the entries in a table of the form

Table[{x, d}, {x,...}]

Finally, an equation f[x[d]] == d may differentiated to get f'[x[d]] x'[d] == 1 and solved with NDSolve, given that we know or can find an initial value. These relationships between the variables x and d may be applied to any equation f[x, d] == 0, except InverseFunction[f][g[d]] would apply only to equations in which the variables can be separated, f[x] == g[d], and InverseFunction[f] is computable (c.f. the inverse function theorem).

A table has to have a range. Since none was indicated, I'll pick 0 <= d <= maxd, where maxd = 100. The OP's function has odd symmetry, so it is unnecessary to create a table for negative values of d.

Some definitions

I'll keep the definitions in terms of a symbolic a. One can use Block[{a}, code] to keep it symbolic through part of a computation. It is occasionally helpful.

Needs["GeneralUtilities`"];

timing = AccurateTiming; (* Use AbsoluteTiming in V9 or search this site for timeAvg *)

a = 0.022;

lhs := (1/2) x Sqrt[1 + 4 a^2 x^2] + ArcSinh[2 a x]/(4 a)

dfn = Block[{a}, Function @@ {{x}, lhs}] (* d as a function of x *)

(* Function[{x}, 1/2 x Sqrt[1 + 4 a^2 x^2] + ArcSinh[2 a x]/(4 a)] *)

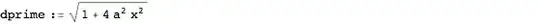

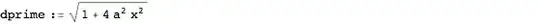

Block[{a}, dprime := Evaluate@Simplify[dfn'[x], x >= 0]];

Definition[dprime]

maxd = 100;

maxx = x /. FindRoot[lhs == maxd, {x, 0}]

(* 58.5729 *)

Initial condition: d equals zero at x equals zero.

dfn[0]

(* 0. *)

Symbolic solution

DSolve can be used to construct the InverseFuction that defines x as a function of d. (DSolve works better with a symbolic a than with an approximate 0.022.)

xfnD = Block[{a}, DSolveValue[{D[dfn[x[d]], d] == 1, x[0] == 0}, x, d]]

(* Function[{d},

InverseFunction[ArcSinh[2 a #1]/(4 a) + 1/2 #1 Sqrt[1 + 4 a^2 #1^2] &][d]] *)

NDSolve solution

One small trick: We need to change x to a function x[d] in the derivative dprime. We're using the inverse function theorem, $x'(d) = 1/d'(x)$, if I may abuse notation, to construct x as an interpolating function of d. NDSolve has many options to control how the integration is done, including the step size and the precision of the result..

xfnN = NDSolveValue[{x'[d] == 1/(dprime /. x -> x[d]), x[0] == 0},

x, {d, 0, maxd}, MaxStepSize -> 0.1];

I want to compare the timings of the methods, so I'll save it:

ndsolvetime = timing[

NDSolveValue[{x'[d] == 1/(dprime /. x -> x[d]), x[0] == 0}, x, {d, 0, maxd},

MaxStepSize -> 0.1];

]

(* 0.00448259 *)

Making tables

We can make tables of values of {d, x} either in the form

tab = Table[{d, xfn[d]}, {d,...}]

or

tab = Table[{lhs, x}, {x,...}]

If we have a table tab, we can create an (order-3) interpolating function with

xTest = Interpolation[tab]

or, if the tables are to be used for linear interpolation,

xTest = Interpolation[tab, InterpolationOrder -> 1]

One might be concerned with the accuracy of the table. We can estimate the maximum relative error of the approximation with the following function. Sometimes it gives a warning about convergence failure, it's hardly important for this purpose. A new seed is used each time, so one can reevaluate to examine the stability of the estimate. The relative error tends to grow near x == 0 although the absolute error diminishes. Consequently, we'll maximize the error over 1 < x < maxx by default. (Note that using cubic interpolation generally improves the error quite significantly, but I'll test the linear interpolation in what follows.)

maxrelerr[xfn_, xmin_: 1] :=

NMaximize[{Abs[(xfn[dfn[x]] - x)/x], xmin < x < maxx}, x,

Method -> {"RandomSearch", "SearchPoints" -> 300, "RandomSeed" -> RandomInteger[1000000]}]

Easiest: regular intervals of x

This method has the advantage of being exact (within machine precision, that is) on the points in the table.

nentries = 1000;

tab = Table[{lhs, x}, {x, 0., maxx, maxx/(nentries - 1)}]; // timing

(* 0.00625478 *)

xTest = Interpolation[tab, InterpolationOrder -> 1];

maxrelerr[xTest]

(* {8.30295*10^-7, {x -> 1.02577}} *)

Using the inverse function: regular intervals of d

This is also easy, although it is a bit slow.

tab = Table[{d, xfnD[d]}, {d, 0, maxd, 1/10}]; // timing

(* 0.710307 *)

xTest = Interpolation[tab, InterpolationOrder -> 1];

maxrelerr[xTest]

(* {2.41031*10^-6, {x -> 1.04883}} *)

Interestingly the first method seems more accurate. One should keep in mind that it will depend on the function being interpolated. This one is nice and gets straighter as d increases. The previous method happens to take larger steps, for this particular function, as d increases. It won't always happen that way.

This method is similar to Mr.Wizard's, albeit this one is a little slower.

Table[Quiet@FindRoot[lhs == d, {x, 0}], {d, 0, maxd, 1/10}]; // timing

(* 0.479001 *)

Extracting the NDSolve table

The table is stored inside the InterpolatingFunction (see What's inside InterpolatingFunction[{{1., 4.}}, <>]? for more). The list of values of d is given by

First @ xfnN["Coordinates"]

and the list of values of x is given by

xfnN["ValuesOnGrid"]

We can form the table via transposition of the lists.

tab = Transpose[{First@xfnN["Coordinates"], xfnN["ValuesOnGrid"]}]; // timing

tab // Length

(* 0.000373258

1008 *)

The total timing is roughly

ndsolvetime + 0.000373258

(* 0.00485584 *)

xTest = Interpolation[tab, InterpolationOrder -> 1];

maxrelerr[xTest]

(* {2.40168*10^-6, {x -> 1.04722}} *)

There is not really an advantage to using NDSolve in this case for two reasons. First, the simple form of the equation f[x] == d allows the first method to work. Second, the lhs is monotonic and the second derivative monotonically tends to zero. This method is more useful for more complicated equations.

Aside from interpolation, one source of error in this method is that NDSolve calculates the table entries only approximately (with a precision of around one half of machine precision). These usually can be improved to machine precision by a single step of Newton's method, which I'll share:

newx = x - (lhs - d)/dprime /.

{d -> First@xfnN["Coordinates"], x -> xfnN["ValuesOnGrid"]};

newtab = Transpose[{First@xfnN["Coordinates"], newx}];

xTest1 = Interpolation[tab]; (* cubic interpolation *)

xTest2 = Interpolation[newtab];

{maxrelerr[xTest1] // Quiet,

maxrelerr[xTest2] // Quiet}

{maxrelerr[xTest1, 20] // Quiet, (* error for larger d *)

maxrelerr[xTest2, 20] // Quiet}

(* {{9.07249*10^-9, {x -> 1.00002}}, {1.12738*10^-10, {x -> 1.04673}}}

{{3.41066*10^-10, {x -> 20.0006}}, {8.87196*10^-13, {x -> 26.5654}}} *)

Again since the graph is straightening out as d gets larger, the relative error is improved greatly for larger values of d because the error is primarily due to the error in the table.

Using NDSolve to construct regular intervals in d

tab = Table[{d, xfn[d]}, {d, 0, 100, 1/10}]; // timing

ndsolvetime + %

(* 0.00265543

0.00713802 *)

xTest = Interpolation[tab, InterpolationOrder -> 1];

maxrelerr[xTest]

(* {2.38447*10^-6, {x -> 1.04884}} *)

The relative error is not quite as good as the first method but comparable to the others.

Exporting

To export to Excel is easy:

Export[FileNameJoin[{$TemporaryDirectory, "foo.xls"}], tab]

(* "/var/folders/9d/68khy4s15sjf9qfpnhqz9tnc0000gn/T/foo.xls" *)

SystemOpen[%]

Other formats may be found in the Listing of All Formats.

Summary

All the methods are reasonably fast for a one-time table generation. The accuracy of each may be controlled by controlling the step size. I think it is important to examine the accuracy of the generated table and adjust the steps to get the desired accuracy. For the OP's particular example, the first method wins hands-down. NDSolve is not shown to advantage in this problem, but it is normally what I use for getting a numerical approximation to a function.