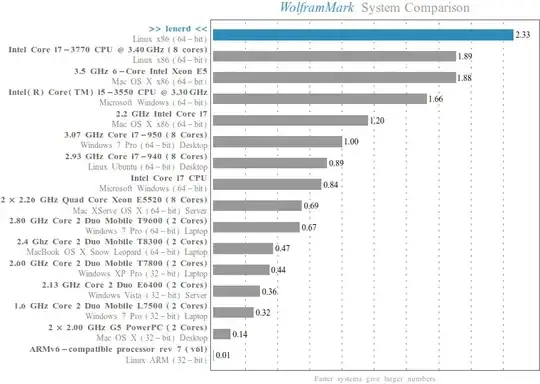

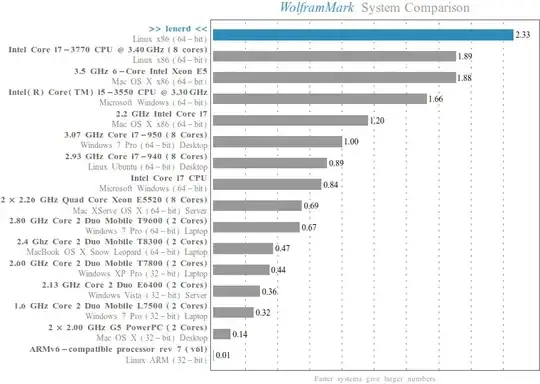

Let me get you started by sharing some insights. All of this is purely subjective. When I buy a new computer, I take some time to study the current state of the art in hardware. You will never know everything, because there are simply too many topics, too many details and too much to read, but with some research you can get a pretty good idea. The last time I did this for my own PC was about 2 years ago. Here is my benchmark result from today in Mathematica 10.0.2 (with overclocked settings in the BIOS):

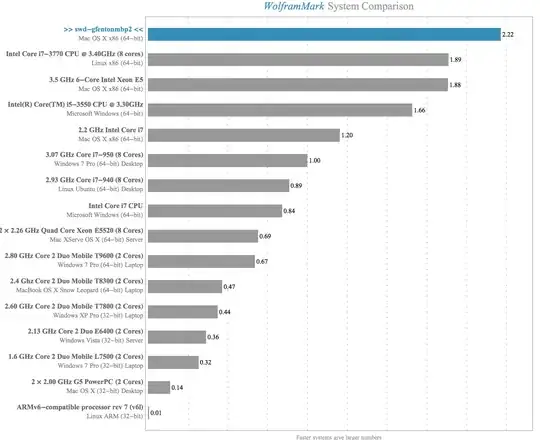

Let me tell you up front: you buy this kind of benchmark with money. There is no way of cleverly choosing cheap hardware and ending up with a supercomputer. The problem is that the relationship between money and computational power is not linear: near the top end, the price rises much faster than the performance (roughly exponentially). It always follows this kind of curve:

So if the second-best processor is already very good, buying the best one will probably cost you much more, although the speed increase is barely noticeable. When you have a fixed budget, keep this in mind: you can probably save some money in one place and spend it in another, where it has a bigger impact on the overall power of your system.

If you work a lot with graphics, you should definitely care about the graphics card. The rendering of 3D graphics is done with OpenGL and runs entirely on the graphics card, although the creation of the graphics does not! For me, the choice here was always simple, because I started with NVidia when they introduced CUDA and have used it ever since. OK, once I was forced to use something else, because the Apple folks had switched to ATI.

Graphics cards are expensive; they usually cost much more than your mainboard. The German NVidia page gives you a pretty good overview of what the GeForce series costs. If you want to compare cards, look at the number of CUDA cores and how much RAM they have. Note that NVidia cards are usually built by other manufacturers, so you will find the same card (say, a GeForce GTX 980) from different vendors like MSI, ASUS, Gainward, ZOTAC, etc. They can differ in some details. I usually choose ASUS because I often use ASUS mainboards, but I guess this is more a matter of faith and doesn't really have an impact.

The second big package comes as a triple: CPU, RAM and mainboard. This is because the mainboard needs to have the correct socket for the CPU, and both the CPU and the mainboard need to support the RAM size and type you want. I usually start by deciding which CPU I want. Intel CPUs are my favorite for two reasons: when I program something in C/C++, I use the Intel compiler, which works best with Intel's own CPUs. Additionally, Mathematica uses Intel libraries for its numerical algorithms too. Choosing the CPU is probably the hardest part, because there are zillions of different types and revisions, which differ not only in frequency and number of cores and threads, but also in things like L1/L2/L3 cache sizes, when they were built, etc. What you probably want is a quad-core with hyper-threading, which gives you 8 hardware threads. If your budget is high enough, you could look at the i7 series. In my two-year-old PC I have an i7-2700K at 3.5 GHz with 8 threads, which still rocks.

For the mainboard, I usually go for a gaming product, because these often support features like overclocking. The important part of a mainboard is the chipset that does the work. I know that my Maximus IV Extreme-Z mainboard has an Intel chipset, but whether this is really necessary, I have no idea.

For RAM, I would suggest at least 8 GB. I do a lot of data processing, and I have to work with 3D data. With data like that, you can fill a large amount of RAM very quickly, which is why I chose 32 GB back then. Two points: (i) usually you don't need that much, but on the other hand, (ii) even 32 GB is sometimes not enough.
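To get a feeling for how quickly 3D data fills RAM, here is a back-of-the-envelope calculation written as a small Python sketch. It assumes 8 bytes per voxel, which is the size of a 64-bit floating-point number (the way Mathematica stores machine-precision reals in a packed array); the cube sizes are purely illustrative, not measured data, and the function name `volume_bytes` is hypothetical.

```python
GIB = 1024 ** 3  # bytes in one gibibyte


def volume_bytes(nx: int, ny: int, nz: int, bytes_per_voxel: int = 8) -> int:
    """Bytes needed to store an nx x ny x nz volume.

    The default of 8 bytes per voxel corresponds to 64-bit
    floating-point numbers (double precision).
    """
    return nx * ny * nz * bytes_per_voxel


if __name__ == "__main__":
    # Illustrative cube sizes (assumptions, not measurements)
    for n in (256, 512, 1024):
        size_gib = volume_bytes(n, n, n) / GIB
        # A processing step that returns a result of the same size needs a
        # second copy in memory, so the peak usage doubles.
        print(f"{n}^3 voxels: {size_gib:g} GiB, "
              f"with one same-size copy: {2 * size_gib:g} GiB")
```

For a 1024³ volume this gives 8 GiB for a single copy and 16 GiB as soon as one intermediate result of the same size exists, which illustrates why 8 GB can get tight and why even 32 GB is sometimes not enough.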

Regarding hard drives: if your budget allows it, buy two of them. Use an SSD for the operating system and a big conventional hard drive for your data. You will be surprised what a difference this makes, because one of the (often underestimated) bottlenecks is the transfer of data from the drive to RAM during startup.

As a final note: if you don't want to build your computer from parts but would rather buy a complete system, just go for a good gaming PC. These usually have exactly the properties of a very good computer because, well, they need to be powerhouses to run today's games.

`ParallelCombine` etc. across multi-cores. – alancalvitti Dec 20 '14 at 00:24