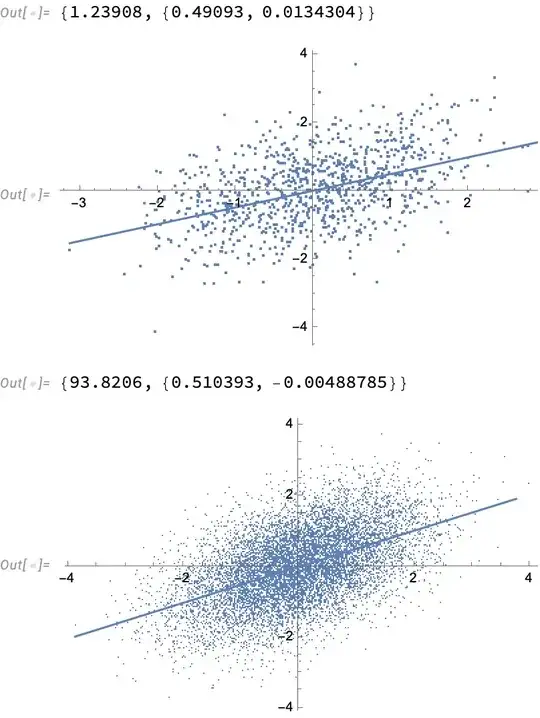

The Theil-Sen estimator finds the slope and intercept of a line passing through a set of points by calculating the median slope and median intercept of the set of lines passing through all possible distinct point pairs. It is spectacular at fitting a line through data containing outliers.

Here is a reasonably efficient way of calculating this for medium-sized sets of points:

slope[data:{{_,_}..}]:=Median[

Join@@Table[

(data[[;;-(n+1),2]] - data[[n+1;;,2]]) / (data[[;;-(n+1),1]] - data[[n+1;;,1]]),

{n,Length[data]-1}

]

]

intercept[data:{{_,_}..}]:=Median[

Join@@Table[

(data[[;;-(n+1),1]] data[[n+1;;,2]] - data[[n+1;;,1]] data[[;;-(n+1),2]]) /

(data[[;;-(n+1),1]] - data[[n+1;;,1]]),

{n,Length[data]-1}

]

]

However, on a machine with 16 GB of RAM, this runs out of memory when processing somewhere between 20,000 points and 50,000 points. How can the code be made more memory-efficient to operate on bigger datasets?

Here is a way of generating an example value for data:

sampleData[nPoints_Integer?Positive]:=(

SeedRandom[0];

datax = 10 N[Normalize[Range[nPoints],Max]];

Transpose[{

datax,

1 + 0.001 datax + RandomReal[NormalDistribution[0,0.01],nPoints] +

datax RandomChoice[{0.01,1-0.01}->{0.02,0},nPoints]

}]

)

n log(n)or so fornpoints? I think I know of an approach that uses maybeO(n)storage but the time complexity would ben^2 log(n)and that's quite steep for the size range in question. And fishing through the details for an actual implementation would be not so easy. – Daniel Lichtblau Feb 04 '15 at 16:55