This question, which is still unanswered, might be relevant because it also involves NIntegrate over lists and Exp.

In the linked question I ran into slow evaluation of NIntegrate. I suspected that Mathematica somehow performs calculations over lists slowly, so that NIntegrate itself is not to blame for the poor performance, but I am not sure yet. Anyway, I tried to calculate the integral with the simplest method: boxing the area under the curve (a rectangle sum).
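The boxing method is a plain rectangle sum: every grid point x contributes a box of width h and height f(x), which is what `Total[0.01*Exp[-axis]]` computes. A minimal Python sketch of the same idea, for illustration only. The helper name `box_sum` is hypothetical, and the upper limit is lowered from 10000 to 50 (an assumption that changes nothing in double precision, since exp(-50) is about 2e-22):

```python
import math

def box_sum(f, a, b, h):
    """Rectangle ("boxing") approximation of the integral of f over [a, b].

    Every grid point a, a+h, ..., b contributes one box of width h and
    height f(x), mirroring Total[h*f[axis]] in the question.
    """
    n = round((b - a) / h)  # number of steps; the grid has n + 1 points
    return sum(h * f(a + i * h) for i in range(n + 1))

# The exact integral of exp(-x) over [0, infinity) is 1.
approx = box_sum(lambda x: math.exp(-x), 0.0, 50.0, 0.01)
print(approx)  # close to 1.005, not 1: each box uses its left-edge height
```

The result lands near 1.005 rather than 1 because on each interval the box takes the height at its left edge, where exp(-x) is largest.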

Consider this:

```
<<Developer`
axis = ToPackedArray@Table[1.*i, {i, 0, 10000, 0.01}];
Total[0.01*Exp[-axis]] // AbsoluteTiming

{10.108011, 1.0050083333194446}
```
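The value 1.00500833... is not a rounding problem; it is the expected bias of this box sum. A short check, writing h = 0.01 for the step and N = 10^6 for the number of steps (notation introduced here only for the derivation):

$$\sum_{i=0}^{N} h\,e^{-ih} = h\,\frac{1-e^{-(N+1)h}}{1-e^{-h}} \approx \frac{h}{1-e^{-h}} = 1 + \frac{h}{2} + \frac{h^{2}}{12} - \frac{h^{4}}{720} + \cdots$$

With h = 0.01 the first three terms give 1 + 0.005 + 0.0000083333 = 1.0050083333, which agrees with the printed result. The discarded factor e^{-(N+1)h} = e^{-10000.01} is far below anything a double can represent.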

It is really slow compared to Matlab. Then I tried:

```
Exp[-axis]; // AbsoluteTiming

{8.998900, Null}
```

and

```
Sin[-axis]; // AbsoluteTiming

{0.009001, Null}
```

It seems that Exp on a list is really slow: about 1000 times slower than Sin on the same list.

Compiling helps a lot:

```
exp = Compile[{{axis, _Real, 1}},
  Apply[Plus, 0.01*Exp[-axis]],
  Parallelization -> True, CompilationTarget -> "C"
]
exp[axis] // AbsoluteTiming

{0.062006, 1.0050083333194315}
```

But Matlab is still about ten times faster.

Matlab code:

```
axis = 0:0.01:10000;
tic
sum(exp(-axis).*0.01)
toc

ans =
    1.0050

Elapsed time is 0.006555 seconds.
```

Why is Mathematica slow in this case and how can I make it faster?

I think this happens because Mathematica keeps extremely small numbers instead of rounding them to zero:

```
Exp[-1000000.]

3.296831478*10^-434295
```

How can I prevent this? Is it related to the issue at all?
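If that suspicion is right, the expensive entries are the ones whose exponential drops below the smallest normal machine double. A minimal Python sketch of how many entries of the original grid that affects (Python floats are the same IEEE 754 doubles Matlab uses). The cutoff -ln(DBL_MIN) ≈ 708.4 is standard double-precision arithmetic; the link to Mathematica's slow path is an assumption, consistent with the `Exp[-1000000.]` output above but not proven by it:

```python
import math
import sys

# In IEEE 754 double precision, exp of a huge negative argument underflows to 0.0.
print(math.exp(-1000000.0))  # 0.0

# Smallest positive *normal* double, about 2.2e-308; smaller results are
# subnormal (reduced precision) or exactly zero.
print(sys.float_info.min)

# exp(-x) falls below that value once x exceeds -ln(DBL_MIN), about 708.4.
cutoff = -math.log(sys.float_info.min)
print(cutoff)

# Count such entries on the question's grid 0, 0.01, ..., 10000 (1,000,001 points).
N_POINTS = 1_000_001
tiny = sum(1 for i in range(N_POINTS) if math.exp(-i / 100) < sys.float_info.min)
print(tiny, tiny / N_POINTS)  # roughly 93% of the entries
```

So roughly nine out of ten elements of `axis` sit beyond the normal double range. Matlab and compiled code simply carry on in hardware doubles for them; if Mathematica leaves machine arithmetic for these values instead, that alone could account for the slowdown.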

Edit

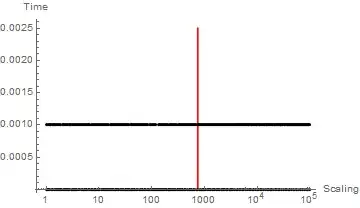

It seems that for Mathematica the length of the list isn't what matters, but the size of the values does!

```
axis2 = Table[Abs@Sin[1.*i], {i, 0, 10000, 0.01}];
Total[0.01*Exp[-axis2]] // AbsoluteTiming

{0.015000, 5558.330252144095}
```

Although the list has the same length as before, its values lie between zero and one, and the computation is several hundred times faster.
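The same kind of check on the Edit's list, again as a hedged Python sketch on IEEE doubles: every value of `Abs@Sin` lies in [0, 1], so every exponential stays at or above e^(-1) ≈ 0.368 and no entry comes anywhere near the underflow range. This fits the observation that the same-length list is fast, though it says nothing definitive about Mathematica's internals:

```python
import math
import sys

N_POINTS = 1_000_001  # same length as axis and axis2 in the question

total = 0.0
underflows = 0
smallest = math.inf
for i in range(N_POINTS):
    x = abs(math.sin(i / 100))    # axis2 value: always in [0, 1]
    e = math.exp(-x)              # therefore e >= exp(-1), about 0.368
    total += 0.01 * e             # same box sum as Total[0.01*Exp[-axis2]]
    smallest = min(smallest, e)
    if e < sys.float_info.min:    # would this entry leave the normal double range?
        underflows += 1

print(total, smallest, underflows)  # no entry underflows
```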
