By default, NIntegrate works with MachinePrecision, and its PrecisionGoal is set to Automatic, which is effectively a value near 6:

In[1]:= Options[NIntegrate, {WorkingPrecision, PrecisionGoal}]

Out[1]= {WorkingPrecision -> MachinePrecision, PrecisionGoal -> Automatic}

I need significantly higher accuracy when computing integrals similar to this one:

dpdA[i_] := NIntegrate[
  Cos[φ] Cos[i*φ] Exp[Sum[-Cos[j*φ], {j, 11}]], {φ, 0, Pi},
  Method -> {Automatic, "SymbolicProcessing" -> None}]

The integral cannot be taken symbolically, so "SymbolicProcessing" is off.

Actually, I need to compute such integrals thousands of times during an optimization procedure in order to find the best coefficients a[j] in the sum:

dpdA[i_] := NIntegrate[
  Cos[φ] Cos[i φ] Exp[Sum[(-a[j]) Cos[j φ], {j, 11}]], {φ, 0, Pi},
  Method -> {Automatic, "SymbolicProcessing" -> None}]

Is there a way to precondition this integral in order to make integration with high WorkingPrecision faster? Perhaps using Experimental`NumericalFunction?

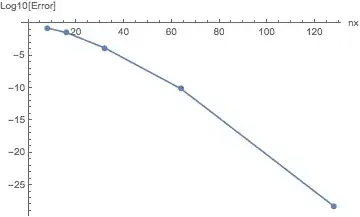

The problem is that when I increase PrecisionGoal to 15, and consequently raise WorkingPrecision above MachinePrecision, performance becomes very poor.
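To make the slowdown concrete, here is a minimal sketch that exposes the two precision settings as arguments so the timings can be compared directly. The name `dpdAHigh` and the particular values 30 and 15 are only illustrative assumptions; the integrand and the `Method` option are the ones from the question.

```mathematica
(* dpdAHigh is a hypothetical helper: the integrand from the question,
   with WorkingPrecision (wp) and PrecisionGoal (pg) passed in explicitly *)
dpdAHigh[i_, wp_, pg_] := NIntegrate[
  Cos[φ] Cos[i*φ] Exp[Sum[-Cos[j*φ], {j, 11}]], {φ, 0, Pi},
  WorkingPrecision -> wp, PrecisionGoal -> pg,
  Method -> {Automatic, "SymbolicProcessing" -> None}]

(* Default settings versus a higher-precision run; each call returns {seconds, value} *)
AbsoluteTiming[dpdAHigh[1, MachinePrecision, Automatic]]
AbsoluteTiming[dpdAHigh[1, 30, 15]]
```

The timings depend on the machine and the Mathematica version, so they are best read as a ratio between the two runs rather than as absolute numbers.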

[…] NIntegrate, performance is usually dictated by the appropriateness or otherwise of the chosen method. Try, for example, `dpdA[i_] := NIntegrate[Cos[φ] Cos[i*φ] Exp[Sum[-Cos[j*φ], {j, 11}]], {φ, 0, Pi}, WorkingPrecision -> $MachinePrecision, MinRecursion -> 3, MaxRecursion -> 5, Method -> {"GlobalAdaptive", Method -> {"GaussKronrodRule", "Points" -> 20}, "SymbolicProcessing" -> False}]`. This works well for small arguments, but for larger arguments the integrand is highly oscillatory so another method could be better. – Oleksandr R. Jul 28 '12 at 00:24

[…] `"LevinRule"` as the `Method` (though it seems to work nicely on your integral when I tried it, and removing `"SymbolicProcessing" -> None` to that effect). I would suggest trying `"ClenshawCurtisOscillatoryRule"` as the `Method`. See if it helps. – J. M.'s missing motivation Jul 28 '12 at 00:40

[…] `u==Cos[\[CurlyPhi]]`, `\[CurlyPhi]==ArcCos[u]`, `du/Sqrt[1-u^2]==d\[CurlyPhi]`. With that transformation you get rid of all the costly trigonometric function calls so `NIntegrate` might give you shorter integration times. – Thies Heidecke Aug 10 '12 at 18:53

[…] `AbsoluteTiming` gives `0.8593750` second for `WorkingPrecision->60, PrecisionGoal->30` and `0.5625000` for `WorkingPrecision->30, PrecisionGoal->15` on my machine. I need to compute such integrals thousands of times with variable coefficients `a[j]` under the summation: `NIntegrate[Cos[φ]*Cos[i*φ]*Exp[Sum[-a[j]*Cos[j*φ],{j,11}]],{φ,0,Pi}]`. – Alexey Popkov Feb 11 '13 at 22:33
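A related idea that nobody in the thread proposes, offered here only as a sketch: the integrand is even and 2π-periodic in φ, so an equally spaced midpoint sum over [0, Pi] usually converges very quickly for it. Under the substitution `u == Cos[φ]` from the comment above, the midpoint nodes become the Gauss-Chebyshev nodes in `u`. The helper `midpointIntegral`, its argument order, and the default precision of 30 digits are all assumptions made for illustration, and the coefficients are passed as a list `a` instead of the indexed `a[j]`.

```mathematica
(* Hypothetical helper, not from the original post.
   i    : integer index in Cos[i φ]
   a    : list of coefficients, a[[j]] playing the role of a[j]
   n    : number of midpoint nodes on [0, Pi]
   prec : working precision of the nodes (assumed default 30 digits) *)
midpointIntegral[i_Integer, a_List, n_Integer, prec_: 30] :=
 Module[{φ, vals},
  (* midpoints of n equal subintervals of [0, Pi], as high-precision numbers *)
  φ = N[(Range[n] - 1/2) Pi/n, prec];
  (* integrand evaluated on the whole node list at once (Cos and Exp are Listable) *)
  vals = Cos[φ] Cos[i φ] Exp[-Sum[a[[j]] Cos[j φ], {j, Length[a]}]];
  (* midpoint rule: subinterval width times the sum of the function values *)
  Pi/n Total[vals]]

(* Usage: rerun with a larger n and check that the digits have stabilized *)
midpointIntegral[1, ConstantArray[1, 11], 200]
midpointIntegral[1, ConstantArray[1, 11], 400]
```

The coefficients should be exact or already of high precision; machine-precision entries in `a` would pull the whole result down to machine precision. No error estimate is built in, so comparing two values of `n` is the only convergence check in this sketch, and the result is worth validating against `NIntegrate` before relying on it inside the optimization loop.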