This question is motivated by teaching: I would like to see a completely elementary proof showing, for example, that for every natural number $k$ we eventually have $2^n>n^k$.

All the proofs I know rely in some way on properties of the logarithm. (I have nothing against logarithms, but some students loathe them.)

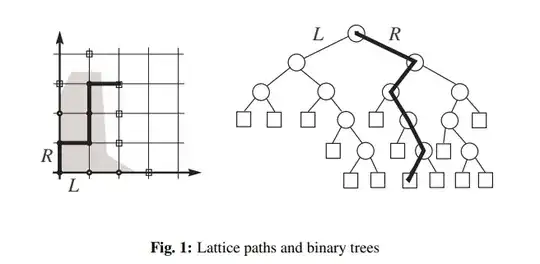

Is there a brilliant "proof from the book" for this inequality (for example, one given by an explicit, easy injection of a set containing $n^k$ elements into, say, the set of subsets of $\lbrace 1,\ldots,n\rbrace$ for $n$ sufficiently large)?

A fairly easy but somewhat computational proof (which therefore leaves me unhappy):

Given $k$, choose $n_0>2^{k+1}$. For $n>n_0$ we have \begin{align*} \frac{(n+1)^k}{2^{n+1}} &=\frac{1}{2}\left(\frac{n^k}{2^n}+\frac{\sum_{j=0}^{k-1}{k\choose j}n^j}{2^n}\right)\\ &\leq \frac{1}{2}\left(\frac{n^k}{2^n}+\frac{2^kn^{k-1}}{2^n}\right)\\ &\leq \frac{n^k}{2^n}\left(\frac{1}{2}+\frac{2^k}{2n_0}\right)\\ &\leq \frac{3}{4}\frac{n^k}{2^n} \end{align*} (using $n^j\leq n^{k-1}$ for $j\leq k-1$, $\sum_j{k\choose j}=2^k$ and $\frac{2^k}{2n_0}<\frac{1}{4}$), showing that the ratio $\frac{n^k}{2^n}$ decays exponentially fast for $n>n_0$.
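As a quick numerical sanity check of the ratio bound (a sketch, not a proof), the snippet below uses exact rational arithmetic via `fractions.Fraction` to confirm, for a few small $k$ and a finite range of $n>2^{k+1}$, that $\frac{(n+1)^k}{2^{n+1}}\leq\frac{3}{4}\cdot\frac{n^k}{2^n}$. The function name `ratio_step_holds` and the tested ranges are illustrative choices.

```python
from fractions import Fraction

def ratio_step_holds(k: int, n: int) -> bool:
    """Check (n+1)^k / 2^(n+1) <= (3/4) * n^k / 2^n exactly, with no rounding."""
    lhs = Fraction((n + 1) ** k, 2 ** (n + 1))
    rhs = Fraction(3, 4) * Fraction(n ** k, 2 ** n)
    return lhs <= rhs

# The argument needs n > n_0 > 2^(k+1); test a finite window just above 2^(k+1).
for k in range(1, 6):
    start = 2 ** (k + 1) + 1
    assert all(ratio_step_holds(k, n) for n in range(start, start + 200))
print("ratio bound holds on the tested ranges")
```

For small $n$ the step fails (e.g. $k=3$, $n=1$), which is why the threshold $n_0$ is needed.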

A perhaps more elementary but slightly sloppy proof is the observation that the number of digits of $2^{2^n}$ (roughly) doubles when $n$ increases by $1$, whilst the number of digits of $(2^n)^k$ grows (roughly) as an arithmetic progression in $n$. (This "proof" therefore uses properties of the logarithm in disguise.)
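To make the digit heuristic concrete, here is a small sketch relying on Python's arbitrary-precision integers, counting decimal digits with `len(str(...))`. With $k=3$ (an arbitrary illustrative choice), the digit counts of $2^{2^n}$ roughly double at each step, while those of $(2^n)^k$ grow by about $k\log_{10}2\approx 0.9$ per step. The helper name `digit_count` is hypothetical.

```python
def digit_count(x: int) -> int:
    """Number of decimal digits of a positive integer."""
    return len(str(x))

k = 3  # illustrative exponent
for n in range(1, 11):
    doubly_exp = digit_count(2 ** (2 ** n))  # roughly doubles as n increases by 1
    poly_in_2n = digit_count((2 ** n) ** k)  # roughly an arithmetic progression in n
    print(n, doubly_exp, poly_in_2n)
```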

Addendum: Fedor Petrov's proof can be rewritten slightly as $$2^{n+k}=\sum_j{n+k\choose j}>{n+k\choose k+1}>n^k\frac{n}{(k+1)!},$$ which shows $$2^n>n^k\frac{n}{2^k(k+1)!}>n^k$$ for $n>2^k(k+1)!$.
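A small exact check of this chain, offered as a sketch: `math.comb` (Python 3.8+) gives ${n+k\choose k+1}$, and multiplying through by $(k+1)!$ keeps everything in integers. The loop confirms, for $1\leq k\leq 4$ and a finite range of $n$, that $2^{n+k}>{n+k\choose k+1}>\frac{n^{k+1}}{(k+1)!}$ and that $2^n>n^k$ beyond the threshold $2^k(k+1)!$. The function name `petrov_chain_holds` is illustrative; the check assumes $k\geq 1$, since for $k=0$ the second inequality is an equality.

```python
from math import comb, factorial

def petrov_chain_holds(n: int, k: int) -> bool:
    """Check 2^(n+k) > C(n+k, k+1) > n^(k+1)/(k+1)! in exact integers (k >= 1)."""
    c = comb(n + k, k + 1)
    # Multiply the right-hand inequality by (k+1)! to avoid fractions.
    return 2 ** (n + k) > c and c * factorial(k + 1) > n ** (k + 1)

for k in range(1, 5):
    threshold = 2 ** k * factorial(k + 1)
    for n in range(1, threshold + 50):
        assert petrov_chain_holds(n, k)
        if n > threshold:
            assert 2 ** n > n ** k
```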

Second addendum: Here is a sort of "injective" proof. The number $n^{k+1}$ counts the sequences $(a_1,a_2,\ldots,a_{k+1})\in \lbrace 1,2,\ldots,n\rbrace^{k+1}$. Write down the binary representations of $a_1,\ldots,a_{k+1}$, pad them with leading zeroes to a common length $l$ (with $l$ the smallest integer such that $n<2^l$, so that $l\leq n$), and concatenate them. This gives the binary representation of an integer $<2^{l(k+1)}\leq 2^{n(k+1)}$ (and nonzero, since every $a_i\geq 1$) which encodes $(a_1,\ldots,a_{k+1})$ uniquely. This shows $$2^{(k+1)n}>n^{k+1}.$$ Replacing $(k+1)n$ by $N$ we get $$2^N>N^k\frac{N}{(k+1)^{k+1}}>N^k$$ for $N$ in $(k+1)\mathbb N$ such that $N>(k+1)^{k+1}$.
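The binary-concatenation encoding can be sketched in Python (3.9 or later, for the type hints) for small $n$ and $k$. Each $a_i$ is padded to width $l$, taken here as `n.bit_length()`, which is exactly the smallest $l$ with $n<2^l$; the padded strings are then concatenated and read as one integer. The check confirms that distinct sequences give distinct integers, all below $2^{l(k+1)}\leq 2^{n(k+1)}$. The names `encode` and `check_injection` are hypothetical.

```python
from itertools import product

def encode(seq: tuple[int, ...], n: int) -> int:
    """Concatenate the binary representations of seq's entries, each padded to width l."""
    l = n.bit_length()  # smallest l with n < 2^l
    bits = "".join(format(a, f"0{l}b") for a in seq)
    return int(bits, 2)

def check_injection(n: int, k: int) -> bool:
    """Verify the encoding is injective on {1..n}^(k+1) and lands below 2^(l(k+1))."""
    l = n.bit_length()
    codes = {encode(seq, n) for seq in product(range(1, n + 1), repeat=k + 1)}
    injective = len(codes) == n ** (k + 1)
    in_range = max(codes) < 2 ** (l * (k + 1)) <= 2 ** (n * (k + 1))
    return injective and in_range

for n in range(1, 9):
    for k in range(0, 3):
        assert check_injection(n, k)
```

For instance, with $n=3$ (so $l=2$) the sequence $(1,2)$ becomes `01` followed by `10`, i.e. the integer $6$.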

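For the general case, write an arbitrary integer as $N-a$ with $N$ in $(k+1)\mathbb N$ and $a$ in $\lbrace 0,1,\ldots,k\rbrace$. Then $$2^{N-a}>N^k\frac{N}{2^a(k+1)^{k+1}}\geq(N-a)^k\frac{N-a}{2^a(k+1)^{k+1}}>(N-a)^k$$ whenever $N-a>2^k(k+1)^{k+1}$ (the middle inequality is an equality when $a=0$, and the last one uses $2^a\leq 2^k$).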
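For small $k$ the general case can be spot-checked in exact integer arithmetic, as a sketch: each $M>2^k(k+1)^{k+1}$ is written as $M=N-a$, with $N$ taken (as an illustrative choice) to be the smallest multiple of $k+1$ that is $\geq M$, so $a\in\lbrace 0,\ldots,k\rbrace$. The first link of the chain, cleared of denominators, reads $2^N(k+1)^{k+1}>N^{k+1}$, and the conclusion $2^M>M^k$ is tested directly. The name `general_case_holds` and the tested ranges are illustrative assumptions.

```python
def general_case_holds(M: int, k: int) -> bool:
    """Check the chain for M = N - a, N the least multiple of k+1 with N >= M."""
    N = -(-M // (k + 1)) * (k + 1)  # round M up to a multiple of k+1
    a = N - M
    assert 0 <= a <= k
    # First link 2^(N-a) > N^(k+1) / (2^a (k+1)^(k+1)), multiplied by 2^a (k+1)^(k+1).
    first = 2 ** N * (k + 1) ** (k + 1) > N ** (k + 1)
    return first and 2 ** M > M ** k

for k in range(1, 4):
    threshold = 2 ** k * (k + 1) ** (k + 1)
    assert all(general_case_holds(M, k) for M in range(threshold + 1, threshold + 200))
```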

Variation on the last proof: One can replace binary representations by characteristic functions: $a_i$ is encoded by the $a_i$-th standard basis vector of $\mathbb R^n$. Concatenating the coefficients of these $k+1$ basis vectors gives the characteristic function of a $(k+1)$-element subset of $\lbrace 1,\ldots,(k+1)n\rbrace$, and distinct sequences give distinct subsets. Hence $n^{k+1}\leq{(k+1)n\choose k+1}<2^{(k+1)n}$.
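The characteristic-function variation can be sketched the same way (Python 3.9 or later): the $1$ in the $i$-th block of $n$ coefficients sits at position $(i-1)n+a_i$ of $\lbrace 1,\ldots,(k+1)n\rbrace$. The check below confirms, for small $n$ and $k$, that this is an injection into $(k+1)$-element subsets and that $n^{k+1}\leq{(k+1)n\choose k+1}<2^{(k+1)n}$. The names `to_subset` and `check_subset_injection` are hypothetical.

```python
from itertools import product
from math import comb

def to_subset(seq: tuple[int, ...], n: int) -> frozenset[int]:
    """Block i (1-based) covers positions (i-1)n+1..in; a_i marks one of them."""
    return frozenset((i - 1) * n + a for i, a in enumerate(seq, start=1))

def check_subset_injection(n: int, k: int) -> bool:
    """Verify injectivity into (k+1)-subsets of {1..(k+1)n} and the counting bound."""
    subsets = {to_subset(seq, n) for seq in product(range(1, n + 1), repeat=k + 1)}
    injective = len(subsets) == n ** (k + 1)
    sizes_ok = all(len(s) == k + 1 for s in subsets)
    in_range = all(1 <= x <= (k + 1) * n for s in subsets for x in s)
    bound_ok = n ** (k + 1) <= comb((k + 1) * n, k + 1) < 2 ** ((k + 1) * n)
    return injective and sizes_ok and in_range and bound_ok

for n in range(1, 7):
    for k in range(0, 3):
        assert check_subset_injection(n, k)
```

For example, with $n=3$ the sequence $(2,1)$ is sent to the subset $\lbrace 2,4\rbrace$ of $\lbrace 1,\ldots,6\rbrace$.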