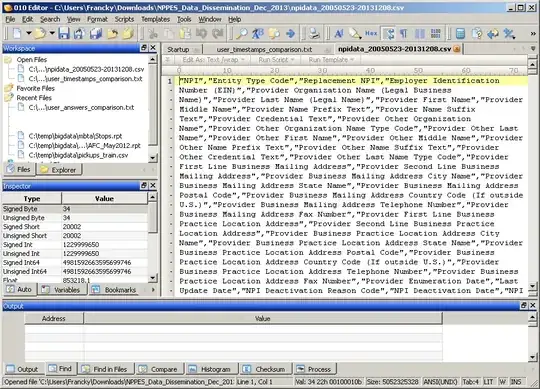

I recently had to parse the 6 GB NPPES file, and here is how I did it:

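```console
$ wget http://download.cms.gov/nppes/NPPES_Data_Dissemination_July_2017.zip
$ unzip NPPES_Data_Dissemination_July_2017.zip
$ split -l 1000000 npidata_20050523-20170709.csv
$ # add the header row (first line of xaa) to xab, xac, ... and save every chunk as xaa.csv, xab.csv, ...
$ python parse.py
$ # load the resulting out/*.tab files into the database
```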
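The "add headers" step is not spelled out. `split -l` leaves the header line only in the first chunk (`xaa`), so the other chunks need it prepended before pandas can select columns by name. Below is a minimal Python sketch, assuming the chunks sit in one directory and should end up named `<chunk>.csv`, as parse.py expects. The function name `add_headers` is hypothetical.

```python
import shutil
from pathlib import Path


def add_headers(chunk_names, suffix=".csv"):
    """Copy each split chunk to <name><suffix>, prepending the header row.

    Assumes the chunks come from `split -l` run on one CSV file, so only the
    first chunk in name order (e.g. xaa) already starts with the header line.
    Returns the paths of the files written, in chunk order.
    """
    chunks = sorted(Path(name) for name in chunk_names)
    if not chunks:
        return []
    with chunks[0].open("rb") as first:
        header = first.readline()  # header row, including its line ending
    written = []
    for i, chunk in enumerate(chunks):
        target = chunk.with_name(chunk.name + suffix)
        with chunk.open("rb") as src, target.open("wb") as dst:
            if i > 0:
                dst.write(header)  # later chunks start mid-file, without the header
            shutil.copyfileobj(src, dst)  # streams the chunk instead of loading it whole
        written.append(str(target))
    return written
```

From the directory where `split` ran, something like `add_headers(glob.glob("x??"))` (after `import glob`) would write `xaa.csv`, `xab.csv`, ... for parse.py. Note that the pattern `x??` also matches any other three-character file name starting with `x`.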

Here is `parse.py`, which extracts a subset of the columns:

```python
import csv
import os

import pandas as pd

# Chunks produced by `split`, each with the header row added
files = [
    'xaa.csv',
    'xab.csv',
    'xac.csv',
    'xad.csv',
    'xae.csv',
    'xaf.csv']

# Only these columns are parsed; all other columns are skipped
usecols = [
    "NPI",
    "Provider Organization Name (Legal Business Name)",
    "Provider Business Mailing Address City Name",
    "Provider Business Mailing Address State Name",
    "Provider Business Mailing Address Postal Code",
    "Provider Business Mailing Address Country Code (If outside U.S.)"]

# to_csv() fails if the output directory does not exist
os.makedirs('out', exist_ok=True)

for f in files:
    print("Parsing file: {}".format(f))
    # dtype='object' keeps every value as a string (e.g. leading zeros in ZIP codes)
    df = pd.read_csv(f,
                     engine='c',
                     dtype='object',
                     skipinitialspace=True,
                     quoting=csv.QUOTE_ALL,
                     usecols=usecols,
                     nrows=None)
    # Short column names for loading into the database
    df.rename(columns={
        'NPI': 'npi',
        'Provider Organization Name (Legal Business Name)': 'business',
        'Provider Business Mailing Address City Name': 'adr_city',
        'Provider Business Mailing Address State Name': 'adr_state',
        'Provider Business Mailing Address Postal Code': 'adr_zip',
        'Provider Business Mailing Address Country Code (If outside U.S.)': 'adr_country',  # noqa
    }, inplace=True)
    # Tab-separated output, e.g. out/xaa.csv.tab
    out = os.path.join('out', "{}.tab".format(f))
    df.to_csv(out, sep='\t', index=False)
```
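Splitting the file and re-adding headers can also be avoided: when pandas' `read_csv` is given a `chunksize`, it returns an iterator of DataFrames, so the whole file is streamed and its header is parsed only once. This is a swapped-in technique, not the approach used above. The sketch below assumes the same columns and short names as parse.py; the function name `parse_in_chunks` and the `part_NNN.tab` output names are made up for illustration.

```python
import csv
import os

import pandas as pd

# Same column subset and short names as parse.py
COLUMNS = {
    "NPI": "npi",
    "Provider Organization Name (Legal Business Name)": "business",
    "Provider Business Mailing Address City Name": "adr_city",
    "Provider Business Mailing Address State Name": "adr_state",
    "Provider Business Mailing Address Postal Code": "adr_zip",
    "Provider Business Mailing Address Country Code (If outside U.S.)": "adr_country",  # noqa
}


def parse_in_chunks(src, out_dir="out", chunk_rows=1_000_000):
    """Read `src` in chunks of `chunk_rows` rows; write one .tab file per chunk."""
    os.makedirs(out_dir, exist_ok=True)
    reader = pd.read_csv(src,
                         dtype="object",
                         usecols=list(COLUMNS),
                         quoting=csv.QUOTE_ALL,
                         skipinitialspace=True,
                         chunksize=chunk_rows)
    written = []
    for i, chunk in enumerate(reader):
        # Each chunk is an ordinary DataFrame holding at most chunk_rows rows
        out = os.path.join(out_dir, "part_{:03d}.tab".format(i))
        chunk.rename(columns=COLUMNS).to_csv(out, sep="\t", index=False)
        written.append(out)
    return written
```

Calling `parse_in_chunks("npidata_20050523-20170709.csv")` would cover the split, header, and parse steps in one pass. The trade-off is that a single process reads the whole file sequentially, whereas separate split files can be parsed or retried independently.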

`xsv select "Healthcare Provider Taxonomy Code_1" inputfile.csv` will extract a column of data, much faster than the excellent csvkit. (I don't have enough reputation yet to answer below) – Liam Sep 10 '21 at 15:49
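For readers without xsv, the same single-column extraction can be sketched with Python's standard `csv` module. It reads the file one row at a time, so memory use stays small, but expect it to be much slower than xsv. `select_column` is a hypothetical helper, not part of any library.

```python
import csv
import sys


def select_column(src, column, dst=None):
    """Write the header `column` and its values from CSV file `src` to `dst`.

    Rows are read one at a time with csv.reader, so memory use does not grow
    with file size. Raises ValueError if `column` is not in the header row.
    """
    dst = sys.stdout if dst is None else dst
    with open(src, newline="") as f:
        reader = csv.reader(f)
        header = next(reader)
        idx = header.index(column)
        writer = csv.writer(dst, lineterminator="\n")
        writer.writerow([column])
        for row in reader:
            writer.writerow([row[idx]])
```

For example, `select_column("inputfile.csv", "Healthcare Provider Taxonomy Code_1")` prints that column to standard output, re-quoting only values that contain commas or quotes.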