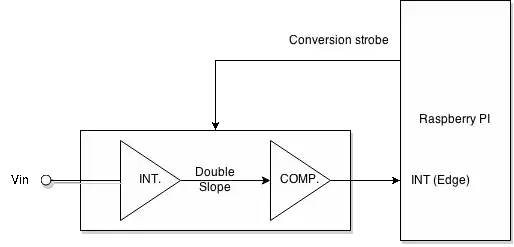

I am planning to set up a poor-man's ADC using a Raspberry Pi and an operational amplifier wired as a double-slope (dual-slope) integrating ADC, to sample extremely low-frequency signals (< 10 Hz). I therefore thought I could use a Linux performance counter to time the integration process.
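For reference, an ideal dual-slope converter integrates the input for a fixed time, then de-integrates against a reference of opposite polarity until the comparator trips. The input is then V_in = V_ref · t_de / t_int. A minimal sketch of that relation follows. It assumes both times are measured in the same counter ticks, and the function name is hypothetical, not something from the post:

```python
def dual_slope_voltage(n_integrate: int, n_deintegrate: int, v_ref: float) -> float:
    """Ideal dual-slope conversion from tick counts (hypothetical helper).

    n_integrate: ticks spent integrating the input (fixed by design)
    n_deintegrate: ticks until the comparator edge during the reference slope
    v_ref: magnitude of the reference voltage (assumed known and stable)
    """
    if n_integrate <= 0:
        raise ValueError("n_integrate must be positive")
    # R, C and the tick frequency cancel out: only the ratio of the two times matters.
    return v_ref * n_deintegrate / n_integrate


# Assumed example: 100 ms integration, 25 ms de-integration, 1 us ticks, 2.5 V reference.
print(dual_slope_voltage(100_000, 25_000, 2.5))  # 0.625
```

Because R, C and the clock frequency cancel, cheap components are acceptable as long as they stay stable over one conversion. The resolution is set by how many ticks fit in the integration window. Here that is 100 000 ticks, roughly 17 bits.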

The devil here is latency. Since the Pi can also handle edge-triggered interrupts at the kernel level, I thought of signalling the end of the integration process with an edge from the comparator output.
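To put numbers on the latency concern, start from the ideal dual-slope relation V_in = V_ref · t_de / t_int. Any delay between the comparator edge and the moment the counter is read lengthens the measured t_de, so the voltage error is V_ref · latency / t_int. A constant latency shifts every reading by the same amount and can be calibrated out. The varying part (jitter) is what limits the result. The sketch below works through that arithmetic. The 20 µs figure is an assumed value for illustration, not a measurement on the Pi:

```python
def latency_error_volts(latency_s: float, t_integrate_s: float, v_ref: float) -> float:
    """Voltage error from reading the counter `latency_s` after the comparator edge.

    Assumes an ideal dual-slope converter where V_in = v_ref * t_de / t_integrate.
    """
    return v_ref * latency_s / t_integrate_s


# Assumed figures: 20 us interrupt jitter, 100 ms integration, 2.5 V reference.
err = latency_error_volts(20e-6, 0.1, 2.5)
print(f"{err * 1e3:.2f} mV")  # 0.50 mV
```

Doubling t_int halves this error, at the cost of a lower conversion rate.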

The performance counter would only need to be initialized when an ADC conversion is requested, and read as soon as the interrupt fires. I would probably extend the kernel module to implement the poor-man's ADC and expose the conversion result through sysfs, for instance.
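The flow described above can be modelled in user space before writing any kernel code: start the counter on a conversion request, then read it when the comparator edge arrives. The sketch below makes a few assumptions. `time.perf_counter_ns()` stands in for the performance counter, and a plain method call replaces the interrupt handler. The class and method names are hypothetical:

```python
import time
from typing import Callable, Optional


class ConversionTimer:
    """Hypothetical model of the start/edge timing of one conversion."""

    def __init__(self, clock: Callable[[], int] = time.perf_counter_ns) -> None:
        self._clock = clock  # returns a monotonic tick count
        self._start: Optional[int] = None
        self.last_ticks: Optional[int] = None  # value a sysfs attribute might expose

    def start_conversion(self) -> None:
        # Called when a conversion is requested: latch the counter value.
        self._start = self._clock()

    def on_comparator_edge(self) -> int:
        # Stand-in for the edge-triggered interrupt handler: read the counter
        # first, before any other work, then compute the elapsed ticks.
        now = self._clock()
        if self._start is None:
            raise RuntimeError("edge received with no conversion in progress")
        self.last_ticks = now - self._start
        self._start = None
        return self.last_ticks


# Usage with a fake clock so the result is deterministic.
fake_ticks = iter([1_000, 126_000])
timer = ConversionTimer(clock=lambda: next(fake_ticks))
timer.start_conversion()
print(timer.on_comparator_edge())  # 125000
```

Reading the clock as the very first step of the handler keeps the software share of the latency small and as constant as possible. In a kernel module, the same two steps would presumably sit in the conversion-request path and in the IRQ handler.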

Has anyone come up with a similar solution? Does it make sense at all to do it that way?

`n = (counter - a) / b`, with `a` being the averaged pulse count of the first slope and `b` a normalizing ratio. The difference is just that the performance counter is a high-resolution counter (down to the microsecond). – May 02 '15 at 11:02
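The normalization in that comment can be sketched as follows. The sketch assumes `a` is estimated as the mean of several first-slope counts, and that `b` is a calibration ratio determined separately. The function names and sample numbers are hypothetical:

```python
from statistics import fmean
from typing import Sequence


def first_slope_offset(first_slope_counts: Sequence[int]) -> float:
    """a: averaged pulse count of the first (integration) slope."""
    return fmean(first_slope_counts)


def normalize(counter: int, a: float, b: float) -> float:
    """n = (counter - a) / b, with counter read when the comparator edge fires."""
    if b == 0:
        raise ValueError("normalizing ratio b must be non-zero")
    return (counter - a) / b


a = first_slope_offset([100_000, 100_002, 99_998])
print(a)                          # 100000.0
print(normalize(125_000, a, 10))  # 2500.0
```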