I want to know if there is an analytical way to approximate the distribution of a random variable defined by

$$Y_n:=\left|\sum_{k=1}^n e^{i X_k}\right|$$

where the $X_k\sim U[-\pi,\pi]$ are i.i.d. I ran some computer simulations, but I'm trying to see whether there is some analytic machinery to, at least, approximate the distribution of $Y_n$.

I know how to write $E[Y_n]$ analytically for a given $n$ (indeed I have the estimate $E[Y_n]\le\sqrt n$ via Jensen's inequality, because $E[Y_n^2]=n$), and I can write $F_{Y_2}$ explicitly; however, for $n>2$ I don't know how to proceed (or how to approximate).
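For reference, a short derivation of the second moment and of the bound, using only the independence of the $X_k$ and the fact that $E[e^{iX_k}]=0$ for a uniform phase. Expanding the squared modulus, the diagonal terms contribute $n$ and the cross terms vanish:

$$E[Y_n^2]=\sum_{j=1}^n\sum_{k=1}^n E\left[e^{i(X_j-X_k)}\right]=n+\sum_{j\neq k}E\left[e^{iX_j}\right]E\left[e^{-iX_k}\right]=n$$

Since the square root is concave, Jensen's inequality then gives

$$E[Y_n]=E\left[\sqrt{Y_n^2}\right]\le\sqrt{E[Y_n^2]}=\sqrt n$$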

Also, I know how to explicitly write the iterated integrals for computing the distribution of $\sum_{k=1}^n e^{i X_k}$; however, I don't know any approach to compute the distribution of its absolute value.

Any help will be appreciated, as will a pointer to some paper or book where I can dig into similar questions.

EDIT: also note that, by rotational invariance of the uniform phase, $Y_n$ has the same distribution as $|Y_{n-1}+e^{i X_n}|$, so it seems possible to approximate the distribution of $Y_n$ using some kind of recursion.
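A minimal sketch of what such a recursion could look like when only the modulus is tracked. It assumes that the new jump makes a uniform angle $\theta$ with the current direction, independent of the current distance $r$, so that the next distance is $\sqrt{r^2+1+2r\cos\theta}$ by the law of cosines. The names `step_modulus` and `sample_modulus` are made up for this illustration, and this only samples from the recursion; it does not give the distribution in closed form.

```julia
using Random, Statistics

# One step of the recursion on the modulus alone: from distance r, add a unit
# jump at angle θ relative to the current direction (law of cosines).
# The max(…, 0.0) guards against a tiny negative value from rounding.
step_modulus(r::Real, θ::Real) = sqrt(max(r^2 + 1 + 2r * cos(θ), 0.0))

# Sample Y_n by iterating the step, starting from Y_1 = 1.
function sample_modulus(rng::AbstractRNG, n::Int)
    r = 1.0
    for _ in 2:n
        r = step_modulus(r, 2π * rand(rng))
    end
    return r
end

rng = MersenneTwister(1)
ys = [sample_modulus(rng, 5) for _ in 1:200_000]

# Sanity check against the known second moment E[Y_n^2] = n (here n = 5).
println("sample mean of Y_5^2: ", mean(abs2, ys))
```

The same one-step map could in principle be applied to a discretised density of $Y_{n-1}$ instead of to samples, which is one way to read "some kind of recursion".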

Also it is easy to check that

$$Y_n=\sqrt{n+2\sum_{1\le j< k\le n}\cos(X_j-X_k)}$$

However, at first glance this last expression doesn't seem useful for an analytic approximation of its distribution.
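A quick numerical check of the pairwise-cosine expression against the direct definition, as a sketch only. The function names `modulus_direct` and `modulus_pairwise` are hypothetical, and the seed and the number of phases are arbitrary choices.

```julia
using Random

# Direct definition: modulus of the sum of unit phasors e^{i x_k}.
modulus_direct(x::AbstractVector{<:Real}) = abs(sum(cis, x))

# Pairwise-cosine form: sqrt(n + 2 * sum over j < k of cos(x_j - x_k)).
function modulus_pairwise(x::AbstractVector{<:Real})
    n = length(x)
    s = 0.0
    for k in 2:n, j in 1:k-1
        s += cos(x[j] - x[k])
    end
    return sqrt(max(n + 2s, 0.0))   # clamp guards against tiny negative rounding
end

rng = MersenneTwister(2)
x = 2π .* rand(rng, 6) .- π          # six phases, uniform on [-π, π]
println(modulus_direct(x), "  ", modulus_pairwise(x))
```

The two printed values should agree up to floating-point error.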

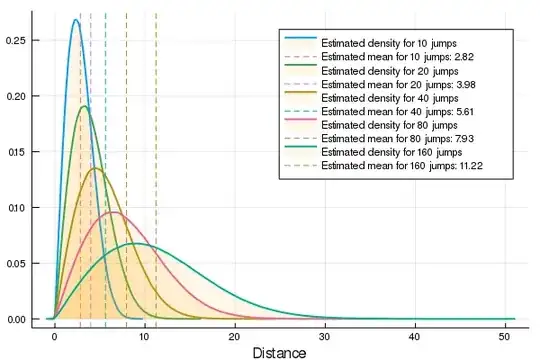

EDIT 2: adding some histograms that approximate the densities

For $n\le 5$ the estimated densities look strange (not bell-shaped); however, for $n=2$ the estimated density approaches the theoretical density, that is

$$f_{Y_2}(x)=\frac1{\pi\sqrt{1-(x/2)^2}}\chi_{[0,2]}(x)$$

(Note the vertical asymptote at $x=2$ in $f_{Y_2}$; the empirical approximation tends to it anyway.) If someone is interested, this is the Julia code that I used for the simulations:

```julia
# Sum of random jumps in the plane
function rsum(n::Int)
    p = 1.0
    for j in 2:n
        p += exp(2pi * im * rand())
    end
    return p
end

# Distance after n random jumps
function rd(n::Int)
    abs(rsum(n))
end

# Data array to build an histogram
function sim(n::Int, m::Int = 22)
    datos = zeros(2^m)
    for i in 1:2^m
        datos[i] = rd(n)
    end
    return datos
end

# Plotting densities
using StatsPlots, Statistics

function dd(n::Int, m::Int = 22)
    x = sim(n, m)
    density!(x, w = 2,
        xlabel = "Distance",
        label = "Estimated density for $n jumps",
        fill = (0, 0.1, :orange))
    vline!([mean(x)],
        label = "Estimated mean for $n jumps: $(round(mean(x), digits = 2))",
        line = :dash)
end
```
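As a cross-check for $n=2$ that does not depend on kernel smoothing, one can compare the empirical CDF with the CDF obtained by integrating the density $f_{Y_2}$ quoted above, namely $F_{Y_2}(x)=\frac{2}{\pi}\arcsin(x/2)$ on $[0,2]$. This is a minimal sketch; the helper names `cdf_y2` and `ecdf_at`, the seed, and the sample size are choices made for this illustration.

```julia
using Random, Statistics

# CDF obtained by integrating f_{Y_2} over [0, x]; clamped outside [0, 2].
cdf_y2(x::Real) = (2 / π) * asin(clamp(x / 2, 0.0, 1.0))

# Empirical CDF of a sample evaluated at x.
ecdf_at(sample::AbstractVector{<:Real}, x::Real) = mean(<=(x), sample)

rng = MersenneTwister(3)
# Y_2 = |1 + e^{iθ}| with θ uniform; the first jump is fixed at 1, as in rsum.
ys = [abs(1 + cis(2π * rand(rng))) for _ in 1:200_000]

for x in (0.5, 1.0, 1.5, 1.9)
    println("x = $x: empirical ", round(ecdf_at(ys, x), digits = 4),
            "  theoretical ", round(cdf_y2(x), digits = 4))
end
```

Comparing CDFs avoids the boundary artefacts that a kernel density estimate has near the asymptote at $x=2$.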