A test file in the specified format can be downloaded from here.

Let's read a few lines from this file:

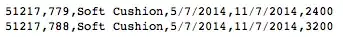

(recLines = ReadList[csvFileName, Record, 3]) // TableForm

SalesOrderID,ProductID,ProductName,OrderDate,ShipDate,Revenue

51217,779,Soft Cushion,5/7/2014,11/7/2014,"$2,400"

51217,788,Soft Cushion,5/7/2014,11/7/2014,"$2,400"

recLines[[2]] // FullForm

"51217,779,Soft Cushion,5/7/2014,11/7/2014,\"$2,400\""

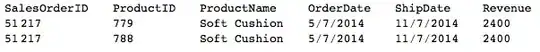

Had I used Import, I would have got:

recData = Import[csvFileName];

recData[[1 ;; 3]] // TableForm

SalesOrderID ProductID ProductName OrderDate ShipDate Revenue

51217 779 Soft Cushion 5/7/2014 11/7/2014 2400

51217 788 Soft Cushion 5/7/2014 11/7/2014 2400

Head /@ recData[[3]]

{Integer, Integer, String, String, String, Integer}

This is exactly the output I would like to get, but instead of using Import I want to use ReadList.

I have read various posts related to ReadList, and this is the closest I have found to an answer that uses ReadList:

rl = ReadList[csvFileName, Word, WordSeparators->{","}, RecordSeparators->{"\n"}, RecordLists->True]

rl[[1 ;; 3]] // TableForm

SalesOrderID ProductID ProductName OrderDate ShipDate Revenue

51217 779 Soft Cushion 5/7/2014 11/7/2014 "$2 400"

51217 788 Soft Cushion 5/7/2014 11/7/2014 "$2 400"

rl[[3]] // FullForm

List["51217","788","Soft Cushion","5/7/2014","11/7/2014","\"$2","400\""]

Head /@ rl[[3]]

{String, String, String, String, String, String, String}

Could you please continue this to a full answer, or perhaps share a better solution with ReadList? For example, is it possible to read and transform at the same time with ReadList, by specifying the header, i.e. the types of the record items, and get exactly the same result as Import?

I also have this challenge question for the experts of the language:

ReadList is a memory-efficient and fast method of reading and parsing CSV files. But it cannot automatically detect the data types of the record items, something that Import does AUTOMAGICALLY. Wouldn't it be possible to somehow implement a ReadList that can also detect the header format?
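To make the idea of type detection concrete, here is a rough sketch of guessing a column's type from the text of one field. The helper name `guessType` and the literal `sampleRow` are hypothetical and not part of ReadList or Import. The sketch assumes the fields have already been split correctly and that the quotes and currency formatting have been stripped.

```mathematica
(* Hypothetical helper: guess a field's type from its text alone *)
guessType[s_String] := Which[
   StringMatchQ[s, DigitCharacter ..], Integer,  (* digits only *)
   StringMatchQ[s, NumberString], Real,          (* any other numeric string *)
   True, String]                                 (* everything else stays text *)

(* Fields of the first data row, assumed to be already split and cleaned *)
sampleRow = {"51217", "779", "Soft Cushion", "5/7/2014", "11/7/2014", "2400"};

guessType /@ sampleRow
(* {Integer, Integer, String, String, String, Integer} *)
```

Guessing from a single row is fragile, since a later row may contain a value that does not fit, so this is only a starting point.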

If not, suppose we do know the format of the records, and suppose it does not change. There are all these cryptic types and options of ReadList to assist you in parsing the data items correctly. Can somebody explain, for the file I specified, how I can use these types and options to read lists the same way the Import function does?
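For the fixed-format case, one possible direction is to let ReadList return whole lines and do the splitting and conversion afterwards. The following is only a sketch under stated assumptions, not a definitive solution. The helpers `splitCSVLine`, `toInt` and `parseCSVLine` and the `converters` list are hypothetical names. The column layout is taken from the sample rows above. The splitter assumes there are no empty fields and no escaped quotes inside quoted fields.

```mathematica
(* Hypothetical helper: split one CSV line on commas that lie outside
   double quotes. Assumes no empty fields and no escaped quotes. *)
splitCSVLine[line_String] := StringCases[line,
   {"\"" ~~ q : (Except["\""] ...) ~~ "\"" :> q,  (* quoted field, quotes dropped *)
    u : (Except[","] ..) :> u}]                   (* plain field *)

(* Strip the currency sign and thousands separator, then read the digits *)
toInt[s_String] := FromDigits[StringDelete[s, {"$", ","}]]

(* Assumed column layout: two integer IDs, three strings, integer revenue *)
converters = {toInt, toInt, Identity, Identity, Identity, toInt};

parseCSVLine[line_String] :=
  MapThread[#1[#2] &, {converters, splitCSVLine[line]}]

lines = ReadList[csvFileName, Record];        (* one string per line *)
parsed = Join[{splitCSVLine[First[lines]]},   (* header row stays as strings *)
              parseCSVLine /@ Rest[lines]];

Head /@ parsed[[3]]  (* compare with the heads of the Import result *)
```

This does the conversion in a second pass rather than inside ReadList itself, so it does not answer whether the types and options of ReadList alone can handle the quoted comma.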

… ReadList instead of Import? – SquareOne Feb 02 '16 at 12:29

… ReadList with your CSV file in the most efficient way, the entries in your file should be formatted in a way that is friendlier for Mathematica. Do you have a way to modify your CSV file to add some formatting? – SquareOne Feb 02 '16 at 12:58

… ReadList cannot detect the formats, you have to specify them. OK, I'll try an answer now. – SquareOne Feb 02 '16 at 13:57

… ReadList, and I have understood that that's probably meaningful for big files. Nevertheless, the OP of this question is looking for a solution with ReadList for the same reasons as you. I'm afraid that the problem in fact is that there is no solution with ReadList (or maybe only by preprocessing the file as SquareOne suggests) – andre314 Feb 02 '16 at 14:11

… ReadList as you have it, then applied a series of transformations to turn elements like {51217,779,Soft Cushion,5/7/2014,11/7/2014,"$2,400"} into elements like {51217, 779, "Soft Cushion", "5/7/2014", "11/7/2014", 2400}? You want it to be more flexible and do it automatically? – Jason B. Feb 02 '16 at 15:43

… ReadList that directly gives {51217,779,Soft Cushion,5/7/2014,11/7/2014,"$2,400"}, that is to say, that has solved the problem of the comma. Do you really have something? – andre314 Feb 02 '16 at 15:52