I am looking to perform a $\chi^2$ fit to more than one data set in mathematica, I just wondered how one would set this up?

Naively, if I just do the following,

Needs["ErrorBarPlots`"]

MasData1 = {{{89, 6.7}, ErrorBar[1.272]}, {{112, 7.9}, ErrorBar[1.220]}, {{141, 9.3}, ErrorBar[1.697]}}

MasData2 = {{{83.9, 4.04}, ErrorBar[0.7754]}, {{114.1, 5.29}, ErrorBar[1.086]}, {{144.2, 6.1}, ErrorBar[1.681]}}

MasData3 = {{{62, 16.6}, ErrorBar[2.6172]}, {{85, 20.7}, ErrorBar[3.0809]}, {{108, 21.9}, ErrorBar[3.0647]}, {{135, 25.8}, ErrorBar[3.9115]}, {{183, 33.2}, ErrorBar[5.993]}, {{83.9, 14.5}, ErrorBar[2.772]}, {{114.1, 24.7}, ErrorBar[4.5875]}, {{144.2, 24.1}, ErrorBar[6.5756]}}

MasData4 = {{{53.3, 25.1}, ErrorBar[3.5489]}, {{83.9, 30}, ErrorBar[4.309]}, {{114.1, 41.5}, ErrorBar[6.1404]}, {{144.2, 45}, ErrorBar[9.6243]}, {{57, 24.4}, ErrorBar[3.6056]}, {{80, 36.7}, ErrorBar[7.9925]}, {{101, 43}, ErrorBar[6.6138]}, {{128, 48.8}, ErrorBar[9.1001]}, {{180, 61.1}, ErrorBar[10.5575]}}

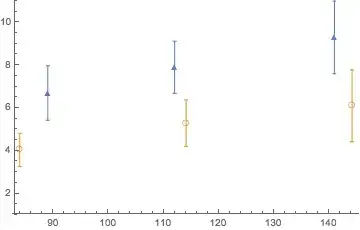

ErrorListPlot[{MasData1, MasData2}, PlotRange -> {1, 11}, PlotStyle -> Thickness[0.003], PlotMarkers -> {"\[FilledUpTriangle]", "\[EmptyCircle]"}

it would plot the following graph

Given some ansatz function $F$ depending on some parameters $a,b$ to describe both sets of data, i.e $F = F(a,b)$ I want to do a $\chi^2$ fit that will take both sets of data into account to get the best fit values of the parameters.

What I have so far basically assumes I want the ansatz function to describe all six points so instead of wanting to achieve two curves through the points in each data set it gives me a ridiculously large chi^2 by attempting to put a single curve through all six points.

So how to set the minimisation routine up such that it takes both data sets into account to determine best fit parameters $a,b$ but also so that it is not assuming I want a single curve through all six points?

Is my question clear? Thanks!

Edit: My fitting function is

f1[x_, y_] := NN x^(-a - b*Log[y/0.45])

where $x,y$ are arguments and $NN,a,b$ are the parameters I wish to find the best fit values for. My attempt at $\chi^2$ is

chisq1 = Sum[((MasData[[k]][[1]][[2]] - f1[MasData[[k]][[1]][[1]],y])/MasData[[k]][[2]][[1]])^2, {k, 1, 3}]

I put all six data points in MasData before but now have split the points into MasData1 and MasData2 as shown above. I'm not sure how to combine them into a common $\chi^2$ routine.

Thanks!

Edit:

Given @JimBaldwin's answer, I have attempted to write out the analysis with an improved model ansatz depending on more than one variable per data set (e.g in case above, there was only $y$, here have $x$ and $y$ hardwired parameters)

Needs["ErrorBarPlots`"]

MasData1 = {{{89, 6.7}, ErrorBar[1.272]}, {{112, 7.9},ErrorBar[1.221]}, {{141, 9.3}, ErrorBar[1.697]}};

MasData2 = {{{83.9, 4.04}, ErrorBar[0.7754]}, {{114.1, 5.29},ErrorBar[1.086]}, {{144.2, 6.1}, ErrorBar[1.681]}};

MasData3 = {{{62, 16.6}, ErrorBar[2.6172]}, {{85, 20.7},ErrorBar[3.0809]}, {{108, 21.9}, ErrorBar[3.0647]}, {{135, 25.8},ErrorBar[3.9115]}, {{183, 33.2}, ErrorBar[5.993]}, {{83.9, 14.5},ErrorBar[2.772]}, {{114.1, 24.7}, ErrorBar[4.5875]}, {{144.2, 24.1},ErrorBar[6.5756]}};

MasData4 = {{{53.3, 25.1}, ErrorBar[3.5489]}, {{83.9, 30},ErrorBar[4.2095]}, {{114.1, 41.5},ErrorBar[6.1404]}, {{144.2, 45}, ErrorBar[9.6243]}, {{57, 27.4}, ErrorBar[3.6056]}, {{80, 36.7}, ErrorBar[7.9925]}, {{101, 43}, ErrorBar[6.6138]}, {{128, 48.8}, ErrorBar[9.1001]}, {{180, 61.1},ErrorBar[10.5575]}};

MasData5 = {{{44.8, 47.5}, ErrorBar[4.0]}, {{54.8, 50.1},ErrorBar[4.2]}, {{64.8, 61.7}, ErrorBar[5.1]}, {{74.8, 64.8},ErrorBar[5.5]}, {{84.9, 75}, ErrorBar[6.2]}, {{94.9, 81.2},ErrorBar[6.7]}, {{104.9, 85.3}, ErrorBar[7.1]}, {{119.5, 94.5},ErrorBar[7.5]}, {{144.1, 101.5}, ErrorBar[8.3]}, {{144.9, 101.9},ErrorBar[10.9]}, {{162.5, 117.8}, ErrorBar[12.8]}, {{177.3, 130.2},ErrorBar[13.4]}, {{194.8, 147.7}, ErrorBar[17.1]}, {{219.6, 137.4},ErrorBar[20.1]}, {{244.8, 176.6},ErrorBar[20.3]}, {{267.2, 178.7},ErrorBar[21.1]}, {{292.3, 200.4}, ErrorBar[29.1]}, {{60, 55.8},ErrorBar[4.838]}, {{80, 66.6}, ErrorBar[7.280]}, {{100, 73.4},ErrorBar[6.426]}, {{120, 86.7}, ErrorBar[7.245]}, {{140, 104},ErrorBar[12.083]}, {{160, 110}, ErrorBar[16.279]}, {{42.5, 43.8},ErrorBar[3.482]}, {{55, 57.2}, ErrorBar[3.980]}, {{65, 62.5},ErrorBar[4.614]}, {{75, 68.9}, ErrorBar[5.197]}, {{85, 72.1},ErrorBar[5.523]}, {{100, 81.9}, ErrorBar[5.368]}, {{117.5, 95.7},ErrorBar[6.277]}, {{132.5, 103.9}, ErrorBar[6.912]}, {{155, 115},ErrorBar[7.920]}, {{185, 129.1}, ErrorBar[9.192]}, {{215, 141.7},ErrorBar[10.666]}, {{245, 140.3}, ErrorBar[14.526]}, {{275, 189},ErrorBar[24.274]}, {{49, 39.2}, ErrorBar[10]}, {{86, 75.7},ErrorBar[14.414]}, {{167, 118}, ErrorBar[22.828]}, {{43.2, 50.7},ErrorBar[1.5]}, {{50, 59.5}, ErrorBar[1.4]}, {{57.3, 61.8},ErrorBar[1.9]}, {{65.3, 67.6}, ErrorBar[1.7]}, {{73.9, 72.4},ErrorBar[1.9]}, {{83.2, 79.9}, ErrorBar[2.3]}, {{93.3, 84.4},ErrorBar[2.1]}, {{104.3, 86.7}, ErrorBar[2.7]}, {{47.9, 55.4},ErrorBar[2.1]}, {{68.4, 66.4}, ErrorBar[2.9]}};

y1 =.;

x1 =.;

data1 = Table[{MasData1[[i, 1, 1]], y1, x1, MasData1[[i, 1, 2]]},{i,Length[MasData1]}]

y2 =.;

x2 =.;

data2 = Table[{MasData2[[i, 1, 1]], y2, x2, MasData2[[i, 1, 2]]}, {i,Length[MasData2]}]

y3 =.;

x3 =.;

data3 = Table[{MasData3[[i, 1, 1]], y3, x3, MasData3[[i, 1, 2]]}, {i,Length[MasData3]}]

y4 =.;

x4 =.;

data4 = Table[{MasData4[[i, 1, 1]], y4, x4, MasData4[[i, 1, 2]]}, {i,Length[MasData4]}]

y5 =.;

x5 =.;

data5 = Table[{MasData5[[i, 1, 1]], y5, x5, MasData5[[i, 1, 2]]}, {i,Length[MasData5]}]

gamma = 5.55*^-6;

MJpsi = 3.1;

alphaem = 1/137;

Rg = 2^(2*(a + b*Log[y/0.45]) + 3)/Sqrt[Pi]*Gamma[(a + b*Log[y/0.45]) + 5/2]/ Gamma[(a + b*Log[y/0.45]) + 4];

data = Join[data1, data2, data3, data4, data5]

yvalues = {y1 -> 6.4025, y2 -> 8.0025, y3 -> 4.1525, y4 -> 3.2025,y5 -> 2.4025} (*mu^2*)

xvalues = {x1 -> 0.2478239650859146,x2 -> 0.2390601794032581, x3 -> 0.266809, x4 -> 0.2796636697708153, x5 -> 0.29570467203639167}

nlm = NonlinearModelFit[data /. {yvalues, values}, 3.89379*^5*1/(4.9 + 4*0.06*Log[w/90])*gamma*MJpsi^3*(Pi)^3/48/alphaem*(x/(y)^2*Rg*NN*(((4*y - MJpsi^2) + MJpsi^2)/((4*y - MJpsi^2) + w^2))^(-a -b*Log[y/0.45]))^2*(1 + (4*y - MJpsi^2)/MJpsi^2)*(1 + (Pi)^2/4*(a + b*Log[y/0.45])^2), {NN, a, b}, {w, y, x}]

I get the following error however,

NonlinearModelFit::fitc: Number of coordinates (-1) is not equal to the number of variables (3).

Is there an easy fix?

a,b, andNN? or are those common values for both data sets? – JimB Aug 02 '17 at 22:58yis, I think this question should be closed. It's just that (in my opinion) there is too much confusion and lack of specifics. – JimB Aug 03 '17 at 17:50