Yes, but that particular dataset isn't great.

Instead of the Fashionista dataset, let's use this one: https://github.com/bearpaw/clothing-co-parsing. The Fashionista dataset is too deep inside the MATLAB walled garden (I am living in a glass house and throwing stones).

This dataset is much more usable. 1004 of the images have pixel-level masks (each a MATLAB matrix, but one we can read). They look like this:

Let's load the images and masks.

    images = (File /@
        FileNames["~/Downloads/clothing-co-parsing-master/photos/*"])[[;; 1004]];

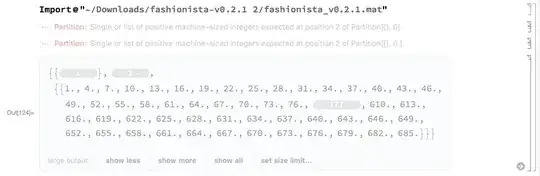

    masks = Import /@
       FileNames["~/Downloads/clothing-co-parsing-master/annotations/pixel-level/*"];

Now we'll resize the masks to something much smaller (my poor laptop isn't beefy enough for larger image dimensions). The input images will be resized by the network's NetEncoder. We add 1 to the masks so that the labels run from 1 to 59, the same range as the network's output classes.

    dims = {104, 157};
    amasks = Round[
         ArrayResample[First[#], {157, 104}, "Bin",
          Resampling -> "NearestRight"]] + 1 & /@ masks;
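
To illustrate why the masks are resampled with a nearest-neighbour scheme, here is a small sketch on a made-up label matrix (`toyMask` is a hypothetical stand-in for one imported mask, not part of the dataset). Interpolating class labels would blend them into meaningless in-between values, whereas nearest-neighbour resampling should only ever pick labels that already exist.

```wolfram
(* Toy label matrix with classes 0..58; a stand-in for one imported mask *)
SeedRandom[1];
toyMask = RandomInteger[{0, 58}, {8, 6}];

(* Same call as above, just with a smaller target size, then shift to 1..59 *)
smallMask = Round[
    ArrayResample[toyMask, {4, 3}, "Bin", Resampling -> "NearestRight"]] + 1;

Dimensions[smallMask]  (* {4, 3} *)

(* Every label in the small mask should already occur in the shifted original *)
SubsetQ[Union[Flatten[toyMask]] + 1, Union[Flatten[smallMask]]]
```

If the last line ever returned False, the resampling would be inventing labels, which would corrupt the training targets.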

So we can generate image->mask data for the neural net like so:

data = Thread[images -> amasks];
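
Because `Thread` pairs the two lists purely by position, it is worth checking that the photo and annotation lists line up. A minimal sketch, using made-up file names (the paths below are assumptions for illustration, not read from disk):

```wolfram
(* Hypothetical file lists; in practice these would come from FileNames *)
photoFiles = {"photos/0001.jpg", "photos/0002.jpg"};
maskFiles = {"pixel-level/0001.mat", "pixel-level/0002.mat"};

(* The base names should match one-to-one before pairing *)
aligned = FileBaseName /@ photoFiles === FileBaseName /@ maskFiles

(* Thread turns the two lists into a list of input -> target rules *)
pairs = Thread[photoFiles -> maskFiles]
```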

Now we'll build an extremely quick-and-dirty pixel-level segmentation network as a proof-of-concept.

This network is of my own design, which means it's probably terrible. However, it does go fast. We chop off the last few layers of SqueezeNet and attach a few skip connections. This network does a decent job of binary prediction; let's see how it does with 59-class prediction.

A quick improvement to this network would be to use more than just the first few layers of SqueezeNet. However, I don't want to watch my computer train a network slowly forever, so I have settled for about the first half of SqueezeNet.

    squeeze = NetModel["SqueezeNet V1.1 Trained on ImageNet Competition Data"];

    tnet = NetGraph[
      Join[
       Normal@NetFlatten[
         NetTake[
          NetReplacePart[squeeze,
           "Input" -> NetEncoder[{"Image", dims, "ColorSpace" -> "RGB"}]],
          "fire5"]],
       <|
        "f2u" -> {BatchNormalizationLayer[],
          ResizeLayer[{Scaled[2], Scaled[2]}, "Resampling" -> "Nearest"],
          ConvolutionLayer[59, 1], ElementwiseLayer["ELU"]},
        "f3u" -> {BatchNormalizationLayer[],
          ResizeLayer[{Scaled[2], Scaled[2]}, "Resampling" -> "Nearest"],
          ConvolutionLayer[59, 1], ElementwiseLayer["ELU"]},
        "f4u" -> {BatchNormalizationLayer[],
          ResizeLayer[{Scaled[4], Scaled[4]}, "Resampling" -> "Nearest"],
          ConvolutionLayer[59, 1, "PaddingSize" -> 1],
          ElementwiseLayer["ELU"]},
        "f5u" -> {BatchNormalizationLayer[],
          ResizeLayer[{Scaled[4], Scaled[4]}, "Resampling" -> "Nearest"],
          ConvolutionLayer[59, 1, "PaddingSize" -> 1],
          ElementwiseLayer["ELU"]},
        "cat" -> CatenateLayer[],
        "drop" -> DropoutLayer[],
        "sig" -> ElementwiseLayer["ReLU"],
        "con" -> {ResizeLayer[Reverse@dims], ConvolutionLayer[59, 1],
          TransposeLayer[{1 <-> 3, 1 <-> 2}], SoftmaxLayer[]}
        |>
       ],
      {Fold[#2 -> #1 &,
        Reverse@Keys@Normal@NetFlatten[NetTake[squeeze, "fire5"]]],
       "fire2" -> "f2u",
       "fire3" -> "f3u",
       "fire4" -> "f4u",
       "fire5" -> "f5u",
       {"f2u", "f3u", "f4u", "f5u"} -> "cat" -> "drop" -> "sig" -> "con"
       },
      "Input" -> NetEncoder[{"Image", dims, "ColorSpace" -> "RGB"}],
      "Output" -> NetDecoder[{"Class", Range[59], "InputDepth" -> 3}]
      ]
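
The output head is the least obvious part, so here is a toy-sized sketch of just that piece. The sizes (2 input channels, a 4×5 map, 3 classes) are made up for illustration. The 1×1 convolution produces one channel per class, the transpose moves the channel dimension last, the softmax normalises over classes at each pixel, and the "Class" decoder with "InputDepth" -> 3 should then pick the most likely class per pixel.

```wolfram
(* Toy version of the "con" head: 3 classes on a 4x5 feature map *)
head = NetInitialize@NetChain[{
     ConvolutionLayer[3, 1],               (* {2,4,5} -> {3,4,5}: one channel per class *)
     TransposeLayer[{1 <-> 3, 1 <-> 2}],   (* {3,4,5} -> {4,5,3}: channels last *)
     SoftmaxLayer[]                        (* per-pixel class probabilities *)
     },
    "Input" -> {2, 4, 5},
    "Output" -> NetDecoder[{"Class", Range[3], "InputDepth" -> 3}]];

(* Random input; the result should be a 4x5 matrix of labels from 1..3 *)
labels = head[RandomReal[1, {2, 4, 5}]];
Dimensions[labels]
```

This is also why the masks are stored as plain integer matrices in the range 1 to 59: they have the same shape and value range as the decoded output.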

Now we can train this network. I have frozen the pretrained SqueezeNet weights in conv1 and fire2 through fire4 by setting their learning rate multipliers to 0. This isn't necessary, and leaving them trainable should give a more accurate network.

    (* hold out the last 30 examples for validation; the two spans must not overlap *)
    net = NetTrain[tnet, data[[;; -31]], ValidationSet -> data[[-30 ;;]],
      LearningRateMultipliers -> {"conv1" -> 0, "fire2" -> 0,
        "fire3" -> 0, "fire4" -> 0}]

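To show what a zero learning rate multiplier does, here is a minimal sketch on a tiny made-up network (the layer names "a" and "b" and the random data are assumptions for illustration only). Layer "a" is frozen, so its weights should be identical before and after training, while "b" is free to change.

```wolfram
(* Tiny two-layer net; "a" plays the role of the pretrained layers *)
toy = NetInitialize@NetChain[
    <|"a" -> LinearLayer[3], "b" -> LinearLayer[1]|>, "Input" -> 2];
before = Normal[NetExtract[toy, {"a", "Weights"}]];

(* Train briefly on random data with layer "a" frozen *)
trained = NetTrain[toy,
   RandomReal[1, {50, 2}] -> RandomReal[1, {50, 1}],
   LearningRateMultipliers -> {"a" -> 0},
   MaxTrainingRounds -> 3];

(* Expected to be True: the frozen weights did not move *)
frozenQ = Normal[NetExtract[trained, {"a", "Weights"}]] == before
```
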
I trained this for a short time (5 rounds); let's see what it looks like:
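
One way to inspect a result, as a rough sketch: colour the predicted label matrix and compare it with the ground truth. The helper `pixelAccuracy` below is my own hypothetical name, not something from the neural network framework; it just reports the fraction of pixels whose predicted label matches the mask.

```wolfram
(* Fraction of positions where two label matrices agree *)
pixelAccuracy[pred_, truth_] :=
  N@Mean@Flatten@MapThread[Boole[#1 == #2] &, {pred, truth}, 2];

(* Toy check: 3 of the 4 labels agree *)
acc = pixelAccuracy[{{1, 2}, {3, 4}}, {{1, 2}, {3, 5}}]  (* 0.75 *)

(* Colorize gives each positive integer label its own colour *)
picture = Colorize[{{1, 2}, {3, 4}}]
```

With the names used above, `Colorize[net[First[data[[-1]]]]]` should show the predicted segmentation for the last validation example, and `pixelAccuracy[net[First[data[[-1]]]], Last[data[[-1]]]]` its agreement with the mask.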

That's not bad at all for such short training, especially since there are many improvements you could make to this extremely off-the-cuff network.

Good luck!