I am trying to simulate the heating and melting of a steel plate by means of FEM. The model is based on the nonlinear heat conduction equation in the axially symmetric case.

The problem statement is as follows: $$ \rho c_{eff}\frac{\partial T}{\partial t}= \frac{1}{r}\frac{\partial}{\partial r}\left(r\lambda \frac{\partial T}{\partial r} \right) + \frac{\partial}{\partial z}\left(\lambda \frac{\partial T}{\partial z} \right),\\ 0\leq r\leq L_r,~0\leq z\leq L_z,~0\leq t\leq t_f $$ $$\lambda \frac{\partial T}{\partial z}\Bigg|_{z=L_z}=q_{0}\exp(-a r^2),~~\frac{\partial T}{\partial r}\Bigg|_{r=L_r}=0,~~T|_{z=0}=T_0,\\T(0,r,z)=T_0$$

To take into account the latent heat of fusion $L$, the effective heat capacity $c_{eff}=c_{s}(1-\phi)+c_{l}\phi+ L\frac{d \phi}{dT}$ is introduced, where $\phi$ is the liquid-phase fraction and $c_s$, $c_l$ are the heat capacities of the solid and liquid phases, respectively. A smoothed Heaviside function

$$h(x,\delta)=\left\{\begin{array}{ll} 0, & x<-\delta\\ 0.5\left(1+\frac{x}{\delta}+\frac{1}{\pi}\sin\left(\frac{\pi x}{\delta}\right) \right), & |x|\leq \delta\\ 1, & x>\delta \end{array} \right.$$

is employed to describe the mushy zone, so that $\phi(T)=h(T-T_m,\Delta T_{m}/2)$, where $T_m$ and $\Delta T_m$ are the melting temperature and the melting range, respectively. An FE approximation is used for the spatial discretization of the PDE, whereas the time derivative is approximated by a first-order finite difference scheme: $$\left.\frac{\partial T}{\partial t}\right|_{t=t^{k}} \approx \frac{T(t^k,r,z)-T(t^{k-1},r,z)}{\tau}$$

where $\tau$ is the time step size. To calculate $c_{eff}$ at the $k$-th time step, the temperature field from time step $k-1$ is used. After discretization in time, the equation can be rewritten as:

$$\rho c_{eff}\left(T(t^{k-1},r,z)\right) \frac{T(t^k,r,z)-T(t^{k-1},r,z)}{\tau}=\frac{1}{r}\frac{\partial}{\partial r}\left(r\lambda \frac{\partial T(t^k,r,z)}{\partial r} \right) + \frac{\partial}{\partial z}\left(\lambda \frac{\partial T(t^k,r,z)}{\partial z} \right)$$
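To make the structure of each time step explicit, here is how I read the resulting algebraic problem. The symbols $K$ (stiffness matrix), $D$ (damping matrix), $F$ (load vector) and $T^k$ (vector of nodal temperatures at step $k$) are my own labels for the FE quantities, not notation from any reference:

$$\left(\frac{1}{\tau}D\left(T^{k-1}\right)+K\right)T^{k}=F+\frac{1}{\tau}D\left(T^{k-1}\right)T^{k-1}$$

With constant $\lambda$ and a time-independent heat flux, only $D$ changes from step to step (through $\rho c_{eff}$); $K$ and $F$ should stay the same throughout, assuming the boundary conditions do not change in time.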

At each time step the `DampingCoefficients` are updated in `InitializePDECoefficients[]`, so that interpolation is used for $c_{eff}$. This approach leads to a significant growth of the computation time compared with solving the linear problem with $c_{eff}$ = const. I also tried using `ElementMarker` to set a fixed value of $c_{eff}$ in each element. This avoids interpolation, but the computation time becomes even larger. I cannot understand this last fact at all. In my view, the FE matrix assembly should take less time when interpolation for $c_{eff}$ is avoided.

Needs["NDSolve`FEM`"];

Needs["DifferentialEquations`NDSolveProblems`"];

Needs["DifferentialEquations`NDSolveUtilities`"];

Setting of the computational domain dimensions and mesh generation:

Lr = 2*10^-2; (*dimension of computational domain in r-direction*)

Lz = 10^-2; (*dimension of computational domain in z-direction*)

mesh = ToElementMesh[FullRegion[2], {{0, Lr}, {0, Lz}},MaxCellMeasure -> {"Length" -> Lr/50}, "MeshOrder" -> 1]

mesh["Wireframe"]

Input parameters of the model:

lambda = 22; (*heat conductivity*)

density = 7200; (*density*)

Cs = 700; (*specific heat capacity of solid*)

Cl = 780; (*specific heat capacity of liquid*)

LatHeat = 272*10^3; (*latent heat of fusion*)

Tliq = 1812; (*melting temperature*)

MeltRange = 100; (*melting range*)

To = 300; (*initial temperature*)

SPow = 1000; (*source power*)

R = Lr/4; (*radius of heat source spot*)

a = Log[100]/R^2;

qo = (SPow*a)/Pi;

q[r_] := qo*Exp[-r^2*a]; (*heat flux distribution*)

tau = 10^-3; (*time step size*)

ProcDur = 0.2; (*process duration*)

Smoothed Heaviside function:

Heviside[x_, delta_] := Module[{res},

res = Piecewise[

{

{0, x < -delta},

{0.5*(1 + x/delta + 1/Pi*Sin[(Pi*x)/delta]), Abs[x] <= delta},

{1, x > delta}

}

];

res

]

Smoothed Heaviside function derivative:

HevisideDeriv[x_, delta_] := Module[{res},

res = Piecewise[

{

{0, Abs[x] > delta},

{1/(2*delta)*(1 + Cos[(Pi*x)/delta]), Abs[x] <= delta}

}

];

res

]
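For reference, the derivative coded above follows from differentiating the smooth branch of $h$. With $\delta=\Delta T_m/2$, this gives (my own derivation, worth double-checking):

$$\frac{d\phi}{dT}=\left\{\begin{array}{ll} \frac{1}{2\delta}\left(1+\cos\left(\frac{\pi (T-T_m)}{\delta}\right)\right), & |T-T_m|\leq\delta\\ 0, & |T-T_m|>\delta \end{array}\right. \qquad \int_{T_m-\delta}^{T_m+\delta}L\frac{d\phi}{dT}\,dT=L$$

So the full latent heat is released over the melting range. With the parameter values above ($\delta=50$, $L=272\cdot 10^3$), the latent term peaks at $L/\delta=5440$ at $T=T_m$, where $\phi=0.5$, so $c_{eff}(T_m)=0.5\cdot 700+0.5\cdot 780+5440=6180$, roughly nine times $c_s$.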

Effective heat capacity:

EffectHeatCapac[tempr_] := Module[{phase},

phase = Heviside[tempr - Tliq, MeltRange/2];

Cs*(1 - phase) + Cl*phase +LatHeat*HevisideDeriv[tempr - Tliq, 0.5*MeltRange]

]

Numerical solution of PDE:

ts = AbsoluteTime[];

vd = NDSolve`VariableData[{"DependentVariables" -> {u},"Space" -> {r,z},"Time" -> t}];

sd = NDSolve`SolutionData[{"Space","Time"} -> {ToNumericalRegion[mesh], 0.}];

DirichCond=DirichletCondition[u[t, r, z] ==To,z==0];

NeumCond=NeumannValue[q[r],z==Lz];

initBCs=InitializeBoundaryConditions[vd,sd, {{DirichCond, NeumCond}}];

methodData = InitializePDEMethodData[vd, sd] ;

discreteBCs = DiscretizeBoundaryConditions[initBCs, methodData, sd];

xlast = Table[{To}, {methodData["DegreesOfFreedom"]}];

TemprField = ElementMeshInterpolation[{mesh}, xlast];

NumTimeStep = Floor[ProcDur/tau];

For[i = 1, i <= NumTimeStep, i++,

(*

(*Setting of PDE coefficients for linear problem*)

pdeCoefficients=InitializePDECoefficients[vd,sd,"ConvectionCoefficients"-> {{{{-lambda/r, 0}}}},

"DiffusionCoefficients" -> {{-lambda*IdentityMatrix[2]}},

"DampingCoefficients" -> {{Cs*density}}];

*)

(*Setting of PDE coefficients for nonlinear problem*)

pdeCoefficients =

InitializePDECoefficients[vd, sd,

"ConvectionCoefficients" -> {{ {{-(lambda/r), 0}} }},

"DiffusionCoefficients" -> {{-lambda*IdentityMatrix[2]}},

"DampingCoefficients" -> {{EffectHeatCapac[TemprField[r, z]]*

density}}];

discretePDE = DiscretizePDE[pdeCoefficients, methodData, sd];

{load, stiffness, damping, mass} = discretePDE["SystemMatrices"];

DeployBoundaryConditions[{load, stiffness, damping},

discreteBCs];

A = damping/tau + stiffness;

b = load + damping.xlast/tau;

x = LinearSolve[A,b,Method -> {"Krylov", Method -> "BiCGSTAB",

"Preconditioner" -> "ILU0","StartingVector"->Flatten[xlast,1]}];

TemprField = ElementMeshInterpolation[{mesh}, x];

xlast = x;

]

te = AbsoluteTime[];

te - ts

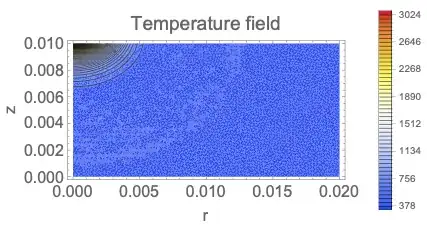

Visualization of the calculation results

ContourPlot[TemprField[r, z], {r, z} \[Element] mesh,

AspectRatio -> Lz/Lr, ColorFunction -> "TemperatureMap",

Contours -> 50, PlotRange -> All,

PlotLegends -> Placed[Automatic, After], FrameLabel -> {"r", "z"},

PlotPoints -> 50, PlotLabel -> "Temperature field", BaseStyle -> 16]

On my laptop the computation times are 63 sec and 2.17 sec for the nonlinear and linear problems, respectively. This question can be generalized to the case when $\lambda=\lambda(T)$. I would appreciate it if anyone could show me a good way to reduce the computation time. Thanks in advance for your help.

`methodData` is undefined. Please edit your post and add all relevant code. – Henrik Schumacher Mar 21 '19 at 09:23

[…] `"StartingVector"`. You should also try to find suitable values for the `"Tolerance"` option; the default values are usually way too low. Moreover, using the backend ``SparseArray`KrylovLinearSolve`` directly is usually a bit faster and also allows for reusing the preconditioner (generated by ``SparseArray`SparseMatrixILU`` and applied with ``SparseArray`SparseMatrixApplyILU``). – Henrik Schumacher Mar 21 '19 at 09:30

[…] ``SparseArray`KrylovLinearSolve``; so please ping me with @Henrik in a comment when you are done. – Henrik Schumacher Mar 21 '19 at 09:32

[…] `x` into an `InterpolatingFunction` in order to discretize it again in the next iteration is just a waste. Try generate the next iteration's `"DampingCoefficients"` from the previous iteration's `x` directly; this should basically amount to `EffectHeatCapac /@ (density x)`, no? (`EffectHeatCapac[density x]` might also work...) – Henrik Schumacher Mar 21 '19 at 09:37

[…] `linearDiscretePDE["SystemMatrices"] + nonlinearDiscretePDE["SystemMatrices"]`. Before using `"Krylov"` try `Method->"Pardiso"` for `LinearSolve`. – user21 Mar 22 '19 at 05:53

`linCoeffs=InitializePDECoefficients[linear coefficients]; lin=Discretize[linCoeffs];` and in the loop `nonLin=InitializePDECoefficients[nonlinear coeffs]; nonlin=Discretize[nonLin]; stifffness = lin["StiffnessMatrix"] + nonlin["StiffnessMatrix"];` etc.. – user21 Mar 25 '19 at 07:01
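A minimal sketch of the splitting suggested in the comments above: the temperature-independent part (diffusion and convection) is discretized once, and only the damping matrix is reassembled in each step. It assumes that every definition from the question (`vd`, `sd`, `methodData`, `discreteBCs`, `mesh`, `lambda`, `density`, `EffectHeatCapac`, `To`, `tau`, `NumTimeStep`) has already been evaluated. The names `linCoefficients`, `nonlinCoefficients`, `load0`, `stiffness0`, `damping0` and `mass0` are introduced here for illustration only. I have not timed it, so any speed-up is an expectation rather than a measurement.

```mathematica
Needs["NDSolve`FEM`"];

(* Constant part: lambda does not depend on T, so discretize it once *)
linCoefficients = InitializePDECoefficients[vd, sd,
   "ConvectionCoefficients" -> {{{{-lambda/r, 0}}}},
   "DiffusionCoefficients" -> {{-lambda*IdentityMatrix[2]}}];
{load0, stiffness0, damping0, mass0} =
  DiscretizePDE[linCoefficients, methodData, sd]["SystemMatrices"];

xlast = Table[{To}, {methodData["DegreesOfFreedom"]}];
TemprField = ElementMeshInterpolation[{mesh}, xlast];

Do[
 (* Temperature-dependent part: only the damping coefficient is set *)
 nonlinCoefficients = InitializePDECoefficients[vd, sd,
   "DampingCoefficients" -> {{EffectHeatCapac[TemprField[r, z]]*density}}];
 damping =
  DiscretizePDE[nonlinCoefficients, methodData, sd]["SystemMatrices"][[3]];
 (* DeployBoundaryConditions modifies its arguments, so use fresh copies *)
 load = load0; stiffness = stiffness0;
 DeployBoundaryConditions[{load, stiffness, damping}, discreteBCs];
 A = damping/tau + stiffness;
 b = load + damping.xlast/tau;
 (* direct sparse solver, as suggested in the comments *)
 x = LinearSolve[A, b, Method -> "Pardiso"];
 TemprField = ElementMeshInterpolation[{mesh}, x];
 xlast = x,
 {NumTimeStep}]
```

The damping coefficient still goes through `TemprField`, so the interpolation cost itself is not removed here; the sketch only avoids reassembling the stiffness matrix and load vector in every step. If $\lambda=\lambda(T)$, the diffusion and convection coefficients would have to move into the loop as well.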