I want to fit data in the hope of obtaining an estimate for other data points (i.e. a good guess for the last 4 variables as a function of the first).

Example data:

    data = {
      {3.38, 1.028877662, 2.009398505, 2.067322478, 4.214191194},
      {3.4,  1.030082372, 1.995543604, 2.105894366, 4.234656059},
      {3.5,  1.035994874, 1.992385102, 2.200815333, 4.282937808},
      {3.57, 1.036731784, 1.986961442, 2.224357922, 4.307824219},
      {3.6,  1.036978228, 1.985081926, 2.231988058, 4.315914728},
      {3.62, 1.037229736, 1.983076125, 2.239730469, 4.323988127},
      {3.78, 1.038461995, 1.969909372, 2.283628754, 4.374960036},
      {3.8,  1.038741973, 1.96716995,  2.291334094, 4.384253554}}

This data was obtained using a computationally expensive method. I want to obtain good guesses for more data points located in between (interpolation) and slightly outside this region (extrapolation). These guesses will then be fed back into the same computationally expensive method, where a better initial guess makes computing the exact answer for the additional points cheaper.

Thus, I am seeking a fit that will hopefully be predictive in the current data range and slightly outside it.

There is no general expectation for the shape of the functions, except that each is expected to be continuous, monotonic (increasing or decreasing), and probably once differentiable.

(The data is exact, so ideally the fit goes exactly through the provided data points. Also, I do not care about accuracy for points further than, say, 0.2 away from the current data points. The purpose is not to guess the true underlying function, which will no doubt be too complicated and not even well defined for all real values.)
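To make the "passes exactly through the points" requirement concrete, here is a minimal sketch using the built-in `Interpolation`, with one interpolant per dependent column. It assumes `data` is defined as in the example above; the names `interps` and `guess` are hypothetical. Piecewise cubic interpolation reproduces the data points but does not by itself guarantee monotonicity between them, so this is only a baseline and not a full answer to the question.

```mathematica
(* Sketch only: assumes `data` is defined as in the example above. *)

(* One InterpolatingFunction per dependent column (columns 2 to 5),
   each built from {x, y} pairs. *)
interps = Table[
   Interpolation[data[[All, {1, k}]], InterpolationOrder -> 3],
   {k, 2, 5}];

(* Initial guess for all four variables at a new value of the first one. *)
guess[x0_?NumericQ] := #[x0] & /@ interps

guess[3.45]   (* inside the data range *)
guess[3.85]   (* slightly outside: extrapolates and issues a warning *)
```

Outside the sampled range, `Interpolation` extrapolates and emits an `InterpolatingFunction::dmval` message, which is a useful reminder that those values are less trustworthy.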

I tried using NetChain which was not producing amazing results (probably due to my ignorance in how to use it). I had expected that as I added layers it would eventually at least overfit and go through the data points but in fact I couldn't produce any fit at all that actually went through the data points. (Of course this approach does not use the monotonicity constraint and something else might be better.)

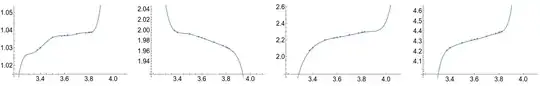

Here are example fits that I would consider a decent fit (although the first function is not monotonic and would be a slightly better fit if it were):

These were obtained by fitting polynomials of order 7 and adding points at the ends to get better asymptotic behavior. I would be very happy if I could reproduce a similar fit without having to fine-tune the polynomial order. (The asymptotic behavior is not actually important, since I only care about points less than 0.2 away from the current data points, so I guess I did that mostly for aesthetic reasons.)
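For comparison, a polynomial of this kind can be sketched as follows. This is not the exact procedure described above, because the extra end points are not given here. With only the eight sample points, a polynomial of degree at most 7 is uniquely determined, so the sketch uses the built-in `InterpolatingPolynomial` rather than a least-squares fit. It assumes `data` is defined as in the example above; `polys`, `polyGuess`, and the variable `t` are hypothetical names.

```mathematica
(* Sketch only: assumes `data` is defined as in the example above
   and that the symbol t has no value assigned. *)

(* One interpolating polynomial per dependent column (columns 2 to 5).
   Eight points determine a polynomial of degree at most 7. *)
polys = Table[
   InterpolatingPolynomial[data[[All, {1, k}]], t],
   {k, 2, 5}];

(* Evaluate all four polynomials at a new value of the first variable. *)
polyGuess[x0_?NumericQ] := polys /. t -> x0

polyGuess[3.45]
```

A single high-degree polynomial passes through every point but can oscillate between them, which would be consistent with the non-monotonic behavior noted above for the first function.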

P.S. The data as posted here is obviously only accurate to at most 9 digits, but that is just the truncation I used to keep the post readable. I don't think the error introduced by this rounding will pose any problem for finding a fit, but if it does for some method, I can provide the high-precision data.

Also, I used the word "fit" in the title, but perhaps "interpolation" (and slight extrapolation) is more accurate. The resulting function should pass through the data points (or very close to them).

Context:

The first parameter defines a family of non-convex optimization problems. The other parameters are parameters within that non-convex optimization problem over which an objective is optimized. The point in parameter space (i.e. the last 4 variables) is the point that minimizes the last parameter under the constraint that some objective function is positive.

Monotonicity in the last variable (as a function of the first) is mathematically guaranteed. Monotonicity in the other variables is simply observed.
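The observed monotonicity can be checked directly on the samples. The sketch below assumes `data` is defined as in the example above and sorted by the first variable; `signs` and `monotonicQ` are hypothetical names. It only tests the sampled points and says nothing about the behavior between them.

```mathematica
(* Sketch only: assumes `data` is defined as in the example above,
   with rows sorted by increasing first variable. *)

(* Distinct signs of the successive differences in each dependent column. *)
signs = Table[Union[Sign[Differences[data[[All, k]]]]], {k, 2, 5}]

(* A column is strictly monotonic over the samples when only one
   nonzero sign occurs: {1} for increasing, {-1} for decreasing. *)
monotonicQ = MatchQ[#, {1} | {-1}] & /@ signs
```

For the sample data, `signs` evaluates to `{{1}, {-1}, {1}, {1}}`: the second dependent variable decreases and the other three increase.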

But the space is definitely not as simple as a low-order polynomial or a log or something. Some combination of multiple logs etc. could perhaps work, but I would like to avoid spending a lot of time, case by case, looking for a function that resembles my data. I'm hoping to find an automated process for this.

– Kvothe Jul 20 '23 at 19:28

ResourceFunction["MonotonicInterpolation"] seems like it could be helpful. – Kvothe Aug 01 '23 at 13:18
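A minimal sketch of how that suggestion might be tried, one interpolant per dependent column. It assumes `data` is defined as in the question and that the repository function accepts a list of {x, y} pairs in the same way the built-in `Interpolation` does; that calling convention is an assumption here and should be checked against the function's documentation page. The names `mono` and `monoGuess` are hypothetical.

```mathematica
(* Sketch only: assumes `data` is defined as in the question, and that the
   repository function takes {x, y} pairs like Interpolation does. *)
mono = Table[
   ResourceFunction["MonotonicInterpolation"][data[[All, {1, k}]]],
   {k, 2, 5}];

(* Initial guess for all four variables at a new value of the first one. *)
monoGuess[x0_?NumericQ] := #[x0] & /@ mono

monoGuess[3.45]
```

How the result behaves slightly outside the data range is not addressed by this sketch and would need to be tested separately.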