I want to try Advanced Activations Layers in Mathematica, but I couldn't find them.

So I tried to implement them with `ElementwiseLayer`.

Thanks to @nikie, LeakyReLU, ELU and ThresholdedReLU can be written like this:

LeakyReLU: `ElementwiseLayer[Ramp[#] - Ramp[-#]*0.3 &]`

ELU: `ElementwiseLayer[Ramp[#] - Ramp[-#]/#*(Exp[#] - 1) &]`

ThresholdedReLU: `ElementwiseLayer[Ramp[# - 1]/(# - 1)*Ramp[#] &]`
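One caveat, as far as I can tell: the ELU and ThresholdedReLU expressions above divide by `#` and `# - 1`, so they evaluate to 0/0 at exactly x = 0 and x = 1 respectively. A division-free ELU can be built from `Ramp` and `Exp` alone, because `Exp[-Ramp[-x]] - 1` equals `Exp[x] - 1` for x < 0 and 0 for x ≥ 0. A minimal sketch; the helper name `elu` and its `alpha` argument are my own (hypothetical), not a built-in:

```mathematica
(* hypothetical helper: ELU with parameter alpha, no division *)
(* x >= 0: Ramp[x] = x, Exp[-Ramp[-x]] - 1 = 0        -> x             *)
(* x <  0: Ramp[x] = 0, Exp[-Ramp[-x]] - 1 = e^x - 1  -> alpha (e^x - 1) *)
elu[alpha_] := ElementwiseLayer[Ramp[#] + alpha*(Exp[-Ramp[-#]] - 1) &]

elu[1.][{-1., 0., 1.}]
(* should be approximately {-0.632, 0., 1.}, since Exp[-1] - 1 is about -0.632 *)
```

With `alpha = 1.` this matches the ELU line above everywhere except at x = 0, where it returns 0 instead of 0/0.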

PReLU has a learned parameter alpha, but I don't know how to train the net...

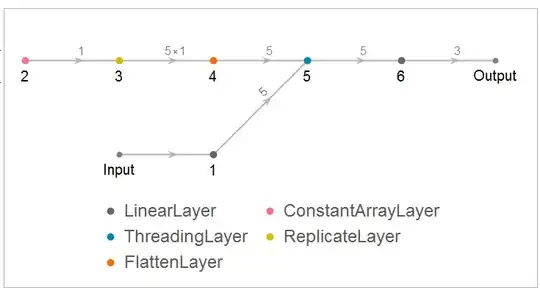

`graph = NetGraph[{ConstantArrayLayer["Array" -> ConstantArray[0.3, 5]], ThreadingLayer[Ramp[#] - Ramp[-#]*#2 &]}, {{NetPort["Input"], 1} -> 2}]`

`graph[{-1, -0.5, 0, 0.5, 1}] (*{-0.3, -0.15, 0., 0.5, 1.}*)`
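To train the slopes, the `graph` above can be passed directly to `NetTrain`, since the `"Array"` of a `ConstantArrayLayer` is a learnable parameter. A minimal sketch, assuming synthetic data generated with a known slope of 0.1 (my own choice, purely so the result can be checked); as far as I know `NetTrain` attaches a `MeanSquaredLossLayer` by default for a real-valued output like this one:

```mathematica
(* the PReLU graph from above: one learnable slope per input element *)
graph = NetGraph[{ConstantArrayLayer["Array" -> ConstantArray[0.3, 5]],
    ThreadingLayer[Ramp[#] - Ramp[-#]*#2 &]}, {{NetPort["Input"], 1} -> 2}];

(* synthetic pairs x -> PReLU(x) with an assumed "true" slope of 0.1 *)
data = Table[
   With[{x = RandomReal[{-1, 1}, 5]}, x -> Ramp[x] - 0.1*Ramp[-x]],
   {2000}];

(* the ConstantArrayLayer is the only layer with parameters to learn *)
trained = NetTrain[graph, data, MaxTrainingRounds -> 50];

(* learned slopes: should have moved from 0.3 towards 0.1 *)
Normal@NetExtract[trained, {1, "Array"}]
```

Because `ConstantArray[0.3, 5]` has one entry per input element, this learns a separate slope for each position rather than a single shared alpha.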

Is there a simpler way to build these Advanced Activations Layers?

Application: this post used `leakyReLU[alpha_] := ElementwiseLayer[Ramp[#] - alpha*Ramp[-#] &]`.
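A parametrized layer like this drops into a `NetChain` like any other activation. A small sketch; the layer sizes and the `"Input" -> 5` shape are arbitrary choices for illustration:

```mathematica
(* same parametrized LeakyReLU definition as in the post quoted above *)
leakyReLU[alpha_] := ElementwiseLayer[Ramp[#] - alpha*Ramp[-#] &]

(* arbitrary example architecture: 5 inputs -> 16 hidden units -> 1 output *)
net = NetInitialize@NetChain[
    {LinearLayer[16], leakyReLU[0.3], LinearLayer[1]}, "Input" -> 5];

(* weights are random, so the value is meaningless; this only checks the wiring *)
net[{-1, -0.5, 0, 0.5, 1}]
```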

Attribute `Listable` seems to be the problem. You can try with `Function` directly: `g = Function[x, Piecewise[{{0.3*x, x < 0}, {x, x > 0}}], Listable]` – Anjan Kumar, May 22 '17 at 04:53

For PReLU, I think you need to use `ConstantArrayLayer` for learned constants, and (I guess) `ThreadingLayer` to combine input from the constant and the "data" input layers – Niki Estner, May 22 '17 at 07:09