According to the documentation about the pseudospectral difference-order:

It says:

Following the discussion here:

I found that the messy behavior always appears at the artificial boundary in the $\omega$-direction (I impose $u(t,\theta,\omega_{cutoff})=0$ because $\omega$ is meant to be unbounded). Perhaps this is the so-called Runge phenomenon? In principle, we should not use the pseudospectral difference order in all directions. However, it is not clear how to specify the difference order for each direction separately.

Here is the code:

    a = 1;               (* width of the initial Gaussian *)
    T = 1;               (* final time *)
    ωb = -15; ωt = 15;   (* artificial cutoff in ω *)
    A = 8;               (* amplitude of the Sin[3 θ] term *)
    γ = .1;
    kT = 0.1;
    φ = 0;

    (* method-of-lines settings: n grid points per direction, difference order o *)
    mol[n_Integer, o_: "Pseudospectral"] := {"MethodOfLines",
      "SpatialDiscretization" -> {"TensorProductGrid", "MaxPoints" -> n,
        "MinPoints" -> n, "DifferenceOrder" -> o}}

    With[{u = u[t, θ, ω]},
      eq = D[u, t] == -D[ω u, θ] - D[-A Sin[3 θ] u, ω] +
         γ (1 + Sin[3 θ]) kT D[u, {ω, 2}] + γ (1 + Sin[3 θ]) D[ω u, ω];
      ic = u == E^(-((ω^2 + θ^2)/(2 a^2))) 1/(2 π a) /. t -> 0];

    (* periodic in θ, zero at the ω cutoffs *)
    ufun = NDSolveValue[{eq, ic, u[t, -π, ω] == u[t, π, ω],
        u[t, θ, ωb] == 0, u[t, θ, ωt] == 0}, u, {t, 0, T}, {θ, -π, π}, {ω, ωb, ωt},
       Method -> mol[61], MaxSteps -> Infinity]; // AbsoluteTiming

    (* BoxRatios needs positive entries, so ωt is used instead of the negative ωb *)
    plots = Table[
       Plot3D[Abs[ufun[t, θ, ω]], {θ, -π, π}, {ω, ωb, ωt}, AxesLabel -> Automatic,
        PlotPoints -> 30, BoxRatios -> {Pi, ωt, 1},
        ColorFunction -> "LakeColors", PlotRange -> All], {t, 0, T,
        T/10}]; // AbsoluteTiming

    ListAnimate[plots]

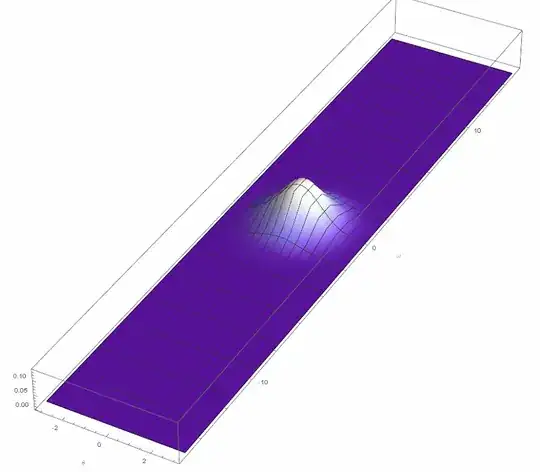

$t=0$

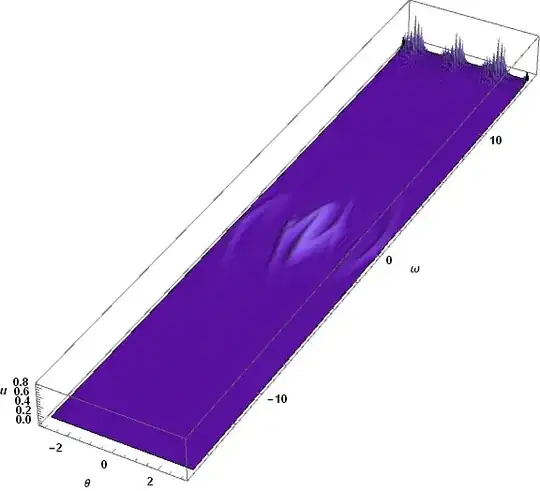

$t=0.8$

$t=0.9$

One can clearly see that the large deviation occurs only in the $\omega$-direction, which is consistent with the description above (neither periodic nor Chebyshev).

Is it possible to have something like the following?

    "DifferenceOrder" -> {"Pseudospectral", Automatic}

The above simply doesn't work.

Update: I finally figured out that the problem is simply due to convection domination: it depends on the ratio of the convection term to the diffusion term. Artificial diffusion or a denser grid is necessary.
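To make the convection-to-diffusion ratio concrete, here is a rough sketch that estimates a cell Péclet number in the $\omega$-direction for the parameters in the question. The names `vω`, `dω`, `h`, and `pe` are introduced here only for illustration, the definition $|v|h/D$ is one common convention, and the uniform spacing `h` is an assumption (the grid actually used by `NDSolve` may be non-uniform), so this is an order-of-magnitude estimate at best.

```mathematica
(* Parameters copied from the question *)
A = 8; γ = 0.1; kT = 0.1;
ωb = -15; ωt = 15;
n = 61;  (* grid points per direction, as in mol[61] *)

(* ω part of the equation written as -∂ω(vω u) + dω ∂ω² u *)
vω[θ_, ω_] := -A Sin[3 θ] - γ (1 + Sin[3 θ]) ω;  (* convection velocity in ω *)
dω[θ_] := γ (1 + Sin[3 θ]) kT;                   (* diffusion coefficient in ω *)

(* Assumption: uniform grid spacing between the two cutoffs *)
h = (ωt - ωb)/(n - 1);

(* Cell Péclet number: convection strength relative to diffusion on one cell *)
pe[θ_, ω_] := Abs[vω[θ, ω]] h/dω[θ];

pe[π/6, ωt]  (* ≈ 275, far above 1 *)
```

Where `Sin[3 θ] == -1` the diffusion coefficient `dω` vanishes, so the ratio is unbounded there, and the $\theta$-direction has no diffusion term at all in the equation.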

Update (8/25): With an implicit Runge-Kutta scheme, the solution is much more stable. The remaining problem is convergence.
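The update does not show how the time integrator was selected. As a hedged sketch, a time-integration method can be passed to the method of lines through a nested `Method` suboption. The name `molIRK` is hypothetical, and the actual settings used for the update (order, step size) are not known, so this should be read as one possible configuration rather than the one that produced the result.

```mathematica
(* Hypothetical variant of mol that also selects the time integrator. *)
(* Assumption: default "ImplicitRungeKutta" settings; the update may have used others. *)
molIRK[n_Integer, o_: "Pseudospectral"] := {"MethodOfLines",
  "SpatialDiscretization" -> {"TensorProductGrid", "MaxPoints" -> n,
    "MinPoints" -> n, "DifferenceOrder" -> o},
  Method -> "ImplicitRungeKutta"}
```

It would be used as `Method -> molIRK[61]` in the `NDSolveValue` call above.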

What I expect is something similar to the following smooth behavior.

But so far I have not found a method that achieves this. Is there one?

`y` is not one of the independent variables in your code. Also, the sentence, "In principle, we should not should pseudospectral difference-order for all direction." is garbled. For better responses by readers, please correct these and any other issues in the question. – bbgodfrey Aug 22 '18 at 18:08

`Pseudospectral` in Mathematica is implemented following these 2 instances. – xzczd Aug 23 '18 at 05:50

`NDSolve` is free from Runge phenomenon. – xzczd Aug 23 '18 at 06:18

[…] `D[u[t, θ, ω], t] == 0`. One solution is, of course, `u == 0`. If there are more static solutions, there may be many more, and the initial value calculation may yield a mix of them. By the way, the choice of boundary conditions remains an issue. – bbgodfrey Aug 27 '18 at 04:56

[…] `NDSolve` at the moment), only with a special setting for the step size. – xzczd Aug 27 '18 at 15:25

[…] `Method -> {"MethodOfLines", "SpatialDiscretization" -> {"TensorProductGrid", "MaxPoints" -> {41, 83}, "MinPoints" -> {41, 83}, "DifferenceOrder" -> 4}}` What's more, if the `fix` function is added, only about 9 seconds is taken, so once again, the time integration is trivial. – xzczd Aug 27 '18 at 15:54